As I’m sure many of you know, the x86 architecture has been around for quite some time. It has its roots in Intel’s 8086 processor, the first in the family. Indeed, even the original 8086 inherits a small amount of architectural structure from Intel’s 8-bit predecessors, dating all the way back to the 8008. The 8086 evolved into the 186, 286, 386, and 486, and then the chips got names: the Pentium would have been the 586.

Along the way, new instructions were added, but the core of the x86 instruction set was retained. And a lot of effort was spent making the same instructions faster and faster. This has become so extreme that, even though the 8086 and modern Xeon processors can both run a common subset of code, the two CPUs architecturally look about as far apart as they possibly could.

So here we are today, with even the highest-end x86 CPUs still supporting the archaic 8086 real mode, where programs address physical memory directly, with no paging and no memory protection in between. Having this level of backwards compatibility can cause problems, especially with respect to multitasking and memory protection, but it was a feature of previous chips, so it’s a feature of current x86 designs. And there’s more!

I think it’s time to put a lot of the legacy of the 8086 to rest, and let the modern processors run free.

Some Key Terms

To understand my next arguments, you need to understand the very basics of a few concepts. Modern x86 is, to use the proper terminology, a CISC, superscalar, out-of-order Von Neumann architecture with speculative execution. What does that all mean?

Von Neumann architectures are CPUs where both program and data exist in the same address space. This is the basic ability to run programs from the same memory in which regular data is stored; there is no logical distinction between program and data memory.

Superscalar CPU cores are capable of running more than one instruction per clock cycle. This means that an x86 CPU running at 3 GHz can run more than 3 billion instructions per second when the code allows it. This goes hand-in-hand with the out-of-order nature of modern x86; the CPU can run instructions in a different order than they are presented if doing so would be faster and does not change the result.
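To make the effect of issue width concrete, here is a minimal sketch of a toy scheduler. It is not how any real CPU works: it assumes every instruction takes exactly one cycle, and the six-instruction program and the `cycles_needed` helper are invented for illustration.

```python
def cycles_needed(deps, width):
    """Count cycles to run a program on a toy out-of-order core.

    deps[i] lists the earlier instructions whose results instruction i needs.
    Each cycle, up to `width` ready instructions are issued, oldest first.
    Assumption: every instruction completes in exactly one cycle.
    """
    done_cycle = {}                      # instruction index -> cycle it ran in
    pending = list(range(len(deps)))
    cycle = 0
    while pending:
        cycle += 1
        issued = []
        for i in pending:
            if len(issued) == width:
                break
            # Ready only if every dependency finished in an earlier cycle.
            if all(done_cycle.get(d, cycle) < cycle for d in deps[i]):
                issued.append(i)
        for i in issued:
            done_cycle[i] = cycle
            pending.remove(i)
    return cycle


# Hypothetical program:
# 0: a = load x    1: b = load y    2: c = a + b
# 3: d = load z    4: e = d * 2     5: f = c + e
program = [[], [], [0, 1], [], [3], [2, 4]]

for width in (1, 2, 4):
    print(width, cycles_needed(program, width))
```

In this model, widening the core from one to two instructions per cycle cuts the run from six cycles to four, but going from two to four only saves one more cycle, because the dependency chain limits how much work is ready at once.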

Finally, there’s the speculative keyword causing all this trouble. Speculative execution means running the instructions on one side of a branch before it is clear whether they should be run in the first place. Think of it as running the code in an if statement before knowing whether the condition for said if statement is true, and reverting the state of the world if the condition turns out to be false. This is inherently risky territory, because the reverted work can still leave measurable traces behind, for example in the caches, and side-channel attacks exploit exactly that.
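The idea of running ahead and rolling back can be sketched in a few lines. This is a toy model under loud assumptions: the `speculate` function, the register dictionary, and the `cache` set are all hypothetical stand-ins, and real hardware tracks far more state than this.

```python
def speculate(regs, cache, predicted, condition, body):
    """Toy model: run `body` ahead of a slow branch, roll back on a wrong guess."""
    checkpoint = dict(regs)            # snapshot of the register state
    if predicted:
        body(regs, cache)              # run ahead before the condition is known
    actual = condition(checkpoint)     # the branch condition finally resolves
    if actual != predicted:
        regs.clear()
        regs.update(checkpoint)        # squash: restore the registers...
        if actual:
            body(regs, cache)          # ...and take the correct path instead
    return actual


def if_body(regs, cache):
    cache.add(0x1000)                  # a load pulls address 0x1000 into the cache
    regs["rbx"] = regs["rax"] + 41


regs = {"rax": 1, "rbx": 0}
cache = set()                          # addresses currently cached (toy model)
taken = speculate(regs, cache, predicted=True,
                  condition=lambda r: r["rax"] > 5, body=if_body)
print(taken, regs, sorted(cache))
```

The guess was wrong here, so the registers end up exactly as they started. The cache does not: address 0x1000 is still in it, and that leftover footprint is the kind of trace a side-channel attack measures.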

But What is x86 Really?

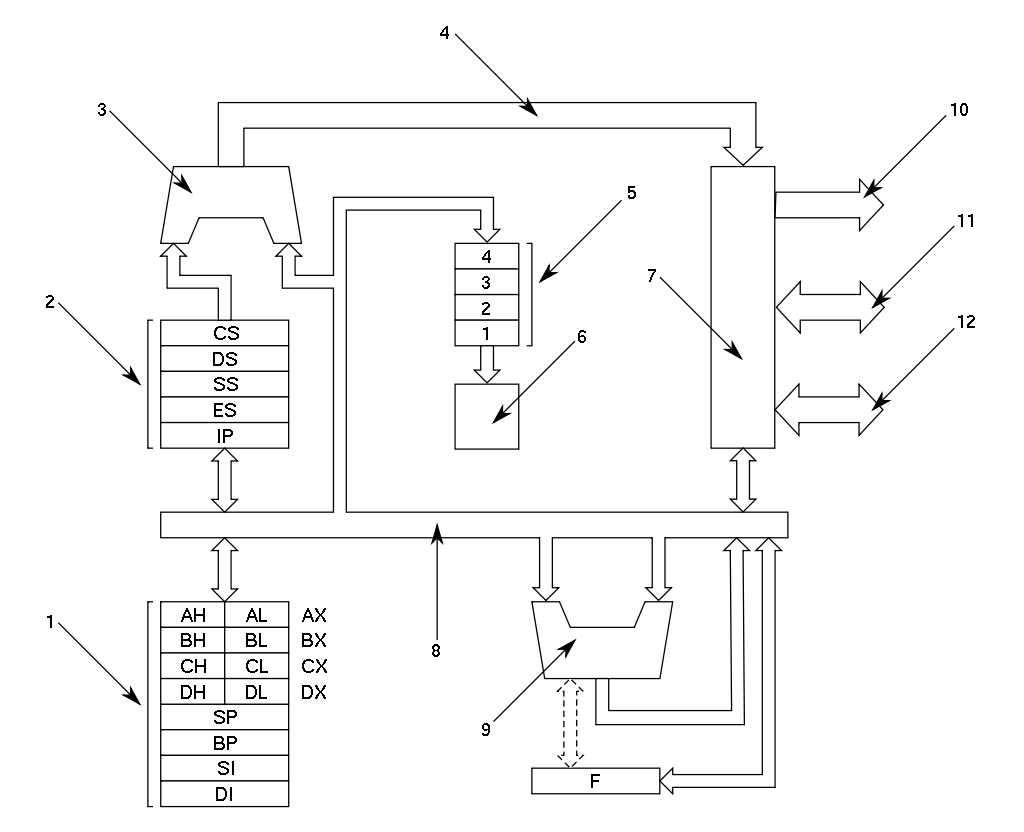

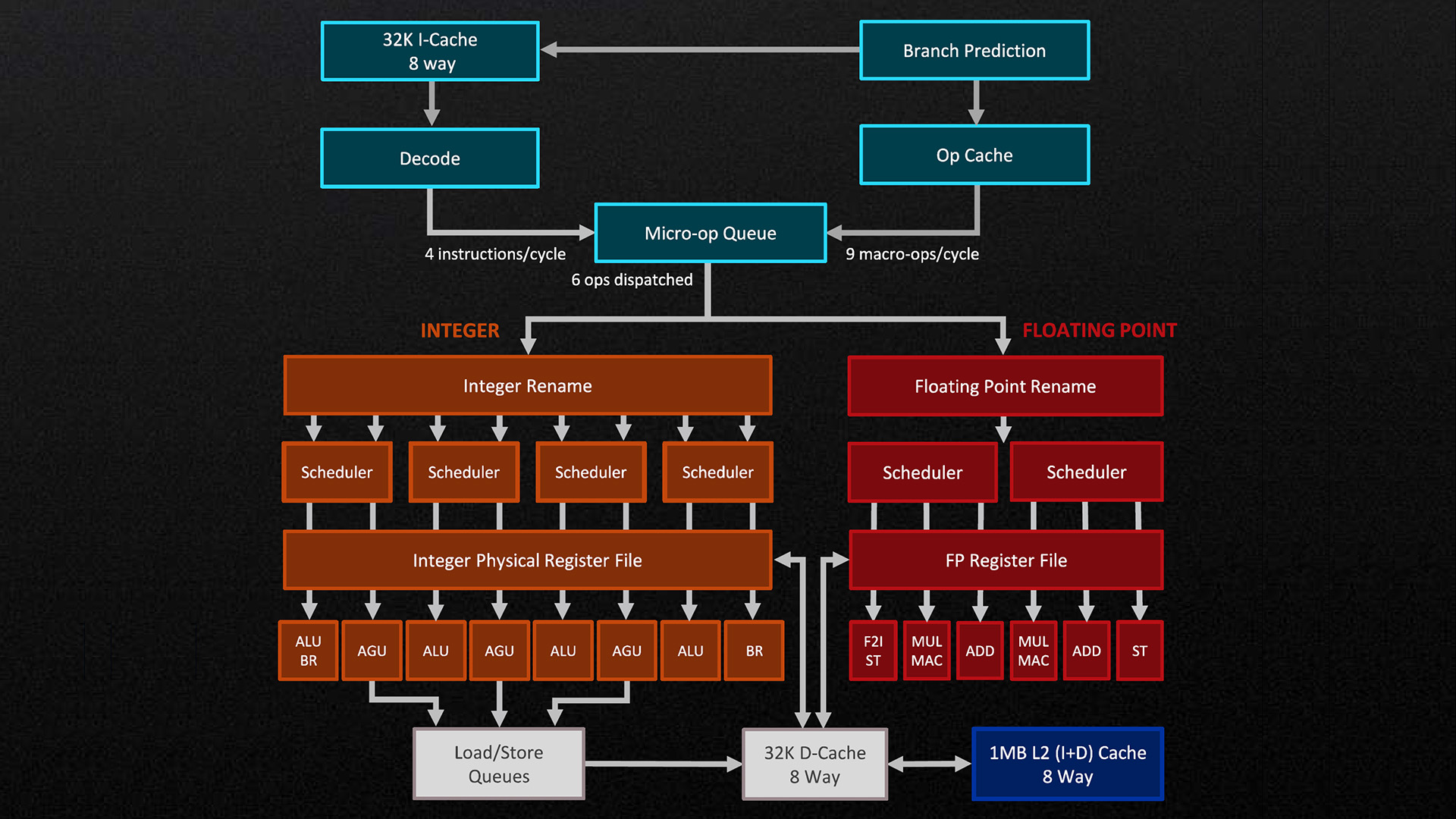

Here, you can see block diagrams of the microarchitectures of two seemingly completely unrelated CPUs: the original 8086 and AMD’s Zen 4. Don’t let the looks deceive you; the Zen 4 CPU still supports “real mode”, so it can still run 8086 programs.

The 8086 is a much simpler CPU. It takes multiple clock cycles to run an instruction: anywhere from 2 to over 80. One cycle is required per byte of instruction and one or more cycles for the calculations. There is also no concept of superscalar or out-of-order execution here; everything takes a predetermined amount of time and happens strictly in order.

By contrast, Zen 4 is a monster: not only does it have four ALUs, it has three AGUs as well. Some of you may have heard of the Arithmetic and Logic Unit before, but the Address Generation Unit, which computes the memory addresses used by loads and stores, is less well known. All of this means that Zen 4 can, under perfect conditions, perform four ALU operations and three load/store operations per clock cycle. This makes Zen 4 a factor of two to ten faster than the 8086 at the same clock speed. If you factor in clock speed too, it becomes closer to roughly five to seven orders of magnitude. Despite that, the Zen 4 CPU still supports the original 8086 instructions.

Where the Problem Lies

The 8086 instruction set is not the only instruction set that modern x86 supports. There are dozens of extensions, from the well-known floating-point, SSE, AVX and other vector extensions to more obscure features such as PAE (which lets 32-bit x86 use wider physical addresses) and vGIF (for interrupts in virtualization). According to [Stefan Heule], there may be as many as 3600 instructions. That’s more than twenty times as many instructions as RISC-V has, even if you count all of the most common RISC-V extensions.

These instructions come at a cost. Take, for example, one of x86’s oddball instructions: mpsadbw. This instruction is six to seven bytes long and measures how much a four-byte sequence differs from each of eight positions within an eleven-byte sequence. Doing so takes at least 19 additions, but the CPU runs it in just two clock cycles. The first problem is the length. The combination of the six-to-seven byte instruction length and no alignment requirements makes fetching and decoding instructions a lot more expensive. This instruction also comes in a variant that accesses memory, which complicates decoding further. Finally, this instruction is still supported by modern CPUs, despite how rarely it is used. All of that uses up valuable space in cutting-edge x86 CPUs.
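For a feel of how much work hides behind that single opcode, here is a rough Python emulation of the 128-bit form, based on my reading of the instruction's documented behavior. The function and parameter names are my own, and the sketch ignores details such as how the eight results are packed back into a register.

```python
def mpsadbw(window_src, block_src, imm8):
    """Sketch of the 128-bit mpsadbw: eight sums of absolute differences.

    window_src: 16 bytes, of which an 11-byte window is read
    block_src:  16 bytes, of which one 4-byte block is read
    imm8:       bits 1:0 pick the block, bit 2 picks the window start
    """
    assert len(window_src) == 16 and len(block_src) == 16
    block_off = (imm8 & 0b11) * 4          # 4-byte block at offset 0, 4, 8 or 12
    window_off = ((imm8 >> 2) & 1) * 4     # 11-byte window at offset 0 or 4
    block = block_src[block_off:block_off + 4]
    results = []
    for i in range(8):                     # slide the block across 8 positions
        sad = sum(abs(window_src[window_off + i + j] - block[j])
                  for j in range(4))
        results.append(sad)                # each result fits in 16 bits
    return results


window = bytes(range(16))                  # 0, 1, 2, ..., 15
block = bytes([0, 1, 2, 3] + [0] * 12)
print(mpsadbw(window, block, 0b000))
print(mpsadbw(window, block, 0b100))
```

With these inputs the block matches the window exactly at position zero and drifts further off with every step, so the sums grow by four per position. The hardware does all eight comparisons as one instruction.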

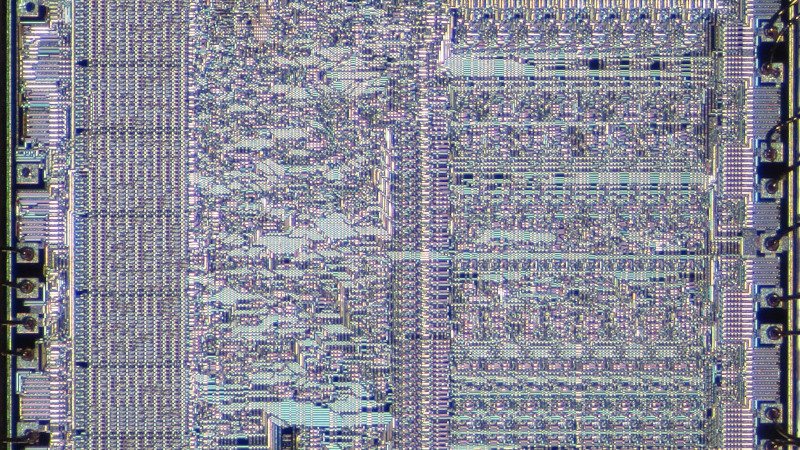

In RISC architectures like MIPS, ARM, or RISC-V, instructions are typically implemented entirely in hardware; there are dedicated logic gates for running each instruction. Doing the same for every one of x86’s thousands of instructions would be an expensive joke. That’s where microcode comes in, something x86 has leaned on ever since the original 8086. You see, modern x86 CPUs aren’t what they seem; they’re in effect RISC CPUs posing as CISC CPUs, implementing the x86 instructions by translating them into simpler internal operations using a mix of hardware and microcode. One upside is that x86 microcode can be updated in the field, though only to change the way existing instructions work; such updates have helped mitigate vulnerabilities like Spectre and Meltdown.

Fortunately, It Can Get Worse

Let’s get back to those pesky keywords: speculative and out-of-order. Modern x86 runs instructions out-of-order to, for example, do some math while waiting for a memory access. Let’s assume for a moment that’s all there is to it. When faced with a divide that uses the value of rax followed by a multiply that overwrites rax, the multiply must logically wait until the divide has read rax, even though the result of the multiply does not depend on that of the divide; otherwise the divide could see the wrong value. That’s where register renaming comes in. With register renaming, both can run simultaneously because the rax that the divide sees is a different physical register than the rax that the multiply writes to.
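A minimal sketch of the renaming step might look like the following. It uses a made-up three-operand form of divide and multiply (the real x86 instructions use implicit registers), and the `rename` helper and the `p0`, `p1`, ... physical register names are purely illustrative.

```python
def rename(instrs, arch_regs):
    """Map architectural registers to fresh physical registers per write."""
    # Start with one physical register per architectural register.
    mapping = {reg: f"p{i}" for i, reg in enumerate(arch_regs)}
    next_phys = len(arch_regs)
    renamed = []
    for op, dst, srcs in instrs:
        # Sources read whatever physical register currently holds the value.
        phys_srcs = [mapping[s] for s in srcs]
        # Every write gets a brand-new physical register.
        mapping[dst] = f"p{next_phys}"
        next_phys += 1
        renamed.append((op, mapping[dst], phys_srcs))
    return renamed


# Hypothetical program: the divide reads rax, the multiply overwrites rax.
program = [
    ("div", "rbx", ["rax", "rcx"]),
    ("mul", "rax", ["rdx", "rsi"]),
]
renamed = rename(program, ["rax", "rbx", "rcx", "rdx", "rsi"])
for instr in renamed:
    print(instr)
```

After renaming, the divide reads `p0` while the multiply writes `p6`. The two no longer touch the same storage, so nothing forces the multiply to wait.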

This acceleration leaves us with two problems: determining which instructions depend on which others, and scheduling them optimally to run the code as fast as possible. Both problems depend on the particular instructions being run, and the logic that solves them gets more complicated the more instructions exist. The x86 instruction encoding format is so complex that an entire wiki page is needed just to serve as a TL;DR. Meanwhile, RISC-V needs only two tables (1) (2) to describe the encoding of all standard instructions. Needless to say, this puts x86 at a disadvantage in terms of decoding logic complexity.
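To show how little work a fixed-width encoding needs, here is a short sketch that splits a 32-bit RISC-V R-type instruction word into its fields with nothing but shifts and masks. The `decode_r_type` helper is my own naming; the field positions follow the base RISC-V R-type layout.

```python
def decode_r_type(word):
    """Split a 32-bit RISC-V R-type instruction word into its fields."""
    return {
        "opcode": word & 0x7F,           # bits 6:0
        "rd":     (word >> 7) & 0x1F,    # bits 11:7, destination register
        "funct3": (word >> 12) & 0x7,    # bits 14:12
        "rs1":    (word >> 15) & 0x1F,   # bits 19:15, first source register
        "rs2":    (word >> 20) & 0x1F,   # bits 24:20, second source register
        "funct7": (word >> 25) & 0x7F,   # bits 31:25
    }


fields = decode_r_type(0x002081B3)       # encoding of: add x3, x1, x2
print(fields)
```

Every field sits at a fixed bit position, so hardware can extract all of them in parallel without first working out how long the instruction is. An x86 decoder has no such luxury, since prefixes, opcode length, and operand bytes all shift where everything else lives.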

Change is Coming

Over time, other instruction sets like ARM have been eating away at x86’s market share. ARM is completely dominant in smartphones and single-board computers, it is growing in the server market, and it has even been the CPU architecture of Apple’s new Macs since 2020. RISC-V is also progressively getting more popular, becoming the most widely adopted royalty-free instruction set to date. RISC-V is currently mostly used in microcontrollers but is slowly growing towards higher-power platforms like single-board computers and even desktop computers. RISC-V, being as free as it is, is also becoming the architecture of choice for today’s computer science classes, and this will only make it more popular over time. Why? Because of its simplicity.

Conclusion

The x86 architecture has been around for a long time: a 46-year long time. In this time, it’s grown from the simple days of early microprocessors to the incredibly complex monolith of computing we have today.

This evolution has taken its toll, though, by tying one of the biggest CPU platforms to the roots of a relatively ancient instruction set, which doesn’t even benefit from small code size like it did 46 years ago. The complexities of superscalar, speculative, and out-of-order execution are heavy burdens on an instruction set that is already very complex by definition, and the RISC-shaped grim reapers named ARM and RISC-V are slowly catching up.

Don’t get me wrong: I don’t hate x86 and I’m not saying it has to die today. But one thing is clear: The days of x86 are numbered.
