CEO Jensen Huang says new chips will power robots and billions of AI agents.

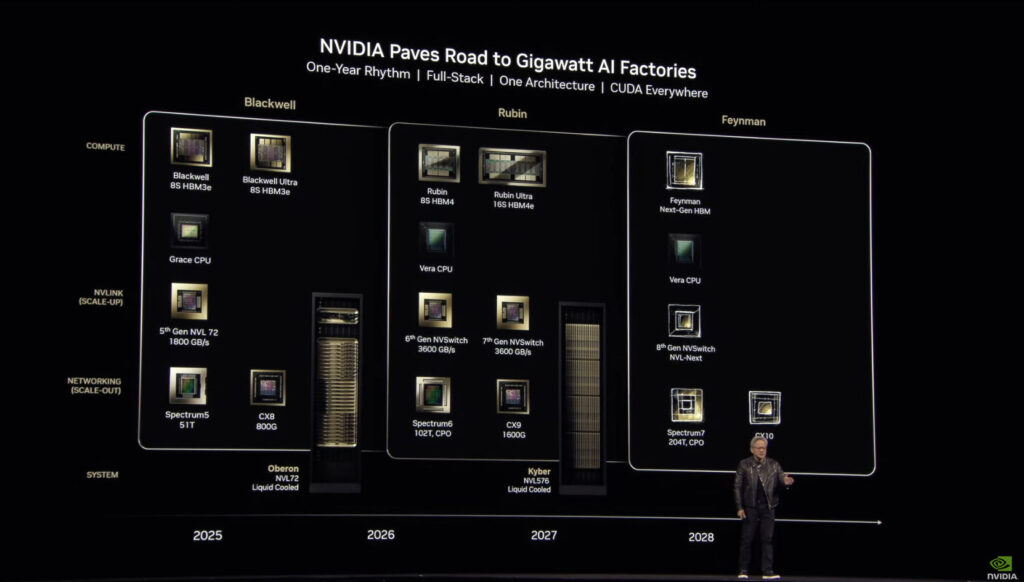

On Tuesday at Nvidia's GTC 2025 conference in San Jose, California, CEO Jensen Huang revealed several new AI-accelerating GPUs the company plans to release over the coming months and years. He also revealed more specifications about previously announced chips.

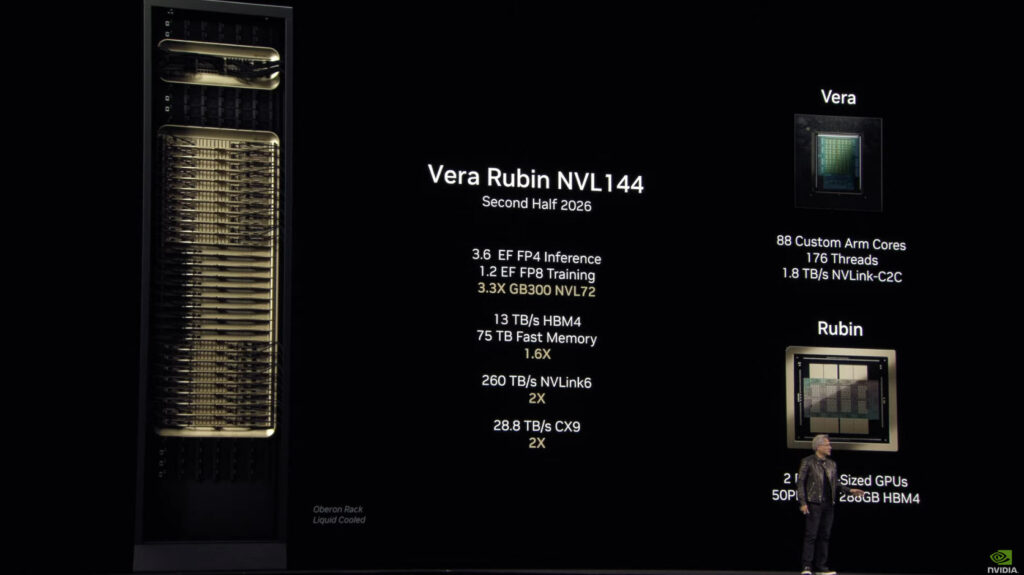

The centerpiece announcement was Vera Rubin, first teased at Computex 2024 and now scheduled for release in the second half of 2026. This GPU, named after a famous astronomer, will feature tens of terabytes of memory and comes with a custom Nvidia-designed CPU called Vera.

According to Nvidia, Vera Rubin will deliver significant performance improvements over its predecessor, Grace Blackwell, particularly for AI training and inference.

Vera Rubin features two GPUs together on one die that deliver 50 petaflops of FP4 inference performance per chip. When configured in a full NVL144 rack, the system delivers 3.6 exaflops of FP4 inference compute—3.3 times more than Blackwell Ultra's 1.1 exaflops in a similar rack configuration.

The Vera CPU features 88 custom ARM cores with 176 threads connected to Rubin GPUs via a high-speed 1.8 TB/s NVLink interface.

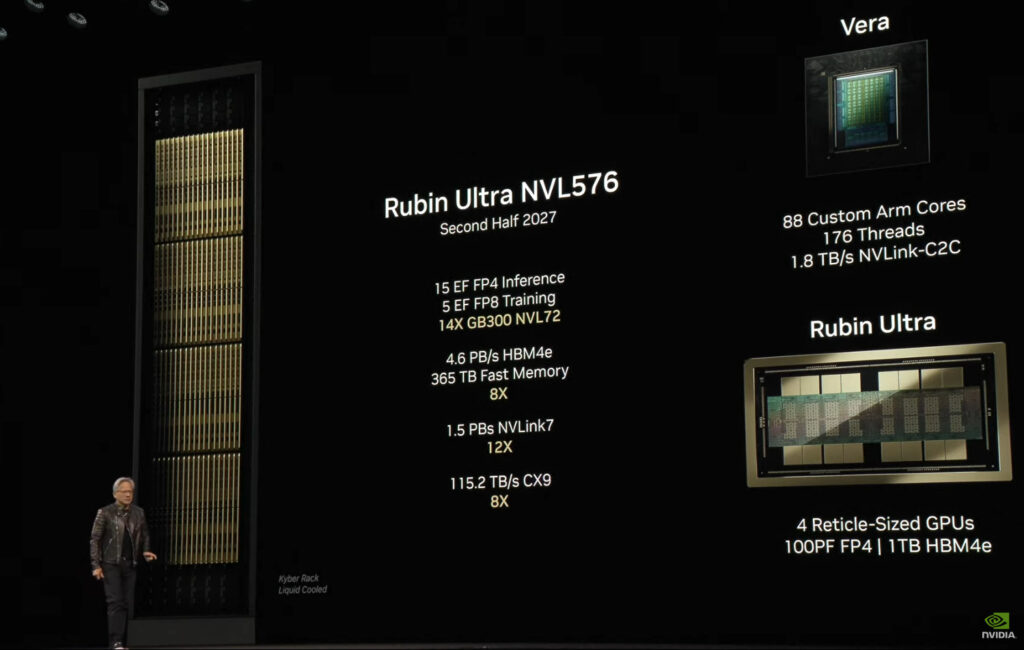

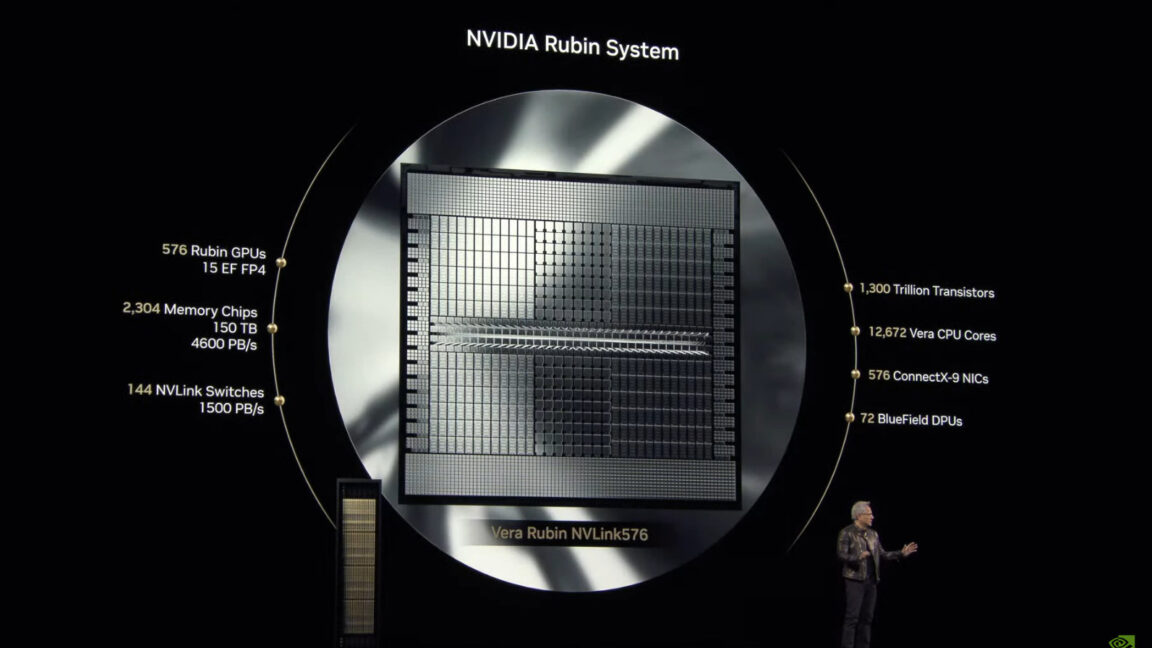

Huang also announced Rubin Ultra, which will follow in the second half of 2027. Rubin Ultra will use the NVL576 rack configuration and feature individual GPUs with four reticle-sized dies, delivering 100 petaflops of FP4 precision (a 4-bit floating-point format used for representing and processing numbers within AI models) per chip.

At the rack level, Rubin Ultra will provide 15 exaflops of FP4 inference compute and 5 exaflops of FP8 training performance—about four times more powerful than the Rubin NVL144 configuration. Each Rubin Ultra GPU will include 1TB of HBM4e memory, with the complete rack containing 365TB of fast memory.

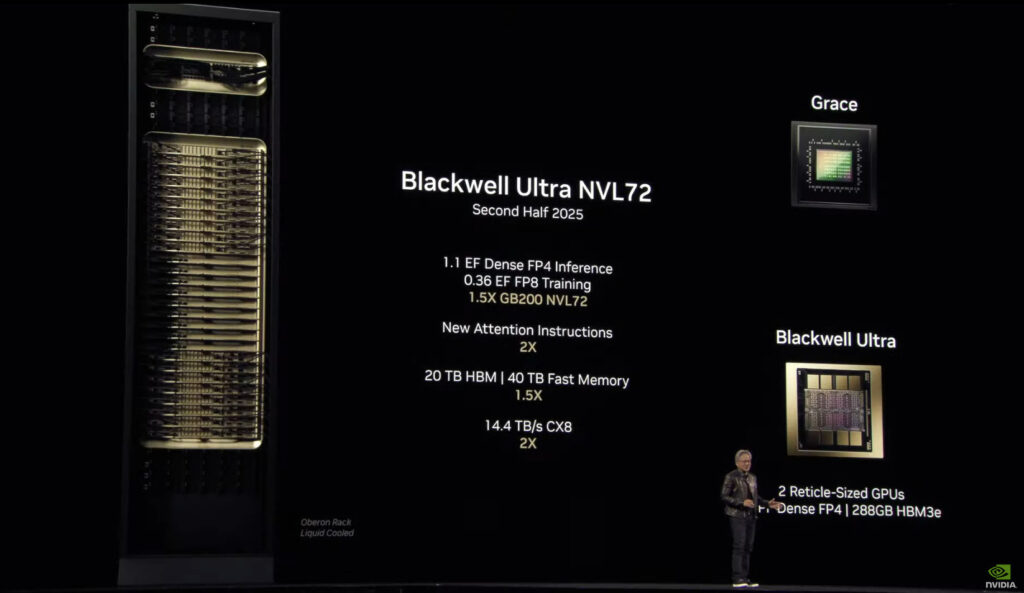

For the near future, Nvidia will launch Blackwell Ultra B300 in the second half of 2025. This chip features two GPUs delivering 15 petaflops of dense FP4 compute performance per chip. When configured in a full NVL72 rack, Blackwell Ultra will provide 1.1 exaflops of dense FP4 inference compute—1.5 times more than the current Blackwell B200 configuration. Each B300 GPU has 288GB of HBM3e memory compared to Blackwell's 192GB.

Huang briefly mentioned a next-generation GPU architecture called "Feynman," named after American theoretical physicist Richard Feynman. He provided few additional details about Feynman's design or capabilities, only that it would use a "Vera" CPU instead of the expected "Richard" based on the naming pattern and that it would arrive sometime in 2028.

During the keynote, Huang also laid out an optimistic roadmap for the future of AI—with its success vitally interlinked with the continued success of his company—where he called data centers "AI factories" that produce tokens (the units of data that AI models currently process) instead of physical objects. He shared his vision for the future of "physical AI" that will one day power humanoid robots to perform human-like labor. Nvidia currently provides software platforms that help robot-controlling AI models train in virtual worlds.

In the meantime, Huang speculated that Nvidia chips will soon power "10 billion digital agents" that perform helpful work for humans, and he mentioned that by the end of this year, 100 percent of Nvidia engineers will be assisted by AI models.

Hope you enjoyed this news post.

Thank you for appreciating my time and effort posting news every day for many years.

News posts... 2023: 5,800+ | 2024: 5,700+ | 2025 (till end of February): 874

RIP Matrix | Farewell my friend ![]()

3175x175(CURRENT).thumb.jpg.b05acc060982b36f5891ba728e6d953c.jpg)

Recommended Comments

There are no comments to display.

Join the conversation

You can post now and register later. If you have an account, sign in now to post with your account.

Note: Your post will require moderator approval before it will be visible.