Sayan Sen contributed to this review, and also provided the benchmark graphics.

When Intel reached out to Neowin asking if we were interested in testing its 14th Gen desktop processor family, I jumped at the chance, partly because I had recently upgraded my main PC to an AMD chip after decades of loyalty to Intel. I figured I now had a system I could pit the new Intel chips against.

Firstly, I should mention that I am fully aware the i9-13900KS already exists with a Max Turbo of 6.0 GHz, but that is not a mainstream processor. Our title reflects what Intel has now brought to the masses, not just to people with enough money to skip waiting for the proper mainstream offering.

With that out of the way, now the disclosures: Intel let me keep the i5-14600K and i9-14900K samples it provided, and I (mostly unsuccessfully) reached out to several companies to try to secure hardware for this review. Only Sabrent stepped up, providing a 4TB Rocket 4 Plus-G NVMe SSD for our test build. Because of the delivery timing (it arrived the day this review was published), I ended up having to order the 1TB version myself anyway.

If you want 17 pages of lab-conditioned benchmarks, you're better off going somewhere like Tom's Hardware. I am not going to pretend I am an authority on CPUs; this is my first review of such hardware. However, I should mention that I have been tinkering with self-builds since the 90s, and have built my own systems using Cyrix, AMD, and Intel CPUs. Rather than try to imitate Tom's Hardware, I've decided to compare the new chips against another flagship processor, AMD's Ryzen 9 7950X3D.

For our benchmarks, hwinfo.com provided a commercial license of HWiNFO, and UL Solutions provided us with Professional (commercial use) licenses for 3DMark, PCMark 10, and Procyon.

Introduction

The 14th generation of Intel's Core desktop processors, officially released yesterday, is a refresh of Raptor Lake-S and really needs no introduction. The chips use the same LGA1700 socket as the 12th and 13th generations and support both DDR4 and DDR5 memory on Z690 or Z790 chipset boards. An x90-chipset board is recommended: as we'll see later, Intel's chips are quite power-hungry, so a board with robust power delivery and VRM heatsinks helps.

They even ship with the same iGPU (Intel UHD Graphics 770) as the 13th generation, offering 32 EUs. The full specs of the 14th Gen Core i9 and i5 are listed below.

| Raptor Lake-S Refresh | i9-14900K | i5-14600K |
|---|---|---|
| Processor Cores (P+E) | 24 (8+16) | 14 (6+8) |
| Processor Threads | 32 | 20 |
| Intel Smart Cache (L3) | 36 MB | 24 MB |
| Total L2 Cache | 32 MB | 20 MB |
| Thermal Velocity Boost | 6.0 GHz | N/A |
| Max Turbo Frequency | Up to 5.8 GHz | Up to 5.3 GHz |
| P-Core Max Turbo Frequency | Up to 5.6 GHz | Up to 5.3 GHz |
| E-Core Max Turbo Frequency | Up to 4.4 GHz | Up to 4.0 GHz |
| P-Core Base Frequency | 3.2 GHz | 3.5 GHz |
| E-Core Base Frequency | 2.4 GHz | 2.6 GHz |
| Processor Graphics | Intel UHD Graphics 770 | Intel UHD Graphics 770 |
| Total PCIe Lanes | 20 | 20 |
| Max Memory Speed | DDR5-5600 / DDR4-3200 MT/s | DDR5-5600 / DDR4-3200 MT/s |
| Memory Capacity | 192 GB | 192 GB |
| Processor Base Power | 125 W | 125 W |
| Max Turbo Power | 253 W | 181 W |
| Price (MSRP) | $589 | $319 |

Building the beast

Test system

- Intel Core i9-14900K or Core i5-14600K

- ASUS ROG STRIX Z790-H GAMING WIFI (BIOS v1402)

- ASUS ROG STRIX 850W Gold

- Thermalright Peerless Assassin 120 SE ARGB CPU Air Cooler

- Cooler Master Thermal Pad 0.5mm (used with i5-14600K)

- Thermal Grizzly Carbonaut 0.2mm (used with i9-14900K)

- 2x 16GB Corsair Dominator Platinum RGB DDR5 @ 6000MT/s in XMP

- Sabrent 1TB Rocket 4 Plus-G (PCIe 4.0)

- MSI GeForce RTX 4070 Ti Ventus 3X

- OS: Windows 11 Professional Build 22621.2468 (Moment 4)

- dGPU driver: Nvidia driver 538.58

- iGPU driver: 31.0.15.3758

Reference System

- AMD Ryzen 9 7950X3D

- ASRock X670E Steel Legend (BIOS v1.28)

- be quiet! Pure Power 12 M 850W

- NZXT Kraken Elite 360 RGB

- 2x 32GB Kingston Fury Beast RGB DDR5 @ 6000MT/s in EXPO

- WD Black SN850X 1TB

- MSI GeForce RTX 4070 Ti Ventus 3X

- OS: Windows 11 Professional Build 22621.2468 (Moment 4)

- dGPU driver: Nvidia driver 538.58

- iGPU driver: 22.40.70

As you can see above, the same MSI GeForce RTX 4070 Ti Ventus 3X was shared between both systems (I do not have two of these cards!), which let us see how each CPU paired with the same dGPU in some of the benchmarks. Game Mode was on in Windows 11. To try to be completely fair™, and also to make swapping the GPU easier, the top and glass side panels of the Lian Li O11 Dynamic EVO case were removed from the AMD system, essentially making it an open-plan system too.

My test hardware was open-plan, and as listed in the hardware specs above, I ensured that I had the latest stable BIOS, firmware, and drivers from the respective hardware manufacturers. Resizable BAR was enabled (the default BIOS setting), as was Multi-Core Enhancement (MCE), which was set to Auto by default and reported as "Enabled". Similarly, Precision Boost Overdrive (PBO) was set to "Advanced" on our AMD system, which is also the default setting.

Essentially, we ran everything at default settings, since the average user won't dive into the BIOS straight away to tweak them.

As you may be able to see from the picture above, I was only able to fit one fan on the cooler. Ideally, the heatsink would have had a little more height: I needed another 1.7cm to properly clear the motherboard's I/O shield cover (pic). In hindsight, maybe I should have fitted the second fan anyway, despite how it looks and the fact that it would not fully cover that side of the heatsink.

I resisted using thermal paste, firstly because of the mess, and secondly because swapping CPUs multiple times a week is not part of my day job. In addition, after looking into it with Sayan's help, I discovered that the right thermal pads offer almost as good thermal conductivity for CPUs, without the mess or the risk of paste finding its way onto the socket pins and potentially harming them.

First, I ordered a Cooler Master Thermal Pad 0.5mm for the Core i5-14600K tests, rated at a thermal conductivity of 13.3 W/mK (watts per meter-kelvin). For a given thickness, the higher the W/mK value, the better the thermal coupling (heat transfer rate) between the CPU's integrated heat spreader (IHS) and the cooler. In short, the higher a thermal interface material's conductivity, the more efficient it is (source).

At 95mm x 45mm, the pad was large enough to be cut into three portions. On my first attempt, I tore a piece before I could apply it to the CPU. This stuff is tricky to handle: the Cooler Master Thermal Pad is a bit like a sheet of dried thermal paste or clay, so it rips and folds very easily, and once folded it is impossible to unfold because the material is so sticky.

Left: Cooler Master Thermal Pad (after use) | Right: Thermal Grizzly Carbonaut (before use)

We tried the Cooler Master Thermal Pad with the Core i9-14900K as well, but kept seeing the CPU throttle in tests, so we ordered a Thermal Grizzly Carbonaut pad instead. Rated at 62.5 W/mK, it is basically the "chef's kiss" of thermal conductivity as far as thermal pads are concerned.
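To put the two pads' ratings in perspective, here is a rough back-of-the-envelope sketch using Fourier's law for one-dimensional conduction: a pad's thermal resistance is its thickness divided by conductivity times contact area (R = t / (k × A)), and the temperature drop across it is power times resistance. The 30mm x 30mm contact area is a hypothetical assumption, and the model ignores contact resistance at the pad's surfaces, so treat the absolute numbers as illustrative only. The ratio between the two pads, however, does not depend on the assumed area.

```python
# Idealised 1-D conduction through a thermal interface pad (Fourier's law).
# Assumptions (hypothetical, for illustration only): uniform contact over the
# area below, no contact resistance at either face, steady-state heat flow.

CONTACT_AREA_M2 = 0.030 * 0.030  # hypothetical 30 mm x 30 mm contact patch
PACKAGE_POWER_W = 253.0          # i9-14900K Max Turbo Power from the spec table


def thermal_resistance(thickness_m: float, k_w_per_mk: float, area_m2: float) -> float:
    """Return R = t / (k * A) in kelvin per watt."""
    return thickness_m / (k_w_per_mk * area_m2)


def temperature_drop(power_w: float, resistance_k_per_w: float) -> float:
    """Temperature difference across the pad needed to move power_w watts."""
    return power_w * resistance_k_per_w


# (thickness in metres, rated conductivity in W/mK) as quoted in the review
pads = {
    "Cooler Master 0.5 mm": (0.5e-3, 13.3),
    "Carbonaut 0.2 mm": (0.2e-3, 62.5),
}

results = {}
for name, (thickness, k) in pads.items():
    r = thermal_resistance(thickness, k, CONTACT_AREA_M2)
    results[name] = (r, temperature_drop(PACKAGE_POWER_W, r))
    print(f"{name}: R = {r:.4f} K/W, dT at {PACKAGE_POWER_W:.0f} W = {results[name][1]:.1f} K")

# The area cancels out of this ratio, so it holds whatever contact area is assumed.
ratio = results["Cooler Master 0.5 mm"][0] / results["Carbonaut 0.2 mm"][0]
print(f"Resistance ratio: {ratio:.1f}x")
```

Under these idealised assumptions, the thinner, more conductive Carbonaut has roughly 11.7x lower thermal resistance than the 0.5mm Cooler Master pad, which helps explain why it suited the hotter i9 better. Real-world results also depend on mounting pressure and how well each pad contacts the IHS and cooler.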

When handling any of the hardware, I always wore an anti-static wrist strap. I even ordered a cable that let me power on, reset, and see the HDD and power LED status, so I didn't have to use a screwdriver on the front-panel pins.

Benchmarks

First up, we compared the integrated graphics processing units (iGPUs) of the two platforms. The i5 and i9 both use Intel UHD Graphics 770, which has 32 Execution Units (EUs), while Team Red's 7950X3D uses AMD Radeon graphics based on RDNA 2 with 2 Compute Units (CUs).

We began by playing the Jellyfish 400 Mbps HEVC (H.265) Main10 MKV file at 3840x2160 (scaled to 1440p) in VLC media player, monitoring maximum and average video decode usage in HWiNFO64. Intel clearly takes the crown as far as integrated media decoding is concerned.

We didn't stop there, and also tried two videos using other codecs: Big Buck Bunny (AV1) and LG Ink Art 4K60 (VP9), both played on YouTube, again monitoring maximum and average video decode usage in HWiNFO.

It's clear that it's a cakewalk for the Intel UHD Graphics 770, leaving AMD Radeon Graphics in the dust.

One noteworthy point is that Intel's iGPU sensors also reported a "video processing" metric during the YouTube videos, which AMD's sensors do not. So the Intel and AMD figures may not be directly comparable, as we are not sure how each chip divides up the work here. However, the Jellyfish 400Mbps demo makes it clear that Intel's media engine is the more capable one. The Intel iGPUs also dropped fewer frames while the Jellyfish demo was looping.

Finally, we tested the iGPUs with 3DMark to assess their gaming capabilities. Again, Intel is leaps and bounds ahead in the integrated graphics department: the UHD 770 is simply more capable than AMD's 2-CU RDNA 2 iGPU.

Up next, we moved to test scenarios with a discrete GPU (dGPU) on board. The following benchmarks were done with an MSI GeForce RTX 4070 Ti Ventus 3X in the system.

In 3DMark Time Spy, the AMD 7950X3D loses to both Raptor Lake-S Refresh processors in the CPU score. Do keep in mind that Time Spy's physics test seems to favor clock speed over cache, so both the 14900K and the 14600K easily outpace the 7950X3D. The X3D also does not scale beyond 16 threads here, as you will see later in the CPU Profile test.

It's much the same story with Fire Strike Extreme, which tests DirectX 11 gaming performance.

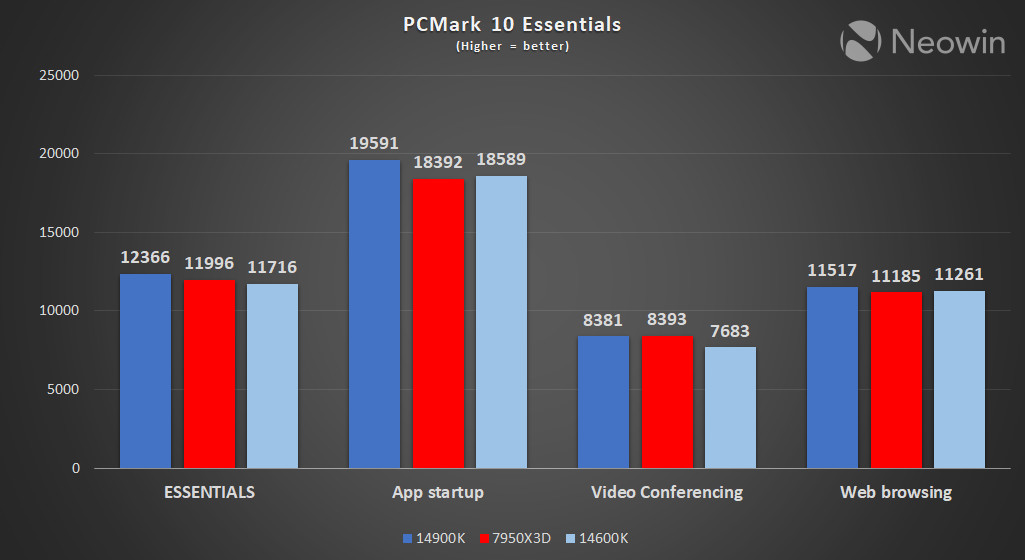

In PCMark 10, the Core i9-14900K posts some impressive scores, but the 7950X3D clearly holds its own against Intel's newer generation, beating the i9 by a considerable margin in the Photo Editing test while losing to both Intel chips in the Rendering and Visualisation test.

If you are wondering, the first data point on each of the above PCMark graphs is the overall combined score in that test.

The new Cinebench 2024 holds no surprises either, with scores around where you'd expect to find them. Rendering tests like this leverage all available threads, which is why both the i9 and the Ryzen 9 are significantly faster than the i5.

Next, we measured the maximum VRM (voltage regulator module) temperature and the power consumption of each chip during a complete Cinebench 2024 run. This highlights the efficiency of the 7950X3D, with Intel's flagship needing 108.5W (over 75%) more to achieve its score. Even the i5 consumed more power than the AMD chip while being much slower. Ambient room temperature was around 20C and never above it.
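The two figures quoted above also imply a rough ceiling on each chip's draw. If the i9 needed 108.5W more, and that was over 75% more than the 7950X3D, then the AMD chip must have drawn less than 108.5 / 0.75 W. The short sketch below only does that arithmetic; the result is an inferred upper bound, not a measured value.

```python
def baseline_upper_bound(extra_w: float, min_fraction_more: float) -> float:
    """If chip B draws extra_w watts more than chip A, and that extra is MORE
    than min_fraction_more of A's draw, then A's draw is below this bound."""
    return extra_w / min_fraction_more


extra_w = 108.5   # i9-14900K minus 7950X3D in Cinebench 2024, from the text
fraction = 0.75   # "over 75%" more, from the text

amd_max = baseline_upper_bound(extra_w, fraction)
intel_max = amd_max + extra_w  # the i9 drew the AMD figure plus the extra
print(f"7950X3D: below {amd_max:.1f} W; i9-14900K: below {intel_max:.1f} W")
```

That puts the 7950X3D below roughly 144.7W and the i9-14900K below roughly 253.2W, which lines up with the i9's 253W Max Turbo Power in the spec table.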

In UL's 3DMark CPU Profile test, the Core i9-14900K again shows its superiority when scaling beyond 16 threads.

Our best guess is that, because of how AMD's chipset driver handles the CCDs in gaming-like workloads such as this test, the 7950X3D does not scale at all beyond 16 threads. This is quite the opposite of what we saw in Cinebench (above), where the 7950X3D scales very nicely and almost keeps up with the 14900K.

In UL Procyon, we ran the AI Inference Benchmark, which uses the Windows Machine Learning (WinML) API, to complement PCMark 10's assessment of more traditional workloads above. The default precision is FP32 (float32), and we kept that setting.

Although we completed the test on the AMD 7950X3D, and twice on the i5-14600K, the i9 repeatedly failed at the DeepLab V3 test, and we could not get past it despite many attempts. I reached out to my contact at UL with our findings, which included thermal throttling at the end of each test that may not have been recorded properly (HWiNFO showed different data), and we will update this review once we know more.

Last but not least, we benchmarked 7-Zip compression and decompression, measured in GIPS (billions of instructions per second). Here, AMD holds the crown in both scenarios.

Conclusion

This has certainly been a journey for me, and the one thing I have taken away from this past week is that Intel runs hot! You need a really good cooling solution with the i9. When idle, the i9 showed lows of 28C and averages of 33C, in the same range as the i5's idle temperatures and even lower than the 39C of my liquid-cooled 7950X3D.

Then there's the power use. As Europeans, we've had to get used to turning off lights in empty rooms and watching our power use, thanks to rising energy bills. When building a new high-end system, everyone on this side of the Atlantic will be asking questions like "Is it energy efficient?" This isn't. One of the major factors in my decision to switch to AMD was its energy efficiency: my AMD build costs half as much to power as my older 9th-gen Intel build.
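For readers weighing running costs, the extra 108.5W the i9 drew in our Cinebench 2024 run can be turned into a yearly figure with simple arithmetic: energy (kWh) = watts × hours × days / 1000, and cost = energy × tariff. The four hours a day at full load and the €0.30/kWh tariff below are hypothetical assumptions, not measurements; substitute your own usage and electricity price.

```python
def annual_energy_cost(extra_watts: float, hours_per_day: float,
                       price_per_kwh: float, days: int = 365) -> tuple[float, float]:
    """Return (extra kWh per year, extra cost per year) for a constant extra draw."""
    kwh = extra_watts * hours_per_day * days / 1000.0  # watt-hours -> kilowatt-hours
    return kwh, kwh * price_per_kwh


EXTRA_W = 108.5        # i9-14900K vs 7950X3D delta in Cinebench 2024, from the review
HOURS_PER_DAY = 4.0    # hypothetical: time spent at full load each day
PRICE_PER_KWH = 0.30   # hypothetical tariff in EUR per kWh

kwh, cost = annual_energy_cost(EXTRA_W, HOURS_PER_DAY, PRICE_PER_KWH)
print(f"Extra energy: {kwh:.1f} kWh/year, extra cost: EUR {cost:.2f}/year")
```

With those assumptions, the difference works out to about 158 kWh and roughly €47.50 a year. This simple model ignores idle draw and the fact that the two chips may finish the same job at different speeds.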

The 14th Gen Raptor Lake-S Refresh chips are beasts: Intel clearly took on AMD's flagship in the speed department and won. Intel loyalists will probably tell you it's better in every way, pointing to the benchmarks as proof. If you can cool it and afford it, the i9 is a new gaming king of a processor.

However, let's not forget the i5. It proved in the benchmarks that it can hold its own against AMD's flagship in a number of tests, and paired with a decent card like the RTX 4070 Ti, it makes for a more than adequate gaming system at considerably less cost than an i9 and RTX 4090 build. I've never been in a position to afford Nvidia's top card, but the i5 tests also show that, paired with an RTX 4070 Ti, it can run (almost) everything on Ultra.

As an Amazon Associate when you purchase through links on our site, we earn from qualifying purchases.

When you purchase through links on our site, we may earn an affiliate commission.
