On Monday, Apple debuted "Apple Intelligence," a new suite of free AI-powered features for iOS 18, iPadOS 18, and macOS Sequoia that includes creating email summaries, generating images and emoji, and allowing Siri to take actions on your behalf. These features are achieved through a combination of on-device and cloud processing, with a strong emphasis on privacy. Apple says that Apple Intelligence features will be widely available later this year, with a beta test for developers arriving this summer.

The announcements came during a livestreamed WWDC keynote and a simultaneous event attended by the press on Apple's campus in Cupertino, California. In an introduction, Apple CEO Tim Cook said the company has been using machine learning for years, but the introduction of large language models (LLMs) presents new opportunities to elevate the capabilities of Apple products. He emphasized the need for both personalization and privacy in Apple's approach.

At last year's WWDC, Apple avoided using the term "AI" completely, instead preferring terms like "machine learning" as Apple's way of avoiding buzzy hype while integrating applications of AI into apps in useful ways. This year, Apple figured out a new way to largely avoid the abbreviation "AI" by coining "Apple Intelligence," a catchall branding term that refers to a broad group of machine learning, LLM, and image generation technologies. By our count, the term "AI" was used sparingly in the keynote—most notably near the end of the presentation when Apple executive Craig Federighi said, "It's AI for the rest of us."

The Apple Intelligence umbrella includes a range of features that require an iPhone 15 Pro, iPhone 15 Pro Max, iPad with M1 or later, or Mac with M1 or later. Devices also must have Siri enabled and set to US English. The features include notification prioritization to minimize distractions, writing tools that can summarize text, change tone, or suggest edits, and the ability to generate personalized images for contacts. The system, through Siri, can also carry out tasks on the user's behalf, such as retrieving files shared by a specific person or playing a podcast sent by a family member.

Siri’s new brain

Apple says that privacy is a key priority in the implementation of Apple Intelligence. For some AI features, on-device processing means that personal data is not transmitted or processed in data centers. For complex requests that can't run locally on a pocket-sized LLM, Apple has developed "Private Cloud Compute," which sends only relevant data to servers without retaining it. Apple claims this process is transparent and that experts can verify the server code to ensure privacy.

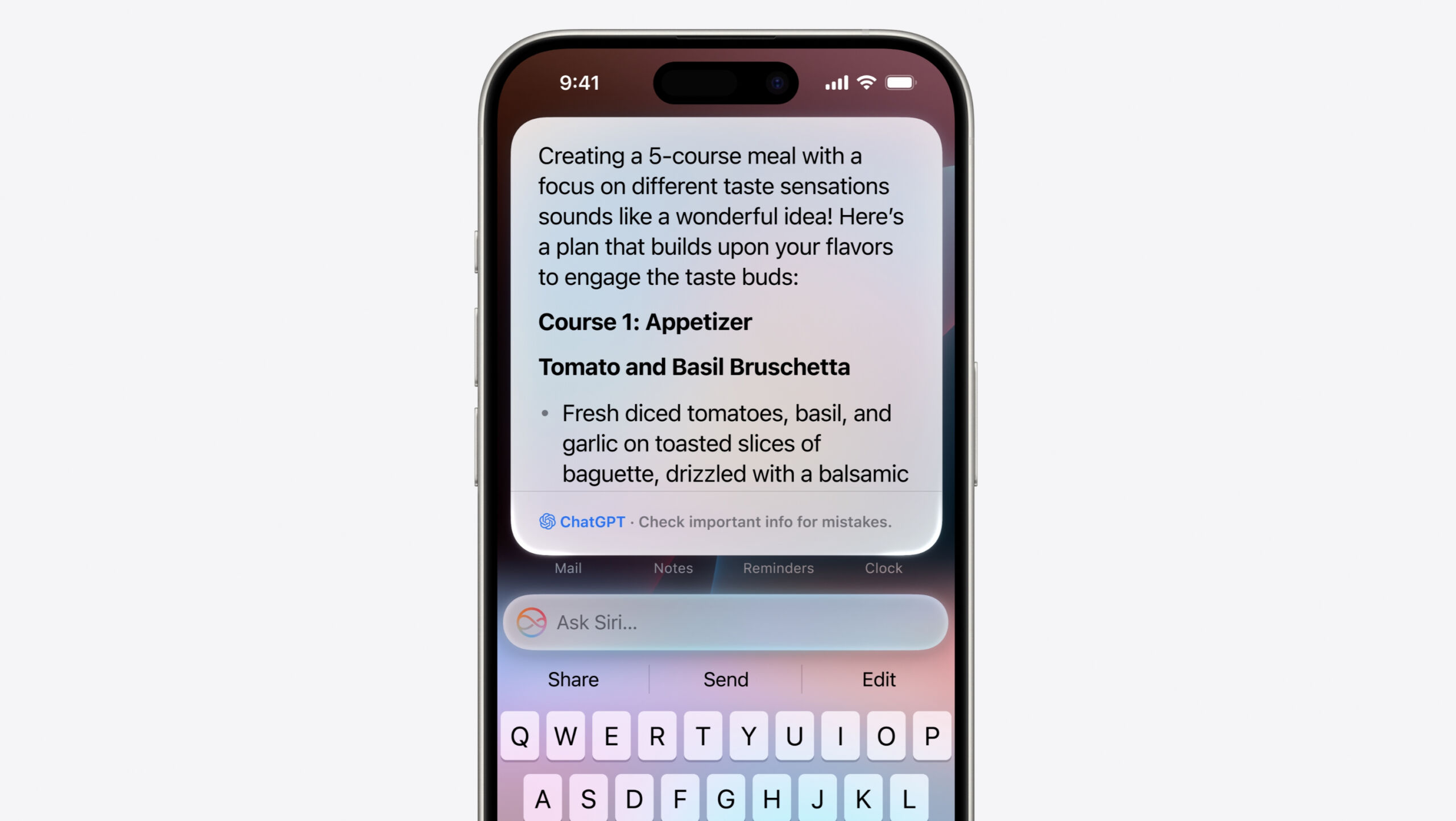

Under Apple Intelligence, Siri gets a big boost with a new logo and on-screen design, a new ability to understand more nuanced requests, and the ability to answer questions about the system state or take actions on the user's behalf.

Users can communicate with Siri using either voice or text input, and Siri can maintain context between requests.

The redesigned Siri also reportedly demonstrates onscreen awareness, allowing it to perform actions related to information displayed on the screen, such as adding an address from a Messages conversation to a contact card. Apple says the new Siri can execute hundreds of new actions across both Apple and third-party apps, such as finding book recommendations sent by a friend in Messages or Mail, or sending specific photos to a contact mentioned in a request.
