OpenAI has begun discussing the possibility of using a cryptographic watermark in ChatGPT text output that will make it easier to spot text created by the AI chatbot. The idea is to make sure that the tool isn’t misused, for example to write papers for school or college.

A cryptographic watermark is a type of digital watermark that is embedded in a digital file or signal using cryptographic techniques. Digital watermarks are used to identify the ownership or origin of a digital file or signal, and they are often used to protect against unauthorized use or distribution of digital content. Watermarks of this kind are common in photos and videos, but OpenAI is talking about developing a cryptographic watermark that is integrated into the text that ChatGPT outputs.

If you’re wondering how this would work without affecting the coherence of the text output, you are asking the right question. The reality is that text is so open-ended that it is very possible to include an unnoticeable cryptographic signal without affecting the way the text reads.

In truth, AI-generated content follows a predictable pattern in the way it uses words, which means that it is fairly easy to recognize if you know what to look for. OpenAI, however, looks set to build in a deliberate element of this predictability in an imperceptible way. As discussed in Search Engine Journal's coverage of OpenAI’s Scott Aaronson, who has been tasked with working on AI safety and alignment at the company, this process is called pseudorandom distribution. Pseudorandomness is when a series of words or numbers appears random but is actually generated by a deterministic process. This allows AI-generated content to be marked without disrupting the appearance of the text. Aaronson has written about the work he is doing on this for OpenAI on his own blog, saying:

“My main project so far has been a tool for statistically watermarking the outputs of a text model like GPT.

Basically, whenever GPT generates some long text, we want there to be an otherwise unnoticeable secret signal in its choices of words, which you can use to prove later that, yes, this came from GPT.”
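To make the idea of a "secret signal in its choices of words" more concrete, here is a minimal toy sketch in Python. It is not OpenAI's implementation. It assumes a made-up vocabulary, a hypothetical secret key (`SECRET_KEY`), and a "model" that simply offers four equally plausible candidate words at each step. The hypothetical helpers `score`, `generate`, and `detect` show the general principle: a keyed pseudorandom function quietly biases which candidate is picked, and anyone holding the key can later measure that bias.

```python
import hashlib
import hmac
import random

SECRET_KEY = b"demo-key"  # hypothetical key; a real one would be kept private


def score(prev_word, word, secret=SECRET_KEY):
    """Keyed pseudorandom value in [0, 1) for a (previous word, word) pair."""
    message = f"{prev_word}|{word}".encode()
    digest = hmac.new(secret, message, hashlib.sha256).digest()
    return int.from_bytes(digest[:8], "big") / 2**64


def generate(candidates_per_step, secret=SECRET_KEY):
    """At each step, pick the candidate word with the highest keyed score."""
    words = ["<start>"]
    for candidates in candidates_per_step:
        best = max(candidates, key=lambda w: score(words[-1], w, secret))
        words.append(best)
    return words[1:]


def detect(words, secret=SECRET_KEY):
    """Average keyed score of a text; unmarked text should sit near 0.5."""
    total = 0.0
    prev = "<start>"
    for word in words:
        total += score(prev, word, secret)
        prev = word
    return total / len(words)


# Toy demonstration with a stand-in vocabulary (an assumption, not real text).
rng = random.Random(0)
vocab = [f"w{i}" for i in range(200)]
steps = [rng.sample(vocab, 4) for _ in range(400)]

marked = generate(steps)                    # watermarked choices
plain = [rng.choice(c) for c in steps]      # same candidates, no watermark

print("marked:", round(detect(marked), 2))
print("plain: ", round(detect(plain), 2))
print("wrong key:", round(detect(marked, b"other-key"), 2))
```

Because every candidate in this toy is treated as equally likely, picking the best-scoring one does not make the text read any differently, yet the average score of the marked text is pushed well above the roughly 0.5 expected of unmarked text. Checking the marked text with the wrong key should also give a score near 0.5, which is why the signal stays invisible to anyone without the key.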

Interestingly, however, it looks as though a simple way to get around this type of watermarking would be to run the text through another generative text AI and ask it to rewrite the text. This might sound silly, but it would let the user generate text without writing it themselves and then sidestep the safety feature, because the second tool's rewording would strip out the cryptographic watermark.
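The effect of rewriting on a statistical watermark can be illustrated with a small self-contained Python simulation. This is a toy sketch under stated assumptions, not a test of any real system: the vocabulary, the secret key (`KEY`), and the helpers `keyed_score` and `mean_score` are all hypothetical, and the "rewrite" is simulated by swapping most words for random ones rather than by calling a second AI.

```python
import hashlib
import hmac
import random

KEY = b"demo-key"  # hypothetical secret key
VOCAB = [f"w{i}" for i in range(200)]  # stand-in vocabulary


def keyed_score(prev, word, key=KEY):
    """Keyed pseudorandom value in [0, 1) for a (previous word, word) pair."""
    digest = hmac.new(key, f"{prev}|{word}".encode(), hashlib.sha256).digest()
    return int.from_bytes(digest[:8], "big") / 2**64


def mean_score(words):
    """Average keyed score over consecutive word pairs in the text."""
    pairs = zip(["<start>"] + words[:-1], words)
    return sum(keyed_score(p, w) for p, w in pairs) / len(words)


rng = random.Random(1)

# Watermarked text: at each step keep the best-scoring of 4 random candidates.
text = []
for _ in range(400):
    prev = text[-1] if text else "<start>"
    candidates = rng.sample(VOCAB, 4)
    text.append(max(candidates, key=lambda w: keyed_score(prev, w)))

# Simulated rewrite: replace each word with a random one 80% of the time.
rewritten = [rng.choice(VOCAB) if rng.random() < 0.8 else w for w in text]

print("original: ", round(mean_score(text), 2))
print("rewritten:", round(mean_score(rewritten), 2))
```

In this toy, the watermark lives in the relationship between neighbouring words, so changing most of the words pulls the average score back toward the roughly 0.5 expected of unmarked text. A real paraphrasing tool would behave differently in detail, but the sketch shows why rewording is a plausible way to wash the signal out.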

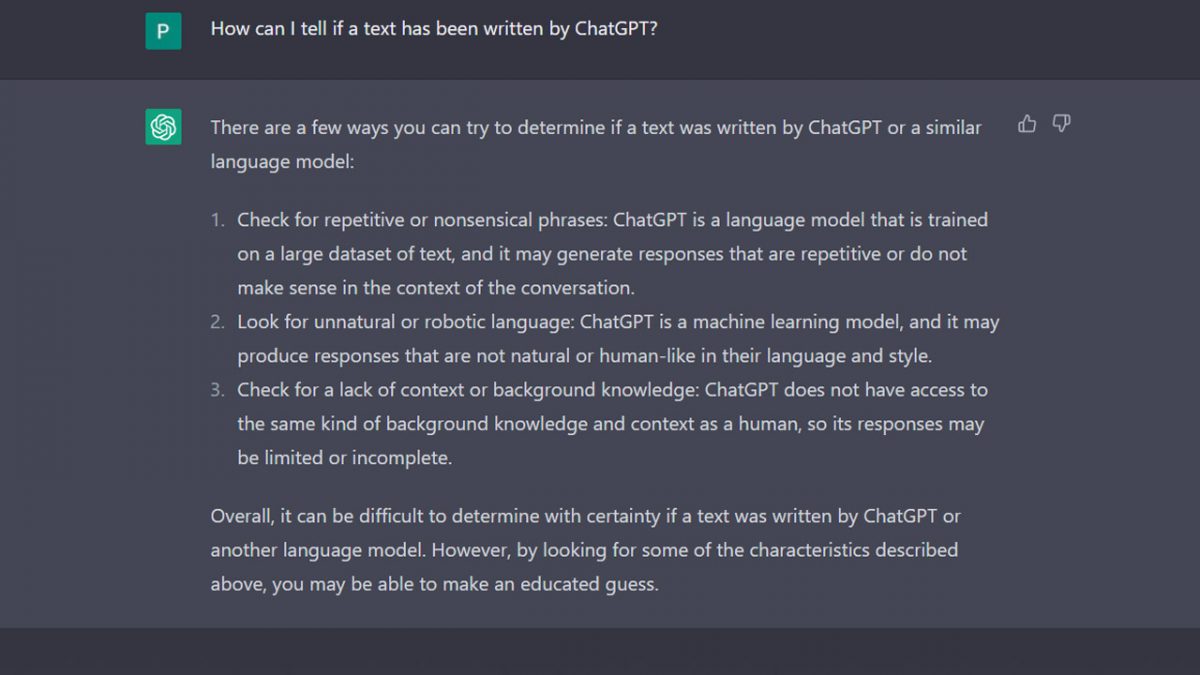

Clearly, then, this is still a work in progress, which is likely why, for now at least, OpenAI isn’t actively watermarking the text output coming from ChatGPT. However, if you are planning on using ChatGPT to write some important text for you, be careful: as mentioned above, anyone who knows what to look for can spot AI-generated text, even without a specific watermark.
