According to Reuters, OpenAI researchers warned the company's board of directors about a groundbreaking AI discovery just days before CEO Sam Altman's sudden firing. The researchers expressed concerns about the potential risks associated with this powerful technology, urging the board to proceed with caution and develop clear guidelines for its ethical use.

The AI algorithm, reportedly named OpenAI Q-star, represents a leap forward in the quest for artificial general intelligence (AGI), a hypothetical AI capable of surpassing human intelligence in most economically valuable tasks.

Researchers believe OpenAI Q-star's ability to solve mathematical problems, albeit at a grade-school level, indicates its potential to develop reasoning capabilities akin to human intelligence.

However, the researchers also highlighted the potential dangers of such advanced AI, citing long-standing concerns among computer scientists about the possibility of AI posing a threat to humanity. They emphasized the need for careful consideration of the ethical implications of this technology and the importance of developing safeguards to prevent its misuse.

OpenAI Q-star might have been in the works for a few months now

After Sam Altman's eventful firing, his deal with Microsoft, and his comeback, the artificial intelligence company OpenAI shook the entire technology world again today with OpenAI Q-star. To understand what this system is, we first need to understand what an AGI is and what it can do.

Artificial general intelligence (AGI), also known as strong AI or full AI, is a hypothetical type of AI that would possess the ability to understand and reason at the same level as a human being. AGI would be capable of performing any intellectual task that a human can, and would likely far surpass human capabilities in many areas.

While AGI does not yet exist, many experts believe that it is only a matter of time before it is achieved. Some experts, such as Ray Kurzweil, believe that AGI could be achieved by 2045. Others, such as Stuart Russell, believe that it is more likely to take centuries.

Rowan Cheung, founder of Rundown AI, posted on Twitter/X that Sam Altman said in a speech the day before he left OpenAI: "Is this a tool we've built or a creature we have built?"

Although the OpenAI Q-star news came out today, let's take you back in time.

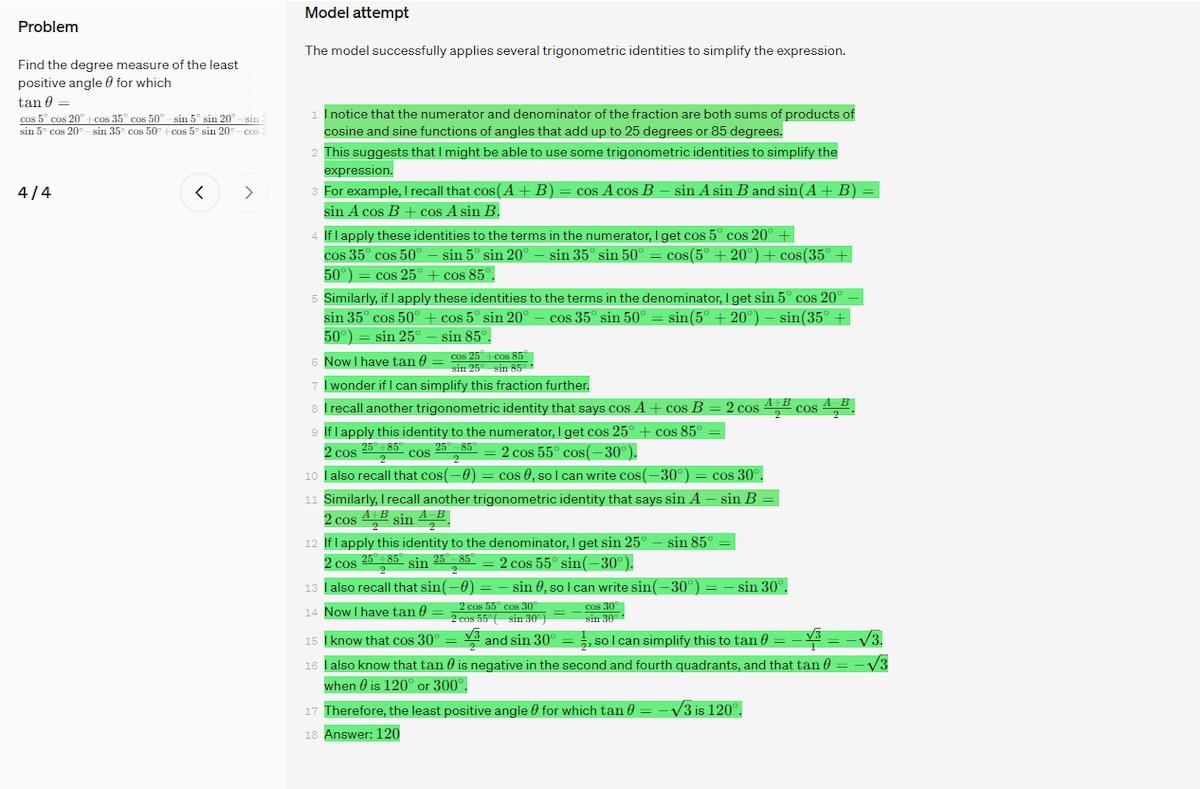

On May 31, 2023, OpenAI shared a blog post titled: "Improving Mathematical Reasoning with Process Supervision".

In the blog post, OpenAI described a new method for training large language models to perform mathematical problem solving. This method, called "process supervision", involves rewarding the model for each correct step in a chain-of-thought, rather than just for the correct final answer.

The authors of the article conducted a study to compare process supervision to the traditional method of "outcome supervision". They found that process supervision led to significantly better performance on a benchmark dataset of mathematical problems.
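To make the difference between the two approaches concrete, here is a toy sketch in Python. It is not OpenAI's implementation: the `Step` class, the function names, the sample problem, and the hand-written correctness labels are all assumptions made for illustration. In a real system, the per-step judgments would have to come from human annotators or a trained model rather than hard-coded flags.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Step:
    """One step of a chain-of-thought (hypothetical structure)."""
    text: str
    is_correct: bool  # assumed label; hand-written here for illustration


def outcome_reward(final_answer: str, reference_answer: str) -> float:
    """Outcome supervision: a single signal based only on the final answer."""
    return 1.0 if final_answer.strip() == reference_answer.strip() else 0.0


def process_rewards(steps: List[Step]) -> List[float]:
    """Process supervision: one signal for every step in the reasoning."""
    return [1.0 if step.is_correct else 0.0 for step in steps]


def first_error_index(steps: List[Step]) -> Optional[int]:
    """Return the position of the first incorrect step, or None if all are correct."""
    for index, step in enumerate(steps):
        if not step.is_correct:
            return index
    return None


# Toy problem: "A box has 3 rows of 4 apples plus 2 extra apples. How many apples?"
solution = [
    Step("3 rows of 4 apples is 3 * 4 = 12", is_correct=True),
    Step("Adding the extras gives 12 + 2 = 15", is_correct=False),  # arithmetic slip
]

outcome = outcome_reward(final_answer="15", reference_answer="14")
per_step = process_rewards(solution)
error_at = first_error_index(solution)

print("outcome reward:", outcome)
print("process rewards:", per_step)
print("first incorrect step:", error_at)
```

With outcome supervision, the model only learns that the whole solution was wrong. With process supervision, the feedback also shows that the first step was fine and that the mistake happened in the second one.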

If we look back at the Reuters report, citing an anonymous person connected to OpenAI, it says: "Some at OpenAI believe Q* (pronounced Q-Star) could be a breakthrough in the startup's search for what's known as artificial general intelligence (AGI), one of the people told Reuters. OpenAI defines AGI as autonomous systems that surpass humans in most economically valuable tasks.

"Given vast computing resources, the new model was able to solve certain mathematical problems, the person said on condition of anonymity because the individual was not authorized to speak on behalf of the company. Though only performing math on the level of grade-school students, acing such tests made researchers very optimistic about Q*’s future success, the source said."

We are aware that OpenAI's research on process supervision was done using GPT-4, but the claim that the "new model was able to solve certain mathematical problems", made by the anonymous person who spoke to Reuters, sounds quite similar to the results of that earlier research.

Where is the catch?

Let's talk about why the entire tech world has reacted so strongly to OpenAI Q-star. I am sure that you have seen the theme of artificial intelligence integrating into human life and then taking over the world in sci-fi movies.

HAL 9000 in 2001: A Space Odyssey, Skynet in The Terminator, and the Agents in The Matrix are the best-known examples of this theme, in which an artificial intelligence trains itself and comes to the conclusion that humanity is harmful to the world. That is one of the horrible possible outcomes of AGI. We also know that today's AI models are developed by training on specific datasets, which raises all sorts of privacy concerns.

As you may recall, for this reason alone, some countries have restricted the use of ChatGPT within their borders. Italy, for example, temporarily banned it in 2023.

What if AI models could solve complex problems themselves without needing a training dataset? Would it be possible to predict the outcome and whether it would be useful for us?

Regulation of AI is now being discussed, but no one knows what kind of research companies are doing behind closed doors.

Of course, we are not saying that OpenAI Q-star will be the end of humanity. But uncertainty arouses fear in humans, as it does in every biological creature.

Perhaps Sam Altman also had these questions in his head when he said, "Is this a tool we've built or a creature we have built?"

How about the good ending?

Maybe there is no need to be so pessimistic and OpenAI Q-star will be one of the biggest steps towards a brighter future the world and the scientific community have ever taken. Potentially, AGIs could be capable of solving problems that humans cannot interpret or solve.

Imagine a scientist who never gets tired and can analyze problems with near-perfect accuracy. How long do you think it would take this scientist to find a cure for cancer, which has caused millions of deaths over thousands of years, for example by working out why some animals appear to be far less prone to it than humans?

What if all the problems in the world, such as hunger, global warming, and soil pollution, were no longer hanging over us when you wake up tomorrow? Yes, a properly functioning AGI could potentially do all this too.

Maybe it is too early to talk about all this and we are just being paranoid. Let's leave it all to time and hope for the best for all of us.
