People have performed many mathematical proofs to show that a quantum computer will vastly outperform traditional computers on a number of algorithms. But the quantum computers we have now are error-prone and don't have enough qubits to allow for error correction. The only demonstrations we've had involve quantum computing hardware evolving out of a random configuration and traditional computers failing to simulate their normal behavior. Useful calculations are an exercise for the future.

But a new paper from Google's quantum computing group has now moved beyond these sorts of demonstrations and used a quantum computer as part of a system that can help us understand quantum systems in general, rather than the quantum computer. And they show that, even on today's error-prone hardware, the system can outperform classical computers on the same problem.

Probing quantum systems

To understand what the new work involves, it helps to step back and think about how we typically understand quantum systems. Since the behavior of these systems is probabilistic, we typically need to measure them repeatedly. The results of these measurements are then imported into a classical computer, which processes them to generate a statistical understanding of the system's behavior. With a quantum computer, by contrast, it can be possible to mirror a quantum state using the qubits themselves, reproduce it as often as needed, and manipulate it as necessary. This method has the potential to provide a route to a more direct understanding of the quantum system at issue.

Much of the paper is devoted to describing situations where this should be the case, in part elaborating on ideas described in earlier papers.

The first of these ideas describes some property of a quantum system involving an arbitrary number of items—like a quantum computer with n qubits. This is exactly the circumstance described above, where repeated measurements need to be made before a classical computer can reliably identify a property. By contrast, a quantum computer can store a copy of the system in its memory, allowing it to be repeatedly duplicated and processed.

These problems, the authors show, can be solved on a quantum computer in what's called polynomial time, where the number of qubits is raised to a constant power (denoted nk). Using classical hardware, by contrast, the time scales as a constant raised to the power related to the number of qubits. As the number of qubits increases, the time needed for classical hardware rises much faster.

Options two and three

The second task they identify is a quantum principal component analysis, where computers are used to identify the property that has the largest influence on the quantum system's behavior. This was chosen in part because this analysis is thought to be relatively insensitive to the noise introduced by errors in today's quantum processors. Mathematically, the team shows that the number of times you'd need to repeat the measurements for analysis on a classical system grows exponentially with the number of qubits. Using a quantum system, the analysis can be done with a constant number of repeats.

The final situation involves allowing a physical process to influence the state of a quantum system, causing it to evolve to a new state. The goal is to find a model of the process that can accurately predict what the new state would be. Again, using a classical system means the challenge of getting enough measurements scales exponentially with the number of qubits but grows much more slowly when quantum computing is used.

Why does a quantum computer perform so much better? The researchers say that a key step is storing two copies of the examined system and then entangling them. This method is something that's only possible on quantum hardware.

Real-world examples

While that all works mathematically, it's not a demonstration that quantum computers will provide this advantage over classical machines. But Google has access to a high-end quantum computer and came up with two ideas about testing this on its hardware.

The first is, at least for quantum mechanics, relatively simple: figure out how many measurements it takes to train a machine-learning algorithm to identify a property of a collection of quantum states. As always, in a purely classical system, you have to do a lot of measurements before the algorithm has enough data to perform accurate predictions. For the quantum computer, however, it's possible to make two copies of the state and perform measurements that involve both copies, which then extract information about the system's state.

In both cases, the AI was trained on small (8 qubit) systems that avoided problems with the errors that crop up in these systems. When used on larger systems, the AI trained on quantum processor data had a level of accuracy that was competitive with a specialized algorithm specifically designed for this function. This significantly outperforms the classical version of the same training.

The second real-world example is one where the systems were asked to determine if a transformation of a quantum system is time-reversal symmetric, meaning it could be reversed if we could get time to go backward. In this case, a classical machine-learning process could not make that determination when trained on data from traditional measurement experiments. For the quantum version, the team made a copy of the qubits, entangled the original and copy, performed the transformation on both, and then measured pairs of qubits (original and copy). The data from that measurement was then used to train the machine-learning algorithm in an unsupervised manner (meaning the algorithm wasn't told which transformation was which).

This method worked. The trained algorithm could distinguish cases where transformations were time-reversal symmetric, something that appears to be very difficult or impossible with the classical system.

What’s this tell us?

There's a lot to unpack here. First, in this paper, Google is claiming a quantum advantage rather than quantum supremacy. There's a big difference between the two, with the former being used for cases where quantum computers outperform classical ones and the latter for when classical computers are unlikely to deliver a solution. In terms of these experiments, these are cases where a classical system probably could eventually generate a solution; it would just take longer.

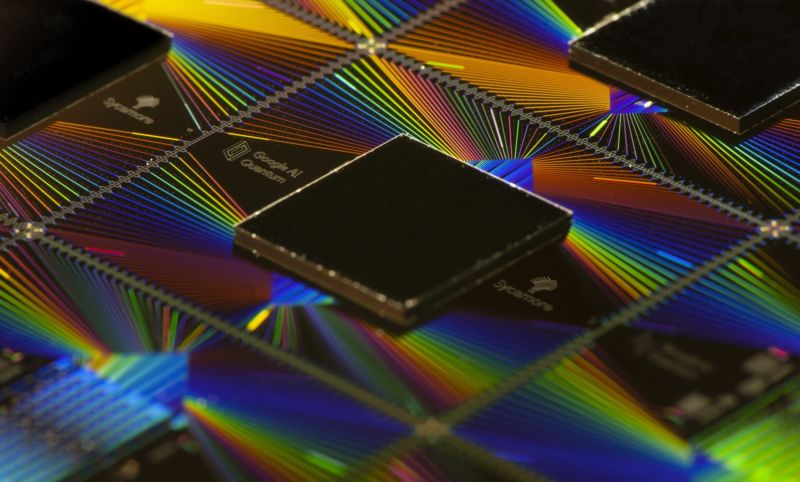

The striking thing about this is that the quantum system didn't even have to be very good to generate an advantage. Google's Sycamore processor has 53 qubits (only 40 of which were used here), and they're still fairly error-prone, yet it was able to perform well enough to produce a significant advantage.

The other big positive here is that previous instances where quantum computers have shown a major advantage involved them just being set to a random configuration and left to evolve to a new state—there was no hint of calculation involved. Here, both tests involved specific operations that can only be done on quantum computing hardware. We're not at calculations yet, but this work definitely required an ability to manipulate quantum systems. And, in theory, similar approaches could be used to help us understand quantum systems.

Moving from theory to practice is the biggest problem with developing this into a means of understanding quantum systems. For that to happen, we'd need a way to transfer the state of a quantum system into the state of the qubits. And, for a lot of systems we might be interested in, there's no obvious way to do that without falling back to measuring the system and then using the resulting classical information on its states to set the qubits—at which point we may have given up some or all of our advantage over classical systems.

The last thing worth noting is that this approach used a hybrid system: part quantum and part classical (the machine learning took place on classical hardware). Those hybrid systems are being widely explored to get the most out of low-qubit-count systems, so this is a nice demonstration that they're effective. The intriguing thing here is that it worked with unsupervised learning: The algorithm wasn't told what properties mattered but could recognize differences on its own. What's interesting there, the Google team suggests, is that a similar system could potentially identify distinctions that we don't even know exist and, so, help us gain a better understanding of quantum mechanics.

Science, 2022. DOI: 10.1126/science.abn7293 (About DOIs).

3175x175(CURRENT).thumb.jpg.b05acc060982b36f5891ba728e6d953c.jpg)

Recommended Comments

There are no comments to display.

Join the conversation

You can post now and register later. If you have an account, sign in now to post with your account.

Note: Your post will require moderator approval before it will be visible.