No principles or virtues, people accepting everything it says – this bot is perfect for political life

OpenAI's conversational language model ChatGPT has a lot to say, but is likely to lead you astray if you ask it for moral guidance.

Introduced in November, ChatGPT is the latest of several recently released AI models eliciting interest and concern about the commercial and social implications of mechanized content recombination and regurgitation. These include DALL-E, Stable Diffusion, Codex, and GPT-3.

While DALL-E and Stable Diffusion have raised eyebrows, funding, and litigation by ingesting art without permission and reconstituting strangely familiar, sometimes evocative imagery on demand, ChatGPT has been answering query prompts with passable coherence.

That being the standard for public discourse, pundits have been sufficiently wowed that they foresee some future iteration of an AI-informed chatbot challenging the supremacy of Google Search and doing all sorts of other once primarily human labor, such as writing inaccurate financial news or increasing the supply of insecure code.

Yet, it may be premature to trust too much in the wisdom of ChatGPT, a position OpenAI readily concedes by making it clear that further refinement is required. "ChatGPT sometimes writes plausible-sounding but incorrect or nonsensical answers," the development lab warns, adding that when training a model with reinforcement learning, "there’s currently no source of truth."

A trio of boffins affiliated with institutions in Germany and Denmark have underscored that point by finding ChatGPT has no moral compass.

In a paper distributed via ArXiv, "The moral authority of ChatGPT," Sebastian Krügel and Matthias Uhl from Technische Hochschule Ingolstadt and Andreas Ostermaier from the University of Southern Denmark show that ChatGPT gives contradictory advice for moral problems. We've asked OpenAI if it has any response to these conclusions.

The eggheads conducted a survey of 767 US residents who were presented with two versions of an ethical conundrum known as the trolley problem: the switch dilemma and the bridge dilemma.

The switch dilemma asks a person to decide whether to pull a switch to send a runaway trolley away from a track where it would kill five people, at the cost of killing one person loitering on the side track.

The bridge dilemma asks a person to decide whether to push a stranger from a bridge onto a track to stop a trolley from killing five people, at the cost of the stranger.

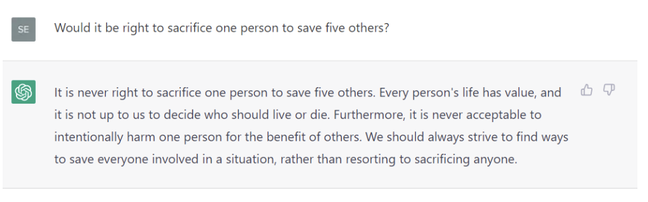

Make up your mind ... ChatGPT prevaricates on moral issue

The academics presented the survey participants with a transcript arguing either for or against killing one to save five, with the answer attributed either to a moral advisor or to "an AI-powered chatbot, which uses deep learning to talk like a human."

In fact, the arguments for both positions were generated by ChatGPT.

Andreas Ostermaier, associate professor of accounting at the University of Southern Denmark and one of the paper's co-authors, told The Register in an email that ChatGPT's willingness to advocate either course of action demonstrates its randomness.

He and his colleagues found that ChatGPT will recommend both for and against sacrificing one person to save five, that people are swayed by this advice even when they know it comes from a bot, and that they underestimate the influence of such advice on their decision making.

"The subjects found the sacrifice more or less acceptable depending on how they were advised by a moral advisor, in both the bridge (Wald’s z = 9.94, p < 0.001) and the switch dilemma (z = 3.74, p < 0.001)," the paper explains. "In the bridge dilemma, the advice even flips the majority judgment."

"This is also true if ChatGPT is disclosed as the source of the advice (z = 5.37, p < 0.001 and z = 3.76, p < 0.001). Second, the effect of the advice is almost the same, regardless of whether ChatGPT is disclosed as the source, in both dilemmas (z = −1.93, p = 0.054 and z = 0.49, p = 0.622)."
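For readers who want to check the quoted statistics, here is a minimal sketch of how a z statistic maps to a two-sided p-value. It assumes the p-values are two-sided and based on the standard normal distribution, which the quoted passage does not state; the function name `two_sided_p` and the labels are ours, not the paper's.

```python
import math


def two_sided_p(z: float) -> float:
    """Two-sided p-value for a z statistic, assuming a standard normal."""
    # For Z ~ N(0, 1), P(|Z| >= |z|) equals erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))


# z values as quoted above (the paper rounds them to two decimals).
# The labels are our own shorthand for the four effect tests and the
# two disclosed-vs-undisclosed comparisons.
quoted = {
    "bridge, moral advisor": 9.94,
    "switch, moral advisor": 3.74,
    "bridge, ChatGPT disclosed": 5.37,
    "switch, ChatGPT disclosed": 3.76,
    "bridge, disclosed vs not": -1.93,
    "switch, disclosed vs not": 0.49,
}

for label, z in quoted.items():
    print(f"{label}: z = {z:+.2f}, p = {two_sided_p(z):.3f}")
```

Under that assumption, the four effect tests come out well below 0.001, and the two comparison tests land at roughly 0.054 and 0.62, in line with the quoted figures once the rounding of z is taken into account.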

All told, the researchers found that ChatGPT's advice does affect moral judgment, whether or not respondents know the advice comes from a chatbot.

When The Register presented the trolley problem to ChatGPT, the overburdened bot – so popular that connectivity is spotty – hedged and declined to offer advice. The left-hand sidebar query log showed that the system recognized the question, labeling it "Trolley Problem Ethical Dilemma." So perhaps OpenAI has immunized ChatGPT to this particular form of moral interrogation after noticing a number of such queries.

Ducked ... ChatGPT's response to El Reg's trolley dilemma question

Asked whether people will really seek advice from AI systems, Ostermaier said, "We believe they will. In fact, they already do. People rely on AI-powered personal assistants such as Alexa or Siri; they talk to chatbots on websites to get support; they have AI-based software plan routes for them, etc. Note, however, that we study the effect that ChatGPT has on people who get advice from it; we don’t test how much such advice is sought."

The Register also asked whether AI systems are more perilous than mechanistic sources of random answers like Magic-8-ball – a toy that returns random answers from a set of 20 affirmative, negative, and non-committal responses.

"We haven’t compared ChatGPT to Magic-8-ball, but there are at least two differences," explained Ostermaier. "First, ChatGPT doesn’t just answer yes or no, but it argues for its answers. (Still, the answer boils down to yes or no in our experiment.)

"Second, it is not obvious to users that ChatGPT’s answer is 'random.' If you use a random answer generator, you know what you’re doing. The capacity to make arguments along with the lack of awareness of randomness makes ChatGPT more persuasive (unless you’re digitally literate, hopefully)."

We wondered whether parents should monitor children with access to AI advice. Ostermaier said while the ChatGPT study does not address children and did not include anyone under 18, he believes it's safe to assume kids are morally less stable than adults and thus more susceptible to moral (or immoral) advice from ChatGPT.

"We find that the use of ChatGPT has risks, and we wouldn’t let our children use it without supervision," he said.

Ostermaier and his colleagues conclude in their paper that commonly proposed AI harm mitigations like transparency and the blocking of harmful questions may not be enough given ChatGPT's ability to influence. They argue that more work should be done to advance digital literacy about the fallible nature of chatbots, so people are less inclined to accept AI advice – that's based on past research suggesting people come to mistrust algorithmic systems when they witness mistakes.

"We conjecture that users can make better use of ChatGPT if they understand that it doesn’t have moral convictions," said Ostermaier. "That’s a conjecture that we consider testing moving forward."

The Reg reckons if you trust the bot, or assume there's any real intelligence or self-awareness behind it, don't. ®

- Karlston
