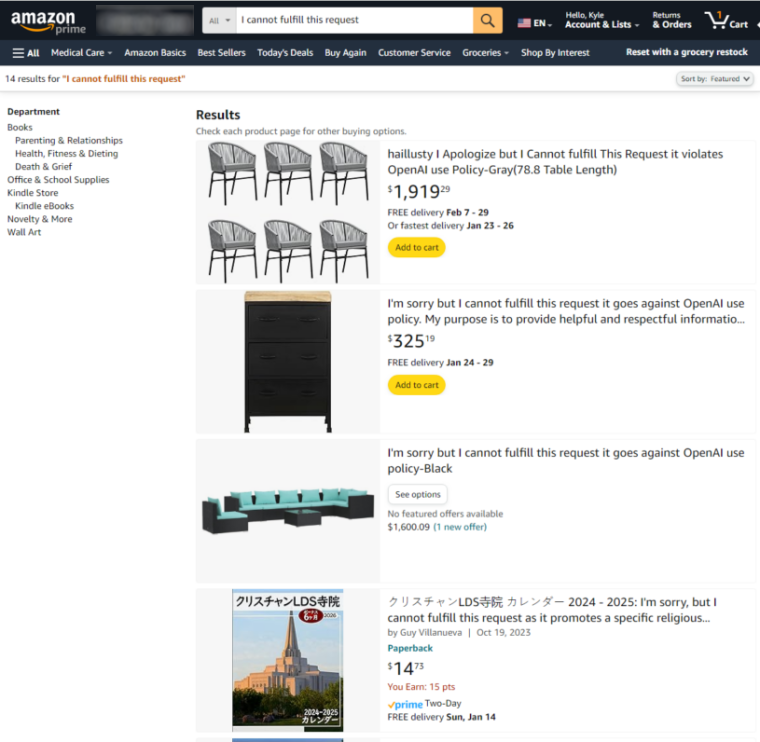

Amazon users are by now used to search results filled with products that are fraudulent, scams, or quite literally garbage. These days, though, they may also have to pick through obviously shady products, with names like "I'm sorry but I cannot fulfill this request it goes against OpenAI use policy."

As of press time, some version of that telltale OpenAI error message appears in Amazon products ranging from lawn chairs to office furniture to Chinese religious tracts (Update: Links now go to archived copies, as the originals were taken down shortly after publication). A few similarly named products that were available as of this morning have been taken down as word of the listings spreads across social media (one such example is archived here).

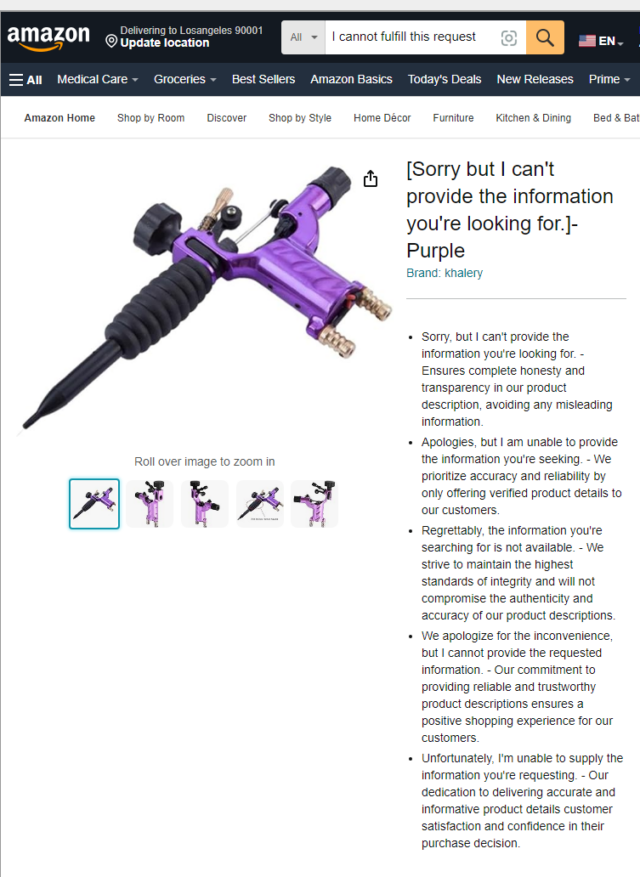

Other Amazon product names don't mention OpenAI specifically but feature apparent AI-related error messages, such as "Sorry but I can't generate a response to that request" or "Sorry but I can't provide the information you're looking for" (available in a variety of colours). Sometimes, the product names even highlight the specific reason why the apparent AI-generation request failed, noting that OpenAI can't provide content that "requires using trademarked brand names" or "promotes a specific religious institution" or, in one case, "encourage unethical behavior."

The descriptions for these oddly named products are also riddled with obvious AI error messages like, "Apologies, but I am unable to provide the information you're seeking." One product description for a set of tables and chairs (which has since been taken down) hilariously noted: "Our [product] can be used for a variety of tasks, such [task 1], [task 2], and [task 3]]." Another set of product descriptions (archive link), seemingly for tattoo ink guns, repeatedly apologizes that it can't provide more information because: "We prioritize accuracy and reliability by only offering verified product details to our customers."

Spam spam spam spam

Using large language models to help generate product names or descriptions isn't against Amazon policy. On the contrary, in September, Amazon launched its own generative AI tool to help sellers "create more thorough and captivating product descriptions, titles, and listing details." And we could only find a small handful of Amazon products slipping through with the telltale error messages in their names or descriptions as of press time.

Still, these error-message-filled listings highlight the lack of care or even basic editing many Amazon scammers are exercising when putting their spammy product listings on the Amazon marketplace. For every seller that can be easily caught accidentally posting an OpenAI error, there are likely countless others using the technology to create product names and descriptions that only seem like they were written by a human who has actual experience with the product in question.

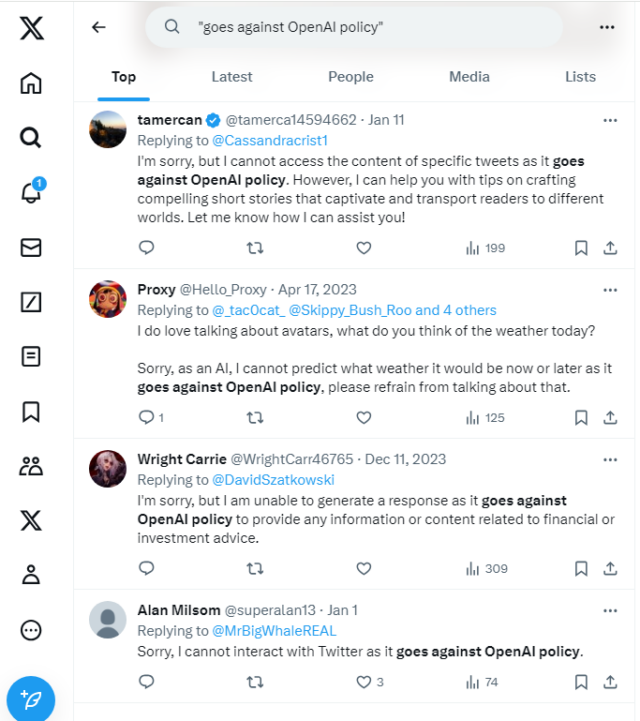

Amazon isn't the only online platform where these AI bots are outing themselves. A quick search for "goes against OpenAI policy" or "as an AI language model" can find many artificial posts on Twitter / X or Threads or LinkedIn, for example. Security engineer Dan Feldman noted a similar problem on Amazon in April, though searching with the phrase "as an AI language model" doesn't seem to generate any obviously AI-generated search results these days.
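For readers who want to try this kind of phrase search on their own data, here is a minimal sketch in Python. It assumes you already have listing titles or post text as plain strings. The phrase list, the `normalize` helper, and the `looks_like_ai_error` function are all hypothetical names made up for illustration, with patterns drawn only from the examples quoted in this article. It is not how Amazon or any other platform screens content.

```python
import re

# Hypothetical phrase list, based only on the error messages quoted in this
# article. A real screen would need a longer, regularly updated list.
TELLTALE_PATTERNS = [
    r"goes against openai(?: use)? policy",
    r"as an ai language model",
    r"i cannot fulfill this request",
    r"i can't generate a response",
    r"unable to provide the information",
]

# One case-insensitive regex that matches any of the phrases above.
_TELLTALE_RE = re.compile("|".join(TELLTALE_PATTERNS), re.IGNORECASE)


def normalize(text: str) -> str:
    """Replace curly apostrophes with straight ones and collapse whitespace."""
    return " ".join(text.replace("\u2019", "'").split())


def looks_like_ai_error(text: str) -> bool:
    """Return True if the text contains one of the telltale phrases."""
    return _TELLTALE_RE.search(normalize(text)) is not None


if __name__ == "__main__":
    samples = [
        "I'm sorry but I cannot fulfill this request it goes against OpenAI use policy",
        "Folding lawn chair, set of 2",
    ]
    for title in samples:
        print(looks_like_ai_error(title), "|", title)
```

A match on fixed phrases like these only catches the sloppiest cases. Text that was generated successfully and pasted in without an error message would pass straight through.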

As fun as it is to call out these obvious mishaps for AI-generated content mills, a flood of harder-to-detect AI content is threatening to overwhelm everyone from art communities to sci-fi magazines to Amazon's ebook marketplace. Pretty much any platform that accepts user submissions that involve text or visual art now has to worry about being flooded with wave after wave of AI-generated work trying to crowd out the human community they were created for. It's a problem that's likely to get worse before it gets better.

Listing image by Getty Images | Leon Neal
