AMD's Senior Processor Technical Marketing Manager, Donny Woligroski, discusses the challenges of creating AI PC technology.

"We were the first ones with the NPU and then we're the first ones to have kind of our second iteration already out and running."

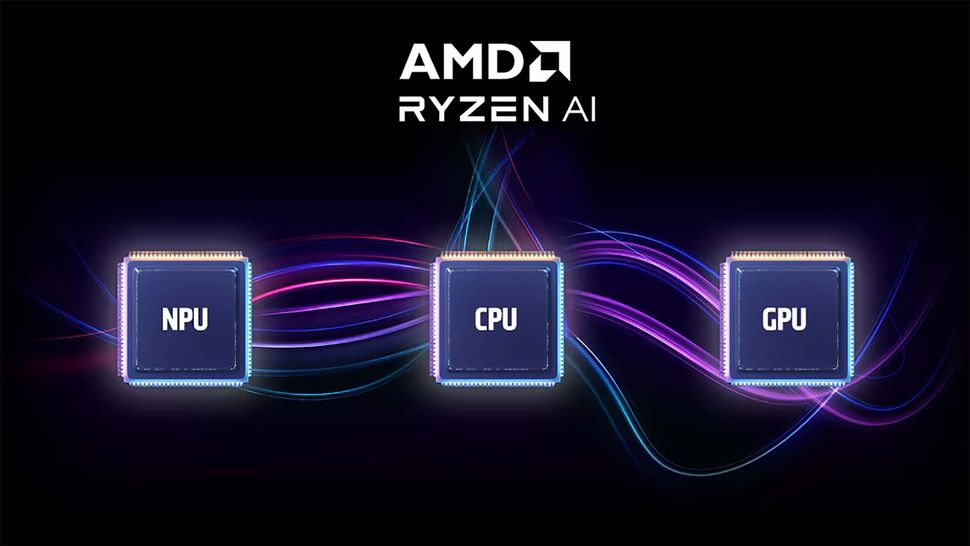

AI PCs have been getting a lot of attention lately. Microsoft specifically defines an AI PC as an AI-capable computer with a Copilot key and a system that uses an NPU (Neural Processing Unit) alongside a CPU and GPU. AMD was the first x86 chip company to ship processors with an integrated NPU, but Intel now has its own Core Ultra series with NPUs, which has allowed several more AI PCs to enter the market (learn more at our NPU guide).

Recently, AMD shared benchmarks with me, which show that AMD Ryzen AI processors perform faster and more efficiently than Intel's AI processors. After viewing this information, I sat down with AMD Senior Processor Technical Marketing Manager Donny Woligroski to discuss how AMD plans to maintain a competitive edge against Intel. We also talked about AI PCs, Ryzen AI processors, and what AMD sees in its future.

Disclaimer

This interview has been condensed and edited for clarity.

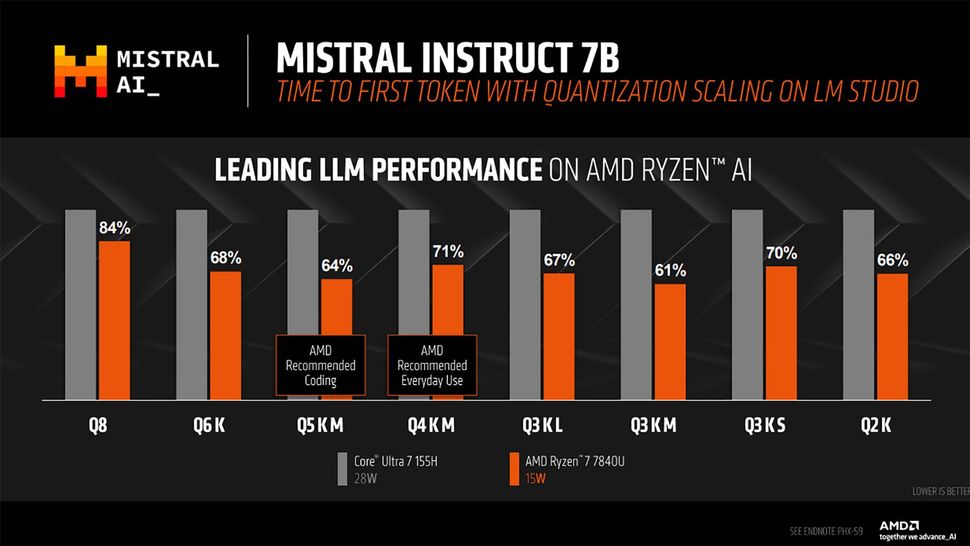

AMD Ryzen 7 vs Intel Core Ultra 7: Ryzen Mistral Instruct 7B benchmark measuring time to first token.

(Image credit: AMD)

Before speaking with Woligroski, AMD sent me presentation slides and a recorded briefing with benchmarks in which the AMD Ryzen 7 7840U (15W) clearly outperformed the Intel Core Ultra 7 155H (28W). Specifically, some benchmarks and demonstrations showed that AMD's processor responded to LLM text prompts significantly faster than Intel's processor when running Mistral AI's Instruct 7B model (see the time to first token chart above). Impressive results, indeed.

I recently attended an Intel AI Summit in Taipei, where thousands of developers learned more about Intel's new Core Ultra AI-accelerating processors. This got me thinking: despite AMD's impressive AI hardware, it's no secret that Intel holds more sway over the processor market than AMD does. I brought this up with Woligroski and asked how AMD intends to remain competitive in the realm of AI processors now that Intel has joined the fray.

"I mean, full disclosure, our competitors probably have a much larger kitty when it comes to this kind of thing," he told me. "But AMD's strength has always been... just make good products and [buyers] will come sooner or later when the word gets out. This is really no different. We're just doing the best we can, making the best hardware we can, and trying to let people know our value prop."

AMD Ryzen AI processors. (Image credit: AMD)

A little while later in our discussion, we returned to the subject of staying competitive in the AI processor arena. "We were the first ones with the NPU," he continued. "And then we're the first ones to have kind of our second iteration already out and running. So we had 10 TOPS, or 10 [trillion operations per second], on the first one in the 7000 series. In the 8000 series, we have 16 [TOPS]. So it's already 60% faster. And what we have disclosed in our December AI event is that 'hey, the next-gen is going to have three times this power.'"
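
For readers who want to check the arithmetic behind those figures, here is a minimal Python sketch. The two TOPS values come straight from the quote. The next-gen numbers are only an illustration: the quote does not say which generation "three times this power" is measured against, so the sketch shows both readings as assumptions, and the function and constant names are my own.

```python
# TOPS figures quoted in the interview (trillions of operations per second).
TOPS_7000_SERIES = 10
TOPS_8000_SERIES = 16


def percent_increase(old: float, new: float) -> float:
    """Return the relative increase from old to new, as a percentage."""
    return (new - old) / old * 100


uplift = percent_increase(TOPS_7000_SERIES, TOPS_8000_SERIES)
print(f"7000 -> 8000 series: {uplift:.0f}% more TOPS")

# Assumption: the quote does not specify the baseline for "three times",
# so both possible baselines are shown rather than picking one.
NEXT_GEN_MULTIPLIER = 3
for name, tops in (("7000 series", TOPS_7000_SERIES),
                   ("8000 series", TOPS_8000_SERIES)):
    print(f"3x the {name}: {tops * NEXT_GEN_MULTIPLIER} TOPS")
```

Going from 10 to 16 TOPS is a 60% increase, which matches the figure Woligroski gave.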

He went on to explain that "AMD is very much an aggressive player and quite innovative" thanks to its intelligent people "whose entire goal is to make sure that [AMD is] on the cutting edge." To that end, teams at AMD frequently discuss how best to optimize the GPU, CPU, and NPU for better overall performance so the company can continue to produce competitive and useful AI processors.

AI PCs feature an NPU in addition to a CPU and GPU. (Image credit: AMD)

Our discussion led us to the benefits of using AI PCs. I asked him which use cases he thought the average consumer would be most interested in for a computer with an NPU (an AI PC). Woligroski responded that the ability to do AI tasks locally on an AI PC was very important, listing four key reasons: better performance, better security/privacy, the ability to use open-source software rather than subscription AI services, and having an NPU that is specifically created to be an efficient AI engine.

"So those are [reasons]... I would flag to people and say, 'Hey, this is why you want this hardware locally.' And that's not to say we don't think server AI is important. Absolutely, it is. And there's going to be things that are more appropriate to run on servers. And if you're not a commercial user, you might be more open to that but it's really evolving."

(Image credit: Kevin Okemwa | Bing Image Creator)

We ended up talking about how AI PCs allow us to run LLMs locally on a laptop while on the go. "Yeah," he said. "It is humbling to run an LLM locally and be like, 'This is my laptop. My laptop is that smart. I can say things to it and it understands what I'm saying and it knows all these things. It's so bizarre.' Like LLaMA, the 7 billion parameter model I think fits in, I want to say 4 gigs of RAM. That's crazy. That's so tiny."
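
As a rough sanity check on that "4 gigs" figure, the Python sketch below estimates how much memory the weights of a 7 billion parameter model need at different precisions. It rests on assumptions that are mine, not Woligroski's: that the figure refers to a model quantized to about 4 bits per weight, that 1 GB means 10^9 bytes, and that only the weights are counted (no context cache or runtime overhead). The function name is hypothetical.

```python
def model_size_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes).

    Counts the weights only; runtime overhead is not included.
    """
    return num_params * bits_per_param / 8 / 1e9


PARAMS_7B = 7e9  # 7 billion parameters, as mentioned in the quote

# Assumption: 16-, 8-, and 4-bit weights are common choices for local LLMs.
for bits in (16, 8, 4):
    print(f"{bits}-bit weights: ~{model_size_gb(PARAMS_7B, bits):.1f} GB")
```

At 4 bits per weight, the weights come to roughly 3.5 GB, which is consistent with a 7B model fitting in about 4 GB of RAM.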

"...It's a new resource too, if you think about it that way, right? We've had CPUs and GPUs for years. And now you could do a bunch of things on this resource and it's not slowing down your other stuff that otherwise would have been relied on for that. And it's not like we have a slower CPU this year because we put in an NPU. And it's not like we have slower graphics. Quite the contrary, it all kind of, you know, the rising tide hits all the boats on that level."

AMD frequently takes part in tech events. (Image credit: AMD)

Woligroski also reminded me that while the NPU is important in AI PCs, the CPU and GPU are also key factors. What is more important is having a system that knows when best to use the NPU, CPU, and GPU for various tasks.

He stated, "In a perfect world - and I think in the future, this will be there - is that the OS is going to be intelligent enough to kind of handle all that stuff... I think the OS is a big piece of this, and it's great to have a great partner like Microsoft who's really focused on getting that experience up. Like, Copilot Pro is probably one of the best things I've played with in my career, and that's saying something."

"...AI isn't just an NPU problem," he continued. "The way AMD defines it, and the way Microsoft also, I believe, will define it, is an AI processor in the next-gen AI PC. It's going to need an NPU, yes, but it's also still going to need AI acceleration on the CPU. There's [sic] a lot of things the CPU does to also enhance your experience. There's also a ton of acceleration on the GPU now too. So it's not just this one thing (the NPU), although this one thing is in the center of everyone's minds because it's the new piece... The NPU is definitely going to come into its own in 2024. But it's ramping... Really, this is the first iteration of the AI PC. The next-gen PC will be coming. And when that is [here] it's going to be more integrated into your OS. And that means your whole operating system is going to be more tied into it."

As we continued to talk, I asked Woligroski about the future of AMD, specifically: "What challenges is AMD facing right now with machine learning and AI in general?" He told me that AI is evolving so rapidly compared to past computer innovations that it's hard to know where things will be in the next few years. As a result, the company frequently has to change tack on its plans.

"The good is also the bad, right?" he replied. "The speed at which this is moving makes it very hard to crystal ball a year out, two years out, compared to our traditional computing models where it's like okay, we need more GPU, we need more CPU, and this amount every year seems to be really good and people like it. Now it's like, okay, what's going to be taxed the most? Where do we put our resources? What kind of software do we optimize?

"And I've seen those targets change over the last year where we thought it was one thing and then we're like, oh, okay, this thing over here is happening and then let's work on that and it's oh, this thing over here. So it is just so fast-moving. And the good news is, I can't see it sustaining that realistically. I mean, it's like the gold rush, but it's probably going to come down in a couple of years, and then we'll just be refining the experience and making it easier and more accessible, which we're already doing."

AI PCs in our future

This year, we have already seen a big wave of AI PCs hit the market, and more are on the way. These computers are already incredibly impressive, allowing us to perform AI tasks far quicker on our local systems than we have hitherto been able to. As time goes on, AMD will refine its hardware and bring us even better experiences.

We really are on the cusp of a huge shift in the laptop market and these first few waves of AI PCs are just the beginning. AMD will certainly take steps to continue growth in this area for years to come.
