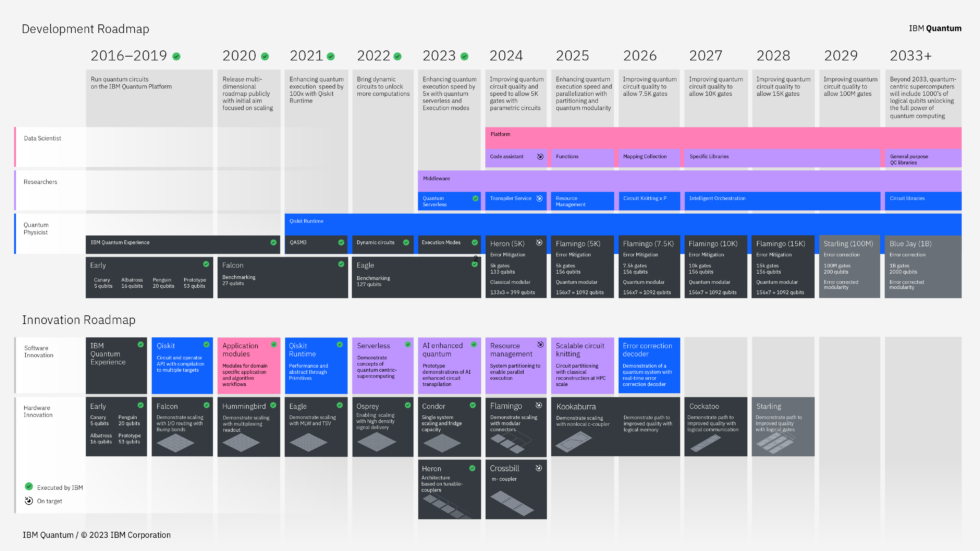

On Monday, IBM announced that it has produced the two quantum systems that its roadmap had slated for release in 2023. One of these is based on a chip named Condor, which is the largest transmon-based quantum processor yet released, with 1,121 functioning qubits. The second is based on a combination of three Heron chips, each of which has 133 qubits. Smaller chips like Heron and its successor, Flamingo, will play a critical role in IBM's quantum roadmap—which also got a major update today.

Based on the update, IBM expects to have error-corrected qubits working by the end of the decade, enabled by improvements to individual qubits made over several iterations of the Flamingo chip. While these systems probably won't place things like existing encryption schemes at risk, they should be able to reliably execute quantum algorithms that are far more complex than anything we can do today.

We talked with IBM's Jay Gambetta about everything the company is announcing today, including existing processors, future roadmaps, what the machines might be used for over the next few years, and the software that makes it all possible. But to understand what the company is doing, we have to back up a bit to look at where the field as a whole is moving.

Qubits and logical qubits

Nearly every aspect of working with a qubit is prone to errors. Setting its initial state, maintaining that state, performing operations, and reading out the state can all introduce errors that will keep quantum algorithms from producing useful results. So a major focus of every company producing quantum hardware has been to limit these errors, and great strides have been made in that regard.

There's some indication that those strides have now gotten us to the point where it's possible to execute some simpler quantum algorithms on existing hardware. And the range of algorithms that can be run this way is likely to grow as the hardware improves over the next few years.

In the long term, though, we're unlikely to ever get the qubit hardware to the point where the error rate is low enough that a processor could successfully complete a complex algorithm that might require billions of operations over hours of computation. For that, it's generally acknowledged that we'll need error-corrected qubits. Error correction spreads the quantum information of a single qubit across multiple hardware qubits; the resulting encoded qubit is termed a "logical qubit." Additional qubits are used to monitor the logical qubit for errors, allowing those errors to be corrected.
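To make the idea of spreading one qubit's information across several hardware qubits a bit more concrete, here is a classical toy analogy: a three-bit repetition code. It is a minimal sketch, not IBM's scheme. Real quantum codes cannot simply copy a qubit's state and must also catch phase errors, but the structure is similar: parity checks play the role of the extra monitoring qubits, revealing where an error occurred without reading out the encoded value. All function names here are hypothetical.

```python
import random


def encode(bit: int) -> list[int]:
    """Spread one logical bit across three physical bits (repetition code)."""
    return [bit, bit, bit]


def apply_noise(bits: list[int], p: float, rng: random.Random) -> list[int]:
    """Flip each physical bit independently with probability p."""
    return [b ^ 1 if rng.random() < p else b for b in bits]


def syndrome(bits: list[int]) -> tuple[int, int]:
    """Parity checks between neighbouring bits. Like the monitoring qubits,
    they reveal where a flip happened, not the encoded value itself."""
    return (bits[0] ^ bits[1], bits[1] ^ bits[2])


def correct(bits: list[int]) -> list[int]:
    """Use the syndrome to locate and undo a single bit flip."""
    flip_index = {(1, 0): 0, (1, 1): 1, (0, 1): 2}.get(syndrome(bits))
    fixed = list(bits)
    if flip_index is not None:
        fixed[flip_index] ^= 1
    return fixed


def logical_error_rate(p: float, trials: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo estimate of how often the corrected logical bit is wrong."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        bits = correct(apply_noise(encode(0), p, rng))
        if bits[0] != 0:  # after correction all three bits agree
            failures += 1
    return failures / trials


def analytic_logical_error_rate(p: float) -> float:
    """Decoding fails when two or three bits flip: 3p^2(1-p) + p^3."""
    return 3 * p**2 - 2 * p**3


if __name__ == "__main__":
    p = 0.01
    print(f"physical error rate: {p}")
    print(f"simulated logical error rate: {logical_error_rate(p):.5f}")
    print(f"analytic 3p^2 - 2p^3: {analytic_logical_error_rate(p):.6f}")
```

With a physical flip probability of 1 percent, the encoded bit fails only when two or more of its three bits flip, which happens with probability 3p² − 2p³, or roughly 0.03 percent. That gain only appears when p is already small, which is why the hardware error rates discussed here matter so much.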

Computing using logical qubits requires two things. One is that the error rates of the individual hardware qubits have to be low enough that individual errors can be identified and corrected before new ones take place. (There's some indication that the hardware is good enough for this to work with partial efficiency.) The second thing you need is lots of hardware qubits, since each logical qubit requires multiple hardware qubits to function. Some estimates suggest we'll need a million hardware qubits to create a machine capable of hosting a useful number of logical qubits.
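The article doesn't say which error-correcting code IBM plans to use, but many "million hardware qubits" estimates assume the surface code. Under that assumption, a rough overhead calculation looks like the sketch below. The code distance is a free parameter picked here for illustration, and the sketch ignores the extra qubits real machines need for routing and other overheads.

```python
def surface_code_qubits_per_logical(distance: int) -> int:
    """Physical qubits in one rotated surface-code patch of odd distance d:
    d*d data qubits plus d*d - 1 measurement (monitoring) qubits."""
    if distance < 3 or distance % 2 == 0:
        raise ValueError("distance must be an odd integer >= 3")
    return 2 * distance * distance - 1


def logical_qubit_capacity(physical_qubits: int, distance: int) -> int:
    """Number of whole logical-qubit patches that fit (no routing overhead)."""
    return physical_qubits // surface_code_qubits_per_logical(distance)


if __name__ == "__main__":
    for d in (3, 15, 25):
        per_logical = surface_code_qubits_per_logical(d)
        print(f"d={d}: {per_logical} physical qubits per logical qubit, "
              f"{logical_qubit_capacity(1_000_000, d)} logical qubits per million")
```

Under these assumptions, a million hardware qubits at distance 25 would host about 800 logical qubits, while a 1,121-qubit chip like Condor would fit only 65 of the smallest (distance-3) patches. Larger distances suppress errors further but cost quadratically more hardware.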

IBM is now saying that it expects to have a useful number of logical qubits by the end of the decade, and Gambetta explained how today's announcements fit into that roadmap.

Qubits and gates

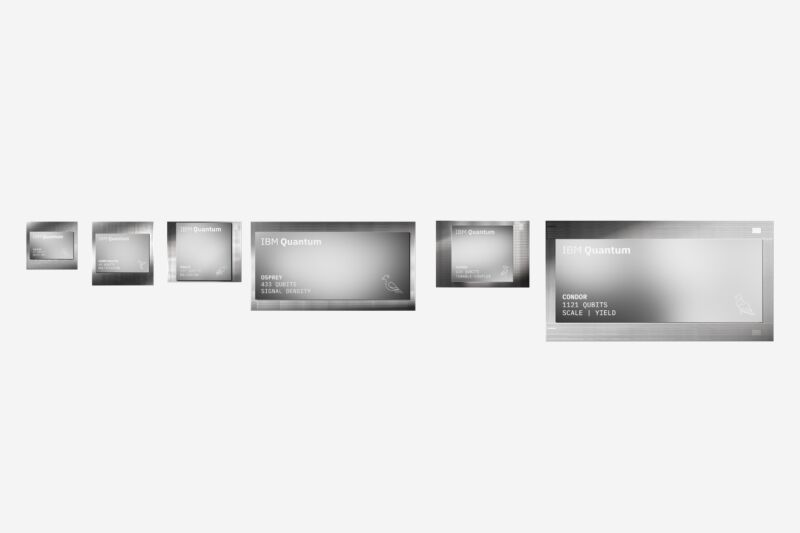

Gambetta said that the company has been taking a two-track approach to getting its hardware ready. One aspect of this has been developing the ability to consistently fabricate high-quality qubits in large numbers. And he said that the 1,000+ qubit Condor is an indication that the company is in good shape in that regard. "It's about 50 percent smaller qubits," Gambetta told Ars. "The yield is right up there—we got the yield close to 100 percent."

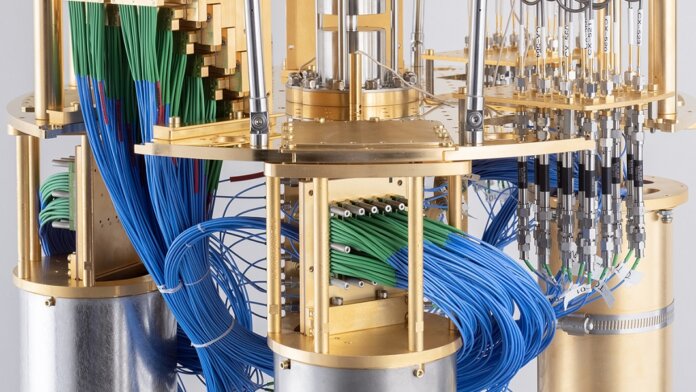

The second aspect IBM has been working on is limiting errors that occur when operations are performed on individual qubits or on pairs of qubits. These operations, termed gates, can be error-prone themselves. And changing the state of a qubit can produce subtle signals that can bleed into neighboring qubits, a phenomenon called crosstalk. Heron, the smaller of the new processors, represents a four-year effort to improve gate performance. "It's a beautiful device," Gambetta said. "It's five times better than the previous devices, the errors are way less, [and] crosstalk can't really be measured."

Many of the improvements come down to introducing tunable couplers between the qubits, a change from the fixed-frequency hardware the company had used previously. This has sped up all gate operations, with some seeing a 10-fold boost. The less time you spend doing anything with a qubit, the less opportunity there is for errors to crop up.
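One simple way to see why faster gates help is to treat decoherence as exponential decay with some coherence time T, so the chance of losing a qubit's state during a gate of duration t is 1 − e^(−t/T). The sketch below uses made-up numbers (a 100-microsecond coherence time and gate durations of 500 and 50 nanoseconds), not Heron's actual specifications, and it ignores control errors entirely.

```python
import math


def decoherence_error(gate_time_ns: float, coherence_time_ns: float) -> float:
    """Probability of decay during one gate under a simple exponential model."""
    # -expm1(-x) computes 1 - exp(-x) accurately when x is small
    return -math.expm1(-gate_time_ns / coherence_time_ns)


# Hypothetical values chosen for illustration only
COHERENCE_NS = 100_000.0  # 100 microseconds
slow = decoherence_error(500.0, COHERENCE_NS)
fast = decoherence_error(50.0, COHERENCE_NS)  # a gate that is 10 times faster

print(f"slow gate error: {slow:.5f}")
print(f"fast gate error: {fast:.6f}")
print(f"improvement: {slow / fast:.2f}x")
```

For gates much shorter than T, this error grows almost linearly with gate time, so in this model a 10-fold speedup cuts the decoherence contribution by nearly 10-fold.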

Many of these improvements were tested over multiple iterations of the company's Eagle chip, which was first introduced in 2021. The company's new roadmap will see an improved iteration of the 133-qubit Heron released next year that will be able to run circuits containing 5,000 gate operations. That will be followed by multiple iterations of next year's 156-qubit Flamingo processor that will take that figure up to 15,000 gate operations by 2028.
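The gate-count targets matter because errors compound. If each gate fails independently with probability p (a simplification that ignores crosstalk and any error mitigation), a circuit of N gates runs without a single error with probability (1 − p)^N. The sketch below uses hypothetical error rates to show how demanding 5,000- and 15,000-gate circuits are under that model.

```python
import math


def circuit_success(gate_error: float, num_gates: int) -> float:
    """Probability that none of num_gates independent gates fails."""
    # log1p keeps precision when gate_error is tiny
    return math.exp(num_gates * math.log1p(-gate_error))


def max_gate_error(num_gates: int, target_success: float) -> float:
    """Largest per-gate error that still reaches target_success over num_gates.
    Solves (1 - p)^N = F for p."""
    return -math.expm1(math.log(target_success) / num_gates)


if __name__ == "__main__":
    for n in (5_000, 15_000):
        for p in (1e-3, 1e-4):
            print(f"N={n:>6}, p={p:.0e}: success = {circuit_success(p, n):.4f}")
        print(f"  p needed for 50% success at N={n}: {max_gate_error(n, 0.5):.2e}")
```

In this model, a 5,000-gate circuit needs per-gate errors of roughly 1.4 × 10⁻⁴ to succeed half the time, and tripling the circuit to 15,000 gates requires cutting that error by about a factor of three.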

These chips will also be linked together into larger processors like Crossbill and Kookaburra that also appear on IBM's roadmap (for example, seven Flamingos could be linked to create a processor with a similar qubit count to the current Condor). The focus here will be on testing different means of connecting qubits, both within and between chips.
