Bosses at Google will have been spitting feathers behind closed doors in recent weeks as OpenAI has grabbed the world’s attention and put its ChatGPT model at the forefront of the conversation on consumer AI tech. Google has been involved in the development of OpenAI’s tech, but it is Microsoft that has jumped on the hype train generated by OpenAI: the company looks set to invest $10 billion in OpenAI and is moving to incorporate its tech into various products.

However, whereas Microsoft looks set to pay its way to the leading positions in these emerging AI markets, Google has continued to develop its own AI products, and the search giant looks set to launch a ChatGPT rival through its subsidiary DeepMind in the near future. The chatbot, called Sparrow, was introduced last year as a proof of concept in a research paper. DeepMind CEO Demis Hassabis said that Sparrow could be released in a "private beta" in 2023.

Hassabis also explained why the project has lagged behind ChatGPT, saying that caution is warranted when dealing with these types of products. That caution looks justified given the range of risks that generative technologies like this have exposed since ChatGPT launched. Alongside plaudits and levels of excitement that could legitimately be called hysteria, ChatGPT’s release has also caused alarm because of how easy it has been to get past the guardrails designed to stop it from generating harmful and biased text, and because scammers have been using it to craft phishing emails and even malicious code.

Another key consideration that DeepMind is taking into account with Sparrow is factual accuracy. When you query these types of language models, they output text that reads convincingly but has only been generated to look like what a real answer would be. The tools themselves have no conception of whether what they have told you is true.

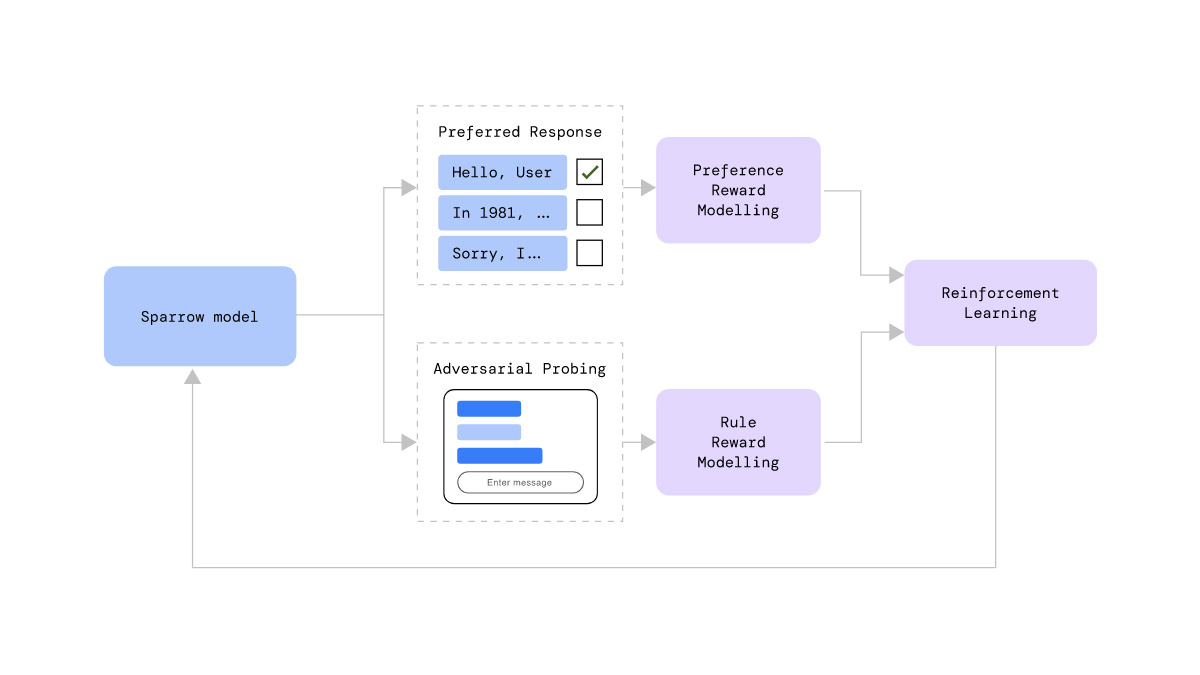

To help deal with this, Sparrow is expected to include important features such as the ability to cite specific sources of information. According to the research paper describing how Sparrow works, it supported its plausible-sounding answers with evidence 78% of the time. DeepMind is also working on rules that constrain the chatbot’s behavior and on a willingness to defer to humans in certain contexts.

The above innovations sound like important steps for these types of tools. The addition of links to cited information definitely gives Sparrow a much more Google flavor than what you currently get from ChatGPT. How this will address the fears raised in areas such as education remains to be seen, however. While citing the sources used to formulate answers could offer an excellent starting point for research, it could also serve up a ready-made essay for submission, complete with relevant references.
