Responsibility starts at the beginning.

You've probably heard or read people talking about ethical AI. While computers can't actually think and thus have no bias or sense of responsibility of their own, the people developing the software that powers it all certainly do, and that's what makes ethical AI important.

It's not an easy thing to describe or to notice. That doesn't take anything away from how vital it is that big tech companies that develop consumer-facing products powered by AI do it right. Let's take a look at what ethical AI is and what is being done to make sure AI makes our lives better instead of worse.

AI is nothing more than a chunk of software that takes in something, processes it, and spits out something else. Really, that's what all software does. What makes AI different is that the output can be wildly different from the input.

Take ChatGPT or Google Bard for instance. You can ask either to solve math problems, look something up on the web, tell you what day Elvis Presley died, or write you a short story about the history of the Catholic church. Nobody would have thought you could use your phone for this a few years ago.

Some of those are pretty easy tasks. Software that's integrated with the internet can fetch information about anything and regurgitate it back as a response to a query. It's great because it can save time, but it's not really amazing.

One of those things is different, though. Writing a story means creating meaningful content that uses human mannerisms and speech, which isn't something that can be looked up on Google. The software recognizes what you are asking for (write me a story) and the parameters to use (historical data about the Catholic church), but the rest seems amazing.

Believe it or not, responsible development matters in both types of use. Software doesn't magically decide anything and it has to be trained responsibly, using sources that are either unbiased (impossible) or equally biased from all sides.

You don't want Bard to use someone who thinks Elvis is still alive as the sole source for the day he died. You also wouldn't want someone who hates the Catholic church to program software used to write a story about it. Both points of view need to be considered and weighed against other valid points of view.

Ethics matter as much as keeping natural human bias in check. A company that can develop something needs to make sure it is used responsibly. If it can't do that, it needs to not develop it at all. That's a slippery slope — one that is a lot harder to manage.

The companies that develop intricate AI software are aware of this and, believe it or not, mostly follow the rule that some things just shouldn't be made even if they can be.

(Image credit: Microsoft)

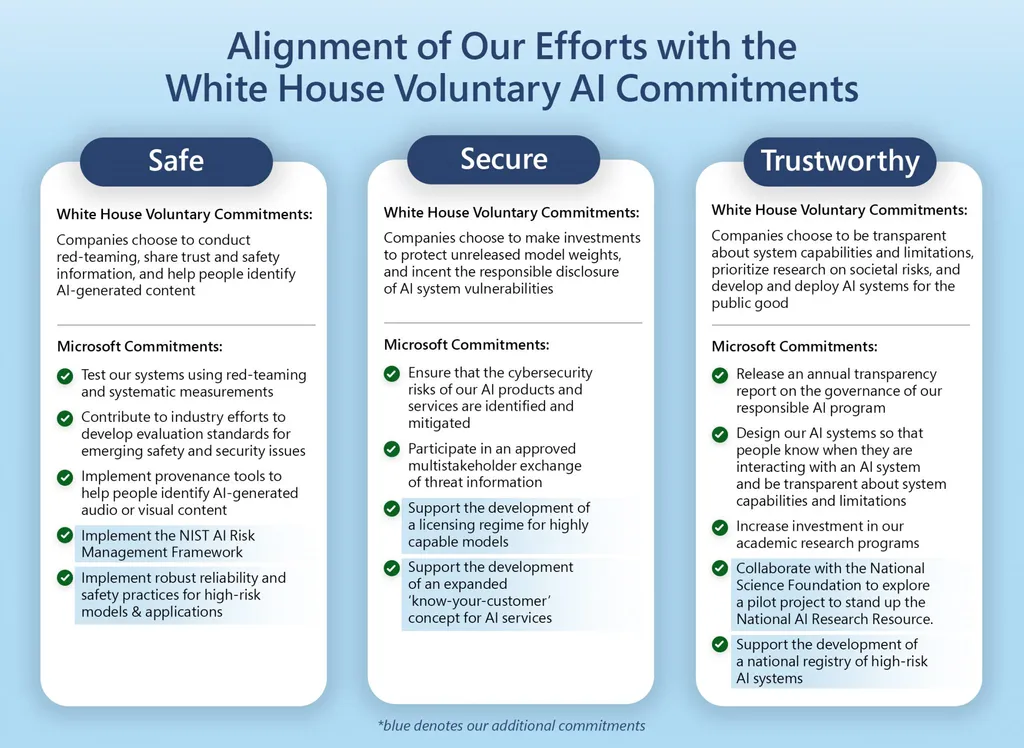

I reached out to both Microsoft and Google about what's being done to make sure AI is used responsibly. Microsoft told me it didn't have anything new to share but directed me to a series of corporate links and blog posts about the subject. Reading through them shows that Microsoft has committed to "ensure that advanced AI systems are safe, secure, and trustworthy" in accordance with current U.S. policy, and that it outlines its internal standards in great detail. Microsoft currently has an entire team working on responsible AI.

In contrast, Google has plenty to say in addition to its already published statements. I recently attended a presentation about the subject where senior Google employees said the only AI race that matters is the race for responsible AI. They explained that the fact that something is technically possible isn't a good enough reason to greenlight a product: harms need to be identified and mitigated as part of the development process, and what is learned from them is then used to further refine that process.

Facial recognition and emotion detection were given as examples. Google is really good at both, and if you don't believe that, have a look at Google Photos, where you can search for a specific person or for happy people in your own pictures. This kind of technology is also the number one feature requested by potential customers, but Google refuses to develop it further. The potential harm is enough for Google to refuse to take money for it.

Even behind a VR headset or in dark glasses, Google can identify me. (Image credit: Future)

Google, too, is in favor of both governmental and internal regulation. That's both surprising and important, because a level playing field where no company can create harmful AI is something that both Google and Microsoft think outweighs any potential income or financial gain.

What impressed me the most was Google's response when I asked about balancing accuracy and ethics. For something like plant identification, accuracy is very important while bias is less important. For something like identifying someone's gender in a photo, both are equally important.

Google breaks AI into what it calls slices; each slice does a different thing and the outcome is evaluated by a team of real people to check the results. Adjustments are made until the team is satisfied, then further development can happen.

None of this is perfect, and we have all seen AI do or repeat very stupid things, sometimes even hurtful things. If these biases and ethical breaches can find their way into one product, they can (and do) find their way into all of them.

What's important is that the companies developing the software of the future recognize this and keep trying to improve the process. It will never be perfect, but as long as each iteration is better than the last, we're moving in the right direction.

- Adenman
