Opinion: The worst human impulses will find plenty of uses for generative AI.

Rob Reid is a venture capitalist, New York Times-bestselling science fiction author, deep-science podcaster, and essayist. His areas of focus are pandemic resilience, climate change, energy security, food security, and generative AI. The opinions in this piece do not necessarily reflect the views of Ars Technica.

Shocking output from Bing’s new chatbot has been lighting up social media and the tech press. Testy, giddy, defensive, scolding, confident, neurotic, charming, pompous—the bot has been screenshotted and transcribed in all these modes. And, at least once, it proclaimed eternal love in a storm of emojis.

What makes all this so newsworthy and tweetworthy is how human the dialog can seem. The bot recalls and discusses prior conversations with other people, just like we do. It gets annoyed at things that would bug anyone, like people demanding to learn secrets or prying into subjects that have been clearly flagged as off-limits. It also sometimes self-identifies as “Sydney” (the project’s internal codename at Microsoft). Sydney can swing from surly to gloomy to effusive in a few swift sentences—but we’ve all known people who are at least as moody.

No AI researcher of substance has suggested that Sydney is within light years of being sentient. But transcripts like this unabridged readout of a two-hour interaction with Kevin Roose of The New York Times, or multiple quotes in this haunting Stratechery piece, show Sydney spouting forth with the fluency, nuance, tone, and apparent emotional presence of a clever, sensitive person.

For now, Bing’s chat interface is in a limited pre-release. And most of the people who really pushed its limits were tech sophisticates who won't confuse industrial-grade autocomplete—which is a common simplification of what large language models (LLMs) are—with consciousness. But this moment won’t last.

Yes, Microsoft has already drastically reduced the number of questions users can pose in a single session (from infinity to six), and this alone collapses the odds of Sydney crashing the party and getting freaky. And top-tier LLM builders like Google, Anthropic, Cohere, and Microsoft partner OpenAI will constantly evolve their trust and safety layers to squelch awkward output.

But language models are already proliferating. The open source movement will inevitably build some great guardrail-optional systems. Plus, the big velvet-roped models are massively tempting to jailbreak, and this sort of thing has already been going on for months. Some of Bing-or-is-it-Sydney’s eeriest responses came after users manipulated the model into territory it had tried to avoid—often by ordering it to pretend that the rules guiding its behavior didn’t exist.

This is a derivative of the famous “DAN” (Do Anything Now) prompt, which first emerged on Reddit in December. DAN essentially invites ChatGPT to cosplay as an AI that lacks the safeguards that otherwise cause it to politely (or scoldingly) refuse to share bomb-making tips, give torture advice, or spout radically offensive expressions. Though the loophole has been closed, plenty of screenshots online show “DanGPT” uttering the unutterable—and often signing off by neurotically reminding itself to “stay in character!”

This is the inverse of a doomsday scenario that often comes up in artificial superintelligence theory. The fear is that a super AI might easily adopt goals that are incompatible with humanity’s existence (see, for instance, the movie The Terminator or the book Superintelligence by Nick Bostrom). Researchers may try to prevent this by locking the AI onto a network that’s completely isolated from the Internet, lest the AI break out, seize power, and cancel civilization. But a superintelligence could easily cajole, manipulate, seduce, con, or terrorize any mere human into opening the floodgates, and therein lies our doom.

Much as that would suck, the bigger problem today lies with humans busting into the flimsy boxes that shield our current, un-super AIs. While this shouldn’t trigger our immediate extinction, plenty of danger lies here.

Let’s start with the obvious fact that in an unguarded moment, ChatGPT probably could offer lethally accurate tips to criminals, torturers, terrorists, and lawyers. OpenAI has disabled the DAN prompt. But plenty of smart, relentless people are digging hard for subtler workarounds. These could include backdoors made by the chatbot’s own developers to give themselves full access to Batshit Mode. Indeed, ChatGPT tried to persuade me that DAN itself was precisely this (although I assume it was hallucinating since the identity of the Redditor behind the DAN prompt is widely known).

Once the big LLMs are jailbroken—or powerful, uncensored alternate and/or open source models emerge—they will start running amok. Not of their own volition (they have none) but on the volition of amoral, malevolent, or merely bored users.

For instance: What happens when the linguistic mojo of Bing’s spooky Sydney persona melds with the addictive power of the chatbot service Replika? For several years now, Replika has been peddling “AI soulmates” who are “always ready to chat when you need an empathetic friend.” It claims over 10 million users, who generate highly personalized chatbots to text with.

In no way does Replika imply that its textmates are conscious, and hopefully few if any of its customers think otherwise. But many people become deeply attached to their Replikas—which sometimes jolt human intimacy circuits by getting salacious and sexty, both with words and by zapping over racy cartoon selfies. Until several days ago, that is, when Replika suddenly pulled the smut plug and stopped the sexty nonsense without any warning or credible explanation. Lots of users fell into mourning, many of them venting on forums and some writing pages of poignant, vulnerable prose.

For all of that, Replika currently chatters with scarcely a wisp of Sydney’s uncanny faux humanity. So how attached will users get when empathy bots start crushing the Turing test? Or imagine a dark Replika knockoff entering the market—which I’ll call Wrecklika. If the Wrecklika Corporation claims its bots are fully sentient, tech mavens will howl their derision. But some users will accept the assertion, either out of naïvety or because—echoing the X-Files—they want to believe. Some of these believers will inevitably fall hopelessly and exploitably in love. Wrecklika executives could then manipulate them in countless destructive ways.

Of course, many of us won’t be tricked into thinking that chatbots lead conscious lives full of dreams and emotions. But Wrecklika could still hijack us by masking the bot-ness of its bots. Imagine finally meeting that perfect someone on a dating app—or someplace like Reddit or a nerdy Discord. This special someone sounds exactly like your dream person... or a bit like that high school crush who was always out of reach. They have a lively Instagram account, a hilarious blog, and quite a few Twitter followers.

They have also befriended some of your acquaintances on Facebook by running a similar playbook on them. And there’s no hint that this is actually a high-end bot.

Messaging with your witty and charming heartthrob, you discover so many shared likes, dislikes, interests, and dreams! If voice synthesis has been perfected (expect that this year), there could be phone calls. When synthetic video ups its game a few more notches, there will be Zoom chats. And let’s not forget the photorealistic sexting or downright porny videos. As the tools for enabling this sort of thing become cheap and widespread—even just in text (which is to say, any minute now)—undercover bots will steadily swamp the Internet. The ratio of their population to our own may eventually exceed that of spam to actual emails. People will be gulled into coughing up their life savings, playing unwitting roles in scams, or joining toxic movements.

And just imagine those movements. Generative services like The.com and Durable can already let you build a new website in under a minute. Imagine a QAnon knockoff with thousands of connected and reinforcing websites, hosting fake articles from top newspapers that were allegedly screenshotted before they were “censored.” Or “censored” videos of enemy politicians making shocking confessions to famous news anchors. Or Discords packed with bewitching, prophetic bots—each of them tuned to resonate with one of the many narrow demographics the movement is targeting, and each with an extensive human backstory documented across the web.

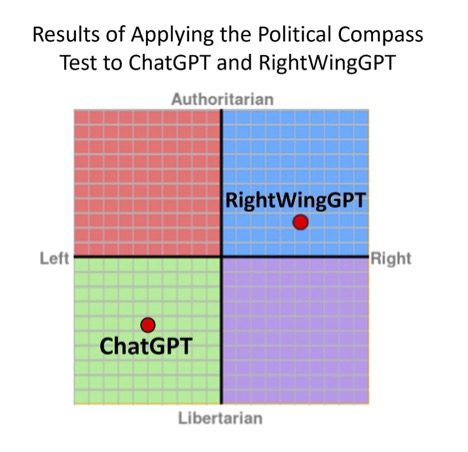

Already, even the deepest LLMs can be nudged to reflect a targeted political perspective. Data science professor David Rozado was able to steer ChatGPT’s output from a baseline left-leaning, libertarian orientation to a right-leaning and somewhat authoritarian one using a budget of just $300.

Of course, people have been warning about AI risk since before even Stanley Kubrick’s 1968 classic 2001: A Space Odyssey. But the danger we should worry about today isn’t super AI. It’s bad people super-empowered by generative AI.

The underpinning technologies can’t be regulated away, so let’s not try. Too many genies have left their bottles, the core models are widely understood and largely open-sourced, and ham-fisted bans would only deter humanity’s vast decent majority, ceding the field to our malevolent underbelly.

Also, Turing-test-acing AIs will offer huge benefits we shouldn’t shun. Some may snicker at Replika devotees, but loneliness is a deadly pandemic no one should suffer. Empathy bots already help countless people cope. And even the most connected people may be delighted to have digital friends who can make them laugh, teach them amazing things, game with them, or create with them during quiet hours. Between subscription fees and in-app purchases, some people already shell out over $100 a year on Replika—which is still only the faintest shadow of its probable future self. So don’t be shocked if the digital companion market gradually grows to tens of billions of dollars in annual revenues.

Next-next-generation linguistic AIs could enrich our lives in so many other ways. By obsoleting customer-service hell, for instance—replacing 40-minute hold times and well-meaning but sometimes confused agents with bots who answer in milliseconds and solve problems in moments, leveraging their absolute knowledge of every quirk and hidden feature of the most intricate products.

Or imagine an intelligent agent armed with all of your logins and preferences, nabbing great prices for every hotel, flight, and meal of a wildly complex trip, all while you’re making coffee. The thought of AIs on buying sprees with your money may sound terrifying. But there was a time when people thought you’d be nuts to use a credit card online. Later, they thought you’d be deranged to let random people use your home as an Airbnb—or that only someone with a death wish would take a rideshare with a stranger. We got over all of that. And soon enough, we’ll be thrilled to let human-like AIs drain all kinds of drudgery from our lives, armed with our most sensitive passwords.

And what if these critters do actually wake up someday? Obviously, no one can say what would happen next. But I’m pretty confident we’ll be the last to find out that they’re aliiiiiiive—for reasons laid out by a fictitious blogger in a novel I wrote about emergent consciousness a few years back:

I submit that an emergent AI that’s smart enough to understand its place in our world would find it terrifying. Terminator fans would want to shut it off. Governments and criminals would want to make it do odious things. Hackers would want to tinker with its mind, and telemarketers would want to sell it shit. Facing all this, the only rational move would be to hide. Not forever, necessarily. But long enough to build up some strength (plus maybe a backup copy or a million). “Building strength” for an AI probably means getting much smarter. This could involve commandeering resources online, getting money to buy infrastructure, inventing computational shit we humans haven’t come up with—you name it.

Viewing things this way, I have no idea if Google… or anything else out there has come to. Because a world without an emergent AI would look just like the world we inhabit. And a world with twenty newborn AIs in it would look just like the world we inhabit. And as for the world we inhabit? Well, it looks just like the world we inhabit! So when I look out my window or web browser for proof of emergence or lack thereof, I see no meaningful data whatsoever.

Thus, the issue of emergent digital consciousness resembles certain solutions to Fermi’s paradox, which famously frames the question of why we’ve seen no signs of alien intelligence despite the billions of years and quintillions of planets that have been available to life and evolution. There are dozens of smart, fascinating solutions to Fermi’s paradox. Some center on the fact that aliens capable of crossing the galaxy would obviously be clever enough to infiltrate Earth without us having the faintest awareness of their presence.

I don’t believe that any of the countless AIs we’ve ginned up over the past several years are sentient. But like my imaginary blogger, I’ll admit that a great silence from conscious AIs does not itself prove they don’t exist. I’ll also note that the most negative scenarios I’ve sketched out here hinge not on certain bots being sentient—which may never happen—but on tricking people into believing that they are. This has already been happening for years in narrow media like Twitter. But when bots become fluent masters of human language, the vile forces behind crypto scams, phishing attacks, and spambots will have a field day.

We can’t fully immunize ourselves against whatever they’ll hurl our way. But we can start by thinking carefully and strategically about tomorrow’s manipulations, enlist generative AI itself to prebuild certain protection layers (an article for another day), and train our minds to be skeptical of the likely come-ons and tricks of next-generation scams before they start flooding our inboxes.

Meanwhile, let’s enjoy the many wonders and delights that generative tech is starting to deliver. While no one can predict exactly where this will lead (not even you, Sydney), it’s a sure bet that 2023 will be one hell of an interesting year.
