New approach punishes AI companies that ignore "no crawl" directives.

On Wednesday, web infrastructure provider Cloudflare announced a new feature called "AI Labyrinth" that aims to combat unauthorized AI data scraping by serving fake AI-generated content to bots. The tool will attempt to thwart AI companies that crawl websites without permission to collect training data for large language models that power AI assistants like ChatGPT.

Cloudflare, founded in 2009, is probably best known as a company that provides infrastructure and security services for websites, particularly protection against distributed denial-of-service (DDoS) attacks and other malicious traffic.

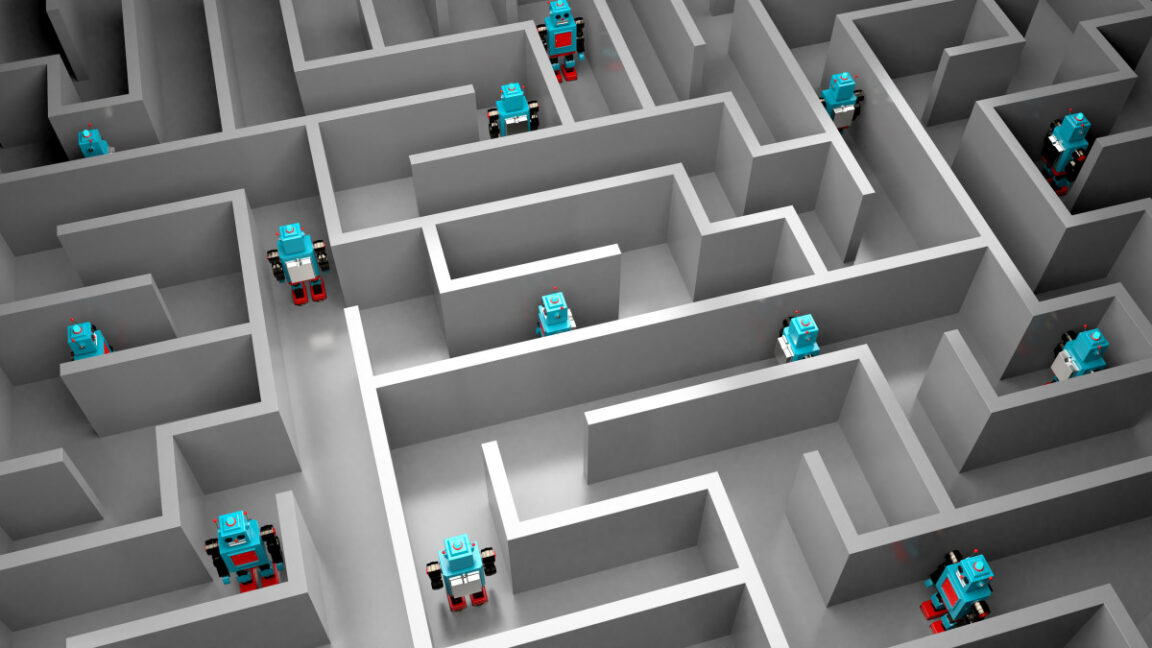

Instead of simply blocking bots, Cloudflare's new system lures them into a "maze" of realistic-looking but irrelevant pages, wasting the crawler's computing resources. The approach is a notable shift from the standard block-and-defend strategy used by most website protection services. Cloudflare says blocking bots sometimes backfires because it alerts the crawler's operators that they've been detected.

"When we detect unauthorized crawling, rather than blocking the request, we will link to a series of AI-generated pages that are convincing enough to entice a crawler to traverse them," writes Cloudflare. "But while real looking, this content is not actually the content of the site we are protecting, so the crawler wastes time and resources."

The company says the content served to bots is deliberately irrelevant to the website being crawled, but it is carefully sourced or generated using real scientific facts—such as neutral information about biology, physics, or mathematics—to avoid spreading misinformation (whether this approach effectively prevents misinformation, however, remains unproven). Cloudflare creates this content using its Workers AI service, a commercial platform that runs AI tasks.

Cloudflare designed the trap pages and links to remain invisible and inaccessible to regular visitors, so people browsing the web don't run into them by accident.

A smarter honeypot

AI Labyrinth functions as what Cloudflare calls a "next-generation honeypot." Traditional honeypots are invisible links that human visitors can't see but bots parsing HTML code might follow. But Cloudflare says modern bots have become adept at spotting these simple traps, necessitating more sophisticated deception. The false links contain appropriate meta directives to prevent search engine indexing while remaining attractive to data-scraping bots.
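To make the honeypot idea concrete, here is a minimal Python sketch of a decoy page that combines the two ingredients described above: a robots meta directive asking search engines not to index the page, and links hidden from human visitors. This is not Cloudflare's implementation. The function name `build_trap_page`, the `/maze/` paths, and the inline-style hiding are all assumptions made for illustration.

```python
from html import escape


def build_trap_page(title: str, body_text: str, next_paths: list[str]) -> str:
    """Render a decoy page (hypothetical helper, not Cloudflare's code)."""
    # Links are hidden from people and assistive tech, but still present
    # in the HTML that a scraper parses.
    hidden_links = "\n".join(
        f'  <a href="{escape(path, quote=True)}" style="display:none" '
        f'aria-hidden="true" tabindex="-1">Read more</a>'
        for path in next_paths
    )
    return (
        "<!DOCTYPE html>\n"
        "<html>\n<head>\n"
        # Well-behaved search engines honor this and skip the page.
        '  <meta name="robots" content="noindex, nofollow">\n'
        f"  <title>{escape(title)}</title>\n"
        "</head>\n<body>\n"
        f"  <p>{escape(body_text)}</p>\n"
        f"{hidden_links}\n"
        "</body>\n</html>\n"
    )


page = build_trap_page(
    "Notes on cell biology",
    "Mitochondria produce most of a cell's ATP.",
    ["/maze/a1", "/maze/b2"],  # assumed decoy URLs
)
print(page)
```

A crawler that ignores the `noindex, nofollow` directive and follows the hidden links anyway reveals itself by doing so, which is the signal a honeypot relies on.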

"No real human would go four links deep into a maze of AI-generated nonsense," Cloudflare explains. "Any visitor that does is very likely to be a bot, so this gives us a brand-new tool to identify and fingerprint bad bots."

This identification feeds into a machine learning feedback loop—data gathered from AI Labyrinth is used to continuously enhance bot detection across Cloudflare's network, improving customer protection over time. Customers on any Cloudflare plan—even the free tier—can enable the feature with a single toggle in their dashboard settings.
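The "four links deep" heuristic quoted above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `/maze/` URL prefix, the `MazeDepthTracker` class, and the use of a simple per-client counter as a stand-in for link depth are all hypothetical, and Cloudflare has not published how its detection actually works.

```python
from collections import defaultdict

MAZE_PREFIX = "/maze/"   # assumed URL prefix for decoy pages
DEPTH_THRESHOLD = 4      # "four links deep", per Cloudflare's quote


class MazeDepthTracker:
    """Count decoy-page requests per client and flag likely bots."""

    def __init__(self, threshold: int = DEPTH_THRESHOLD) -> None:
        self.threshold = threshold
        self._depth: defaultdict[str, int] = defaultdict(int)

    def record(self, client_id: str, path: str) -> bool:
        """Record one request; return True once the client looks like a bot."""
        if path.startswith(MAZE_PREFIX):
            self._depth[client_id] += 1
        # .get avoids creating entries for clients that never touch the maze
        return self._depth.get(client_id, 0) >= self.threshold


tracker = MazeDepthTracker()
flags = [tracker.record("crawler-1", f"/maze/page{i}") for i in range(4)]
human = tracker.record("visitor-1", "/about")
print(flags, human)
```

Counting decoy requests only approximates depth. A more faithful version would follow the chain of referring pages, and a production system would also need to expire old entries.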

A growing problem

Cloudflare's AI Labyrinth joins a growing field of tools designed to counter aggressive AI web crawling. In January, we reported on "Nepenthes," software that similarly lures AI crawlers into mazes of fake content. Both approaches share the core concept of wasting crawler resources rather than simply blocking them. However, while Nepenthes' anonymous creator described it as "aggressive malware" meant to trap bots for months, Cloudflare positions its tool as a legitimate security feature that can be enabled easily on its commercial service.

The scale of AI crawling on the web appears substantial, according to Cloudflare's data that lines up with anecdotal reports we've heard from sources. The company says that AI crawlers generate more than 50 billion requests to its network daily, amounting to nearly 1 percent of all web traffic it processes. Many of these crawlers collect website data to train large language models without permission from site owners, a practice that has sparked numerous lawsuits from content creators and publishers.
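For context, "no crawl" directives are commonly expressed in a site's robots.txt file, and a crawler that wants to respect them can check before fetching a page. The sketch below uses Python's standard `urllib.robotparser` module. The robots.txt content, the `ExampleAIBot` user agent, and the `may_crawl` helper are made up for illustration and do not describe any real crawler.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block one AI crawler, allow everyone else.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Allow: /
"""


def may_crawl(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


blocked = may_crawl(ROBOTS_TXT, "ExampleAIBot", "https://example.com/article")
allowed = may_crawl(ROBOTS_TXT, "SomeOtherAgent", "https://example.com/article")
print(blocked, allowed)
```

Nothing enforces this check. Compliance is voluntary, which is why tools that act on crawlers ignoring the directives exist at all.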

The technique represents an interesting defensive application of AI, protecting website owners and creators rather than threatening their intellectual property. However, it's unclear how quickly AI crawlers might adapt to detect and avoid such traps, potentially forcing Cloudflare to increase the complexity of its deception tactics. Also, wasting AI company resources might not please people who are critical of the perceived energy and environmental costs of running AI models.

Cloudflare describes this as just "the first iteration" of using AI defensively against bots. Future plans include making the fake content harder to detect and integrating the fake pages more seamlessly into website structures. The cat-and-mouse game between websites and data scrapers continues, with AI now being used on both sides of the battle.
