In patients undergoing echocardiographic evaluation of cardiac function, preliminary assessment by artificial intelligence (AI) is superior to initial sonographer assessment, according to late-breaking research presented in a Hot Line session on 27 August at ESC Congress 2022.

Dr. David Ouyang of the Smidt Heart Institute at Cedars-Sinai, Los Angeles, U.S. said, "There has been much excitement about the use of AI in medicine, but the technologies are rarely assessed in prospective clinical trials. We previously developed one of the first AI technologies to assess cardiac function (left ventricular ejection fraction; LVEF) in echocardiograms and in this blinded, randomized trial, we compared it head to head with sonographer tracings. This trial was powered to show non-inferiority of the AI compared to sonographer tracings, and so we were pleasantly surprised when the results actually showed superiority with respect to the pre-specified outcomes."

Accurate assessment of LVEF is essential for diagnosing cardiovascular disease and making treatment decisions. Human assessment is often based on a small number of cardiac cycles, which can result in high inter-observer variability. EchoNet-Dynamic is a deep learning algorithm that was trained on echocardiogram videos to assess cardiac function and was previously shown to assess LVEF with a mean absolute error of 4.1–6.0%.[2] The algorithm uses information across multiple cardiac cycles to minimize error and produce consistent results.
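LVEF itself is a simple ratio: the share of the end-diastolic volume (EDV) that is ejected by the time of end-systole (ESV), i.e. (EDV − ESV) / EDV × 100. The Python sketch below illustrates only the general idea of pooling several cardiac cycles by averaging per-beat estimates. The function names and volumes are hypothetical, and this is not EchoNet-Dynamic's implementation, which the source describes as a deep learning model trained on echocardiogram video rather than a volume calculator.

```python
from statistics import mean


def ejection_fraction(edv_ml: float, esv_ml: float) -> float:
    """LVEF in percent from end-diastolic and end-systolic volumes (mL)."""
    if edv_ml <= 0:
        raise ValueError("end-diastolic volume must be positive")
    return (edv_ml - esv_ml) / edv_ml * 100.0


def multi_beat_ef(beats: list[tuple[float, float]]) -> float:
    """Average per-beat LVEF over several cardiac cycles.

    `beats` is a list of (EDV, ESV) pairs, one per cycle (hypothetical
    input format). Averaging damps beat-to-beat noise that an estimate
    from a single beat would carry.
    """
    if not beats:
        raise ValueError("need at least one beat")
    return mean(ejection_fraction(edv, esv) for edv, esv in beats)


# Hypothetical volumes (mL) for three beats of the same recording.
beats = [(120.0, 50.0), (118.0, 52.0), (122.0, 49.0)]
print(round(multi_beat_ef(beats), 1))
```

Averaging is the simplest pooling choice; it treats every beat equally, whereas a real system might also discard poor-quality beats.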

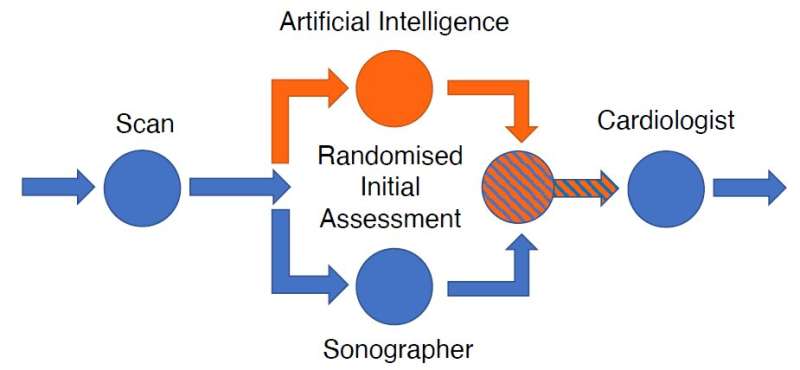

EchoNet-RCT tested whether AI or sonographer assessment of LVEF is more frequently adjusted by a reviewing cardiologist. The standard clinical workflow for determining LVEF by echocardiography is that a sonographer scans the patient; the sonographer provides an initial assessment of LVEF; and then a cardiologist reviews the assessment to provide a final report of LVEF. In this clinical trial, the sonographer's scan was randomly allocated 1:1 to AI initial assessment or sonographer initial assessment, after which blinded cardiologists reviewed the assessment and provided a final report of LVEF (see figure).

The researchers compared how much cardiologists changed the initial AI assessment with how much they changed the initial sonographer assessment. The primary endpoint was the frequency of a greater than 5% change in LVEF between the initial assessment (AI or sonographer) and the final cardiologist report. The trial was designed to test for noninferiority, with a secondary objective of testing for superiority.
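The primary endpoint reduces to a simple count per study arm. A minimal Python sketch, assuming "a greater than 5% change" means an absolute difference of more than 5 LVEF percentage points between the initial and final values (the article does not say whether the change is absolute or relative); the function name and sample values are hypothetical.

```python
def substantial_change_rate(initial: list[float], final: list[float],
                            threshold: float = 5.0) -> float:
    """Share of studies whose final LVEF differs from the initial
    assessment by more than `threshold` percentage points (assumed
    absolute difference)."""
    if not initial or len(initial) != len(final):
        raise ValueError("need two non-empty lists of equal length")
    changed = sum(abs(f - i) > threshold for i, f in zip(initial, final))
    return changed / len(initial)


# Hypothetical initial vs. final LVEF (%) for four studies.
initial = [55.0, 40.0, 62.0, 30.0]
final = [56.0, 47.0, 62.0, 24.0]
print(substantial_change_rate(initial, final))  # → 0.5 (2 of 4 exceed 5 points)
```

Computing this rate separately for the AI arm and the sonographer arm gives the two proportions that the trial compared.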

The study included 3,495 transthoracic echocardiograms performed on adults for any clinical indication. The proportion of studies substantially changed (a change in LVEF of more than 5%) was 16.8% in the AI group and 27.2% in the sonographer group (difference -10.4%, 95% confidence interval [CI] -13.2% to -7.7%, p<0.001 for noninferiority, p<0.001 for superiority).

The safety endpoint was the difference between the final cardiologist report and a historical cardiologist report. The mean absolute difference was 6.29% in the AI group and 7.23% in the sonographer group (difference -0.96%, 95% CI -1.34% to -0.54%, p<0.001 for superiority).
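For readers curious how a confidence interval for a difference between two proportions is commonly formed, here is a textbook Wald interval in Python. It is an illustration only: the article does not say which method the investigators used, the noninferiority margin is not given in this report (so `margin` is a hypothetical parameter), and the counts in the usage line are made up rather than taken from the trial.

```python
from math import sqrt


def diff_in_proportions_ci(x1: int, n1: int, x2: int, n2: int,
                           z: float = 1.96) -> tuple[float, float, float]:
    """Difference p1 - p2 with a Wald confidence interval (z=1.96 gives ~95%)."""
    p1, p2 = x1 / n1, x2 / n2
    diff = p1 - p2
    se = sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)
    return diff, diff - z * se, diff + z * se


def classify(upper: float, margin: float) -> str:
    """Read the CI's upper bound when a lower proportion is better.

    `margin` is a hypothetical noninferiority margin: the largest
    positive difference in proportions that would still be acceptable.
    """
    if upper < 0:
        return "superior"
    if upper < margin:
        return "noninferior"
    return "noninferiority not shown"


# Made-up counts: 20/100 changed in group 1 vs 30/100 in group 2.
diff, lo, hi = diff_in_proportions_ci(20, 100, 30, 100)
print(f"{diff:.3f} ({lo:.3f} to {hi:.3f})", classify(hi, margin=0.05))
```

Checking the upper bound against zero and against the margin mirrors the two-step logic described in the article: noninferiority first, then superiority.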

Dr. Ouyang said, "We learned a lot from running a randomized trial of an AI algorithm, which hasn't been done before in cardiology. First, we learned that this type of trial is highly feasible in the right setting, where the AI algorithm can be integrated into the usual clinical workflow in a blinded fashion.

"Second, we learned that blinding really can work well in this situation. We asked our cardiologist over-readers to guess if they thought the tracing they had just reviewed was performed by AI or by a sonographer, and it turns out that they couldn't tell the difference, which both speaks to the strong performance of the AI algorithm as well as the seamless integration into clinical software. We believe these are all good signs for future trial research in the field."

He concluded, "We are excited by the implications of the trial. What this means for the future is that certain AI algorithms, if developed and integrated in the right way, could be very effective at not only improving the quality of echo reading output but also increasing efficiencies in time and effort spent by sonographers and cardiologists by simplifying otherwise tedious but important tasks. Embedding AI into clinical workflows could potentially provide more precise and consistent evaluations, thereby enabling earlier detection of clinical deterioration or response to treatment."

- Karlston
