AMD CEO Lisa Su on the MI325X: "This is the beginning, not the end of the AI race."

On Thursday, AMD announced its new MI325X AI accelerator chip, which is set to roll out to data center customers in the fourth quarter of this year. At an event hosted in San Francisco, the company claimed the new chip offers "industry-leading" performance compared to Nvidia's current H200 GPUs, which are widely used in data centers to power AI applications such as ChatGPT.

With its new chip, AMD hopes to narrow the performance gap with Nvidia in the AI processor market. The Santa Clara-based company also revealed plans for its next-generation MI350 chip, which is positioned as a head-to-head competitor to Nvidia's new Blackwell system and is expected to ship in the second half of 2025.

In an interview with the Financial Times, AMD CEO Lisa Su expressed her ambition for AMD to become the "end-to-end" AI leader over the next decade. "This is the beginning, not the end of the AI race," she told the publication.

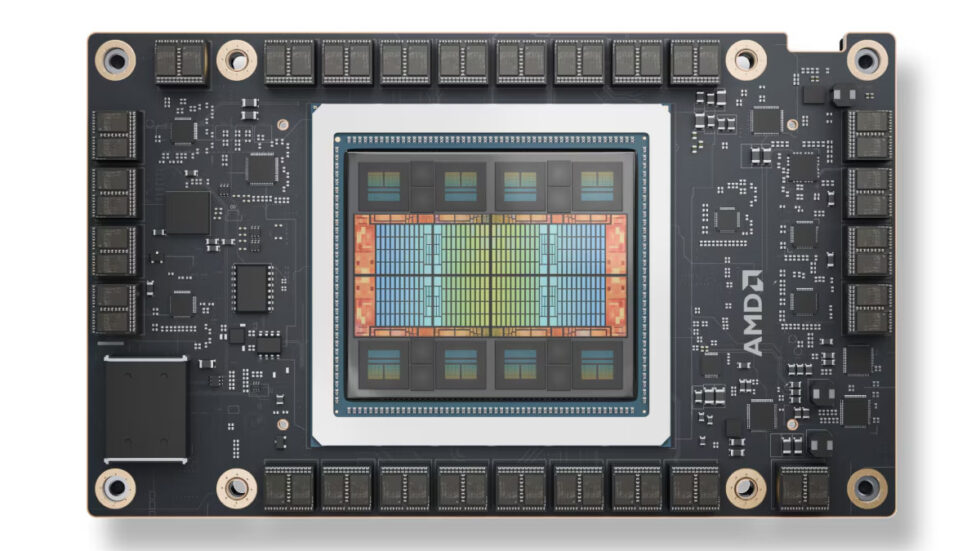

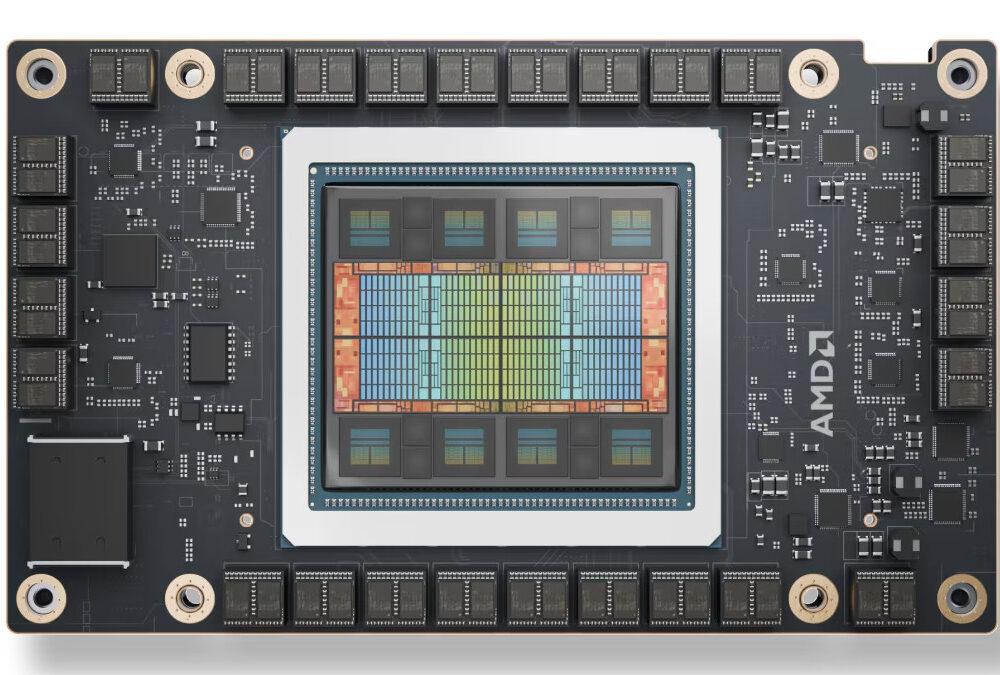

According to AMD's website, the MI325X accelerator contains 153 billion transistors and is built on the CDNA3 GPU architecture using TSMC's 5 nm and 6 nm FinFET processes. The chip includes 19,456 stream processors and 1,216 matrix cores spread across 304 compute units. With a peak engine clock of 2,100 MHz, the MI325X delivers up to 2.61 PFLOPS of peak 8-bit floating-point (FP8) performance and 1.3 PFLOPS for half-precision (FP16) operations.
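The peak figures above follow from a simple product: compute units × clock speed × operations per compute unit per clock. The sketch below reproduces them under one loudly stated assumption: the per-compute-unit FLOPs-per-clock values (2,048 for FP16, 4,096 for FP8) are not quoted in this article but are back-derived here from AMD's published dense (non-sparse) peaks. The constant and function names are purely illustrative.

```python
# Illustrative sketch: reproduce the MI325X peak-throughput figures from the
# spec numbers in the article. ASSUMPTION: the FLOPs-per-CU-per-clock values
# below are back-derived from AMD's published dense peaks, not quoted by AMD.

COMPUTE_UNITS = 304
STREAM_PROCESSORS = 19_456
MATRIX_CORES = 1_216
PEAK_CLOCK_HZ = 2.1e9  # 2,100 MHz peak engine clock

# Assumed dense matrix FLOPs per compute unit per clock cycle (hypothetical).
FLOPS_PER_CU_PER_CLOCK = {"FP16": 2_048, "FP8": 4_096}


def peak_pflops(precision: str) -> float:
    """Peak dense throughput in PFLOPS: CUs x clock x FLOPs per CU per clock."""
    flops = COMPUTE_UNITS * PEAK_CLOCK_HZ * FLOPS_PER_CU_PER_CLOCK[precision]
    return flops / 1e15


if __name__ == "__main__":
    # The per-CU resource counts divide evenly across the 304 compute units.
    print("Stream processors per CU:", STREAM_PROCESSORS // COMPUTE_UNITS)  # 64
    print("Matrix cores per CU:", MATRIX_CORES // COMPUTE_UNITS)  # 4
    for p in ("FP8", "FP16"):
        print(f"{p}: {peak_pflops(p):.2f} PFLOPS")
```

Because FP8 values are half the width of FP16 values, the matrix cores can process twice as many per clock, which is why the FP8 peak is double the FP16 one.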

A fraction of Nvidia’s AI market share

The announcement comes as Nvidia's customers prepare to deploy its Blackwell chips in the current quarter. Microsoft has already become the first cloud provider to offer Nvidia's latest GB200 chips, which pair two B200 Blackwell GPUs with a "Grace" CPU.

While AMD has emerged as Nvidia's closest competitor in off-the-shelf AI chips, it still lags far behind in market share, according to the Financial Times. AMD projects $4.5 billion in AI chip sales for all of 2024, a fraction of the $26.3 billion in AI data center chip sales Nvidia reported for the single quarter ending in July. Even so, AMD has already secured Microsoft and Meta as customers for its current generation of MI300 AI GPUs, and Amazon may follow.

The company's recent focus on AI marks a shift from its traditional PC-oriented business, which includes consumer graphics cards, but Su remains optimistic about future demand for AI data center GPUs. AMD predicts the total addressable market for AI chips will reach $400 billion by 2027.
