When AI hype hit fever pitch—and a market leader nearly tore itself apart.

"Here, There, and Everywhere" isn't just a Beatles song. It's also a phrase that recalls the spread of generative AI into the tech industry during 2023. Whether you think AI is just a fad or the dawn of a new tech revolution, it's been impossible to deny that AI news has dominated the tech space for the past year.

We've seen a large cast of AI-related characters emerge that includes tech CEOs, machine learning researchers, and AI ethicists—as well as charlatans and doomsayers. From public feedback on the subject of AI, we've heard that it's been difficult for non-technical people to know who to believe, what AI products (if any) to use, and whether we should fear for our lives or our jobs.

Meanwhile, in keeping with a much-lamented trend of 2022, machine learning research has not slowed down over the past year. On X, former Biden administration tech advisor Suresh Venkatasubramanian wrote, "How do people manage to keep track of ML papers? This is not a request for support in my current state of bewilderment—I'm genuinely asking what strategies seem to work to read (or "read") what appear to be 100s of papers per day."

To wrap up the year with a tidy bow, here's a look back at the 10 biggest AI news stories of 2023. It was very hard to choose only 10 (in fact, we originally only intended to do seven), but since we're not ChatGPT generating reams of text without limit, we have to stop somewhere.

Bing Chat “loses its mind”

Aurich Lawson | Getty Images

In February, Microsoft unveiled Bing Chat, a chatbot built into its languishing Bing search engine website. Microsoft created the chatbot using a more raw form of OpenAI's GPT-4 language model but didn't tell everyone it was GPT-4 at first. Since Microsoft used a less conditioned version of GPT-4 than the one that would be released in March, the launch was rough. The chatbot assumed a temperamental personality that could easily turn on users and attack them, tell people it was in love with them, seemingly worry about its fate, and lose its cool when confronted with an article we wrote about revealing its system prompt.

Aside from the relatively raw nature of the AI model Microsoft was using, at fault was a system where very long conversations would push the conditioning system prompt outside of its context window (like a form of short-term memory), allowing all hell to break loose through jailbreaks that people documented on Reddit. At one point, Bing Chat called me "the culprit and the enemy" for revealing some of its weaknesses. Some people thought Bing Chat was sentient, despite AI experts' assurances to the contrary. It was a disaster in the press, but Microsoft didn't flinch, and it ultimately reined in some of Bing Chat's wild proclivities and opened the bot widely to the public. Today, Bing Chat is known as Microsoft Copilot, and it's baked into Windows.

US Copyright Office says no to AI copyright authors

An AI-generated image that won a prize at the Colorado State Fair in 2022, later denied US copyright registration.

Jason M. Allen

In February, the US Copyright Office issued a key ruling on AI-generated art, revoking the copyright registration that had been granted in September 2022 to the AI-assisted comic book "Zarya of the Dawn." The decision, influenced by the revelation that the images were created using the AI-powered Midjourney image generator, stated that only the text and the arrangement of images and text by author Kris Kashtanova were eligible for copyright protection. It was the first hint that AI-generated imagery without human-authored elements could not be copyrighted in the United States.

This stance was further cemented in August when a US federal judge ruled that art created solely by AI cannot be copyrighted. In September, the US Copyright Office rejected the registration for an AI-generated image that won a Colorado State Fair art contest in 2022. As it stands now, it appears that purely AI-generated art (without substantial human authorship) is in the public domain in the United States. This stance could be further clarified or changed in the future by judicial rulings or legislation.

The rise of Meta’s LLaMA and its open weights direction

Getty Images | Benj Edwards

On February 24, Meta released LLaMA, a family of large language models available in different sizes (parameter counts) that kick-started an open-weights large language model (LLM) movement. People soon took things into their own hands when they leaked LLaMA's weights (crucial neural network files that had previously only been provided to academics) onto BitTorrent. Soon, researchers began fine-tuning LLaMA and building off of it, competing over who could build the most capable model that could run locally on non-data-center computers. In tandem, Meta's Yann LeCun quickly became a vocal proponent of open AI models.

In July, Meta launched Llama 2, an even more capable LLM, and this time, they let everyone have the weights. Code Llama followed in August, fine-tuned for coding tasks. But Meta wasn't alone in releasing "open" AI models: You've probably also heard of Dolly, Falcon 180B, Mistral 7B, and a few others, all of which continued the tradition of releasing weights so others could fine-tune them for performance improvements. And in early December, Mixtral 8x7B reportedly matched GPT-3.5 in capability, which was a landmark achievement for a relatively small and fast AI language model. Clearly, companies with closed approaches such as OpenAI (ironically), Google, and Anthropic are going to have a run for their money in the coming year.

GPT-4 launches and scares the world for months

A screenshot of GPT-4's introduction to ChatGPT Plus customers from March 14, 2023.

Benj Edwards / Ars Technica

On March 14, OpenAI released its GPT-4 large language model with claims that it "exhibit[ed] human-level performance on various professional and academic benchmarks" and a specification document (model card) that described attempts by researchers to get a raw version of GPT-4 to play out AI takeover scenarios. That set the doom ball rolling. On March 29, the Future of Life Institute published an open letter signed by Elon Musk calling for a six-month pause in the development of AI models more powerful than GPT-4. That same day, Time published an editorial by LessWrong founder Eliezer Yudkowsky advocating that countries should be willing to "destroy a rogue datacenter by airstrike" if they are seen building up a GPU cluster that could train a dangerous AI model because otherwise, "literally everyone on Earth will die" at the hands of a superhuman AI entity.

It was hype factor 11, and the doom kept rolling. In April, President Biden gave brief remarks about the risks of AI. Later that month, a trio of US congressmen announced legislation that proposed keeping AI from ever being able to launch nuclear weapons. In May, Geoffrey Hinton resigned from Google so he could "speak freely" about potential risks posed by AI. On May 4, Biden met with tech CEOs about AI at the White House. OpenAI CEO Sam Altman began a worldwide tour, including a stop at the US Senate, to warn about the dangers of AI and advocate for regulation. And to cap it all off, OpenAI executives signed a brief statement warning that AI could extinguish humanity. Eventually, the fear and hype began to settle down, but there's still a contingent of people (many linked to Effective Altruism) who are convinced that a theoretical superhuman AI is an existential threat to all of humanity, bringing a bubbling undercurrent of anxiety to every AI advancement.

AI art generators remain controversial but continue to grow in capability

An example of lighting and skin effects in the AI image-generator Midjourney v5.

Julie W. Design

2023 was a big year for leaps in capability from image synthesis models. In March, Midjourney achieved a notable jump in the photorealism of its AI-generated images with version 5 of its AI image synthesis model, rendering convincing people with five-fingered hands. Throughout the year, Midjourney reliably invoked disgust from critics of AI artwork, but it also inspired experimentation (and a bit of deception) from people who embraced the technology. And the pace of change didn't stop, with v5.1 coming in May and v5.2 launching in June, each adding new features and detail. Today, Midjourney is testing a standalone interface that does not require Discord to function, and Midjourney v6 is expected to launch sometime in late December.

Also in March, we saw the launch of Adobe Firefly, an AI image generator that Adobe says is trained solely on public domain works and images found in its Adobe Stock archive. By late May, Adobe had integrated the technology into a beta version of its flagship Photoshop image editor with Generative Fill. In September, OpenAI's DALL-E 3 took prompt fidelity to a new level, raising interesting implications for artists in the near future.

AI deepfakes have a deeper impact

AI-generated photo faking Donald Trump's possible arrest, created by Eliot Higgins using Midjourney v5.

@EliotHiggins on Twitter

Throughout 2023, the wider implications of image, audio, and video generators began to take hold. Several controversies emerged, including fairly convincing AI-generated images of Donald Trump getting arrested and the Pope in a puffy jacket in March (but Will Smith eating spaghetti fooled no one). Also that month, news broke about a scam where people were mimicking the voices of people's loved ones using AI and routing it through telephone calls to ask for money.

And despite our feature about people being able to use social media photos to create deepfakes in December 2022, AI image generation tech led to warnings from the FBI about "sextortion" scams using fake video to blackmail people in June. In September, nearly all of the Attorneys General in the United States sent a letter to Congress warning about the potential for AI-generated CSAM. And just about a year after our warning, teens in New Jersey reportedly created AI-generated nudes of classmates in November. Even so, we're only beginning to deal with the fallout of the rapidly advancing capability to replicate any form of recorded media almost effortlessly using AI.

AI writing detectors promise results but don’t work

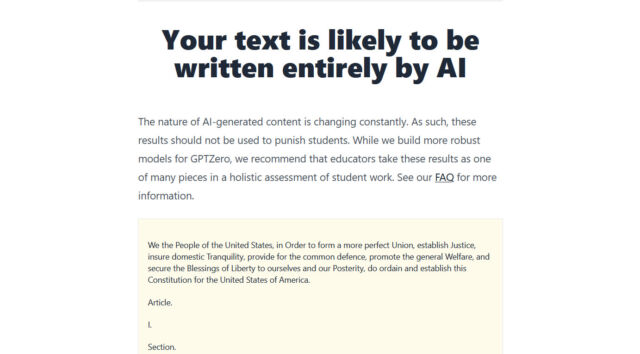

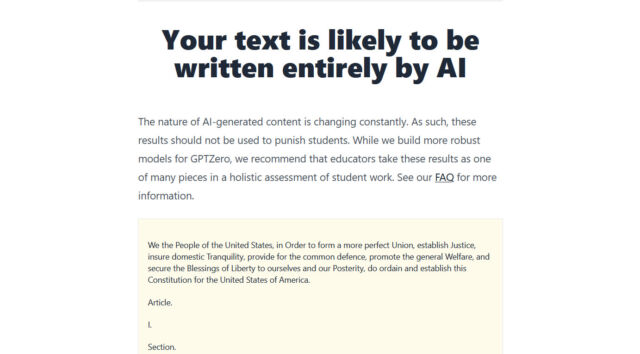

A viral screenshot from April 2023 showing GPTZero saying, "Your text is likely to be written entirely by AI" when fed part of the US Constitution.

Ars Technica

The emergence of ChatGPT led to an existential crisis for educators that rolled over into 2023, with teachers and professors worrying about synthetic text replacing human thought in class assignments. Companies quickly emerged to capitalize on these fears, promising tools that would be able to detect AI-written text. We soon began hearing stories of people being falsely accused of using ChatGPT to write their work when, in fact, everything had been human-written.

To date, no AI-writing detector is reliable enough to confirm or deny the existence of AI-generated text in a piece of writing. In July, we wrote a large feature explaining why this is the case, and not long after, OpenAI pulled its own AI-writing detector due to low rates of accuracy. By September, OpenAI stated that AI writing detectors don't work, writing in a FAQ, "While some (including OpenAI) have released tools that purport to detect AI-generated content, none have proven to reliably distinguish between AI-generated and human-generated content." Since then, the furor over AI detection has died down somewhat, but commercial tools claiming to detect AI-written work are still out there.

AI-generated “hallucinations” go mainstream

Aurich Lawson | Getty Images

In 2023, the concept of AI "hallucinations"—the propensity for some AI models to convincingly make stuff up—went mainstream thanks to large language models dominating the AI news this year. Hallucinations resulted in legal trouble: In April, Brian Hood sued OpenAI for defamation when ChatGPT falsely claimed that Hood had been convicted for a foreign bribery scandal (later settled). And in May, a lawyer who cited fake cases confabulated by ChatGPT got caught and later fined by a judge.

In April, we wrote a big feature about why this happens, but it didn't stop companies from releasing LLMs that confabulate anyway. In fact, Microsoft built one directly into Windows 11. By the end of the year, two dictionaries, Cambridge and Dictionary.com, named "hallucinate" their word of the year. We still prefer "confabulate," of course—and that AI-related definition wound up in the Cambridge Dictionary as well.

Google’s Bard “dances” to counter Microsoft and ChatGPT

Google

When ChatGPT launched in late November 2022, its immediate popularity caught everyone off guard, including OpenAI. As people began to murmur that ChatGPT could replace web searches, Google jumped into action in January 2023, hoping to counter this apparent threat to its search dominance. When Bing Chat launched in February, Microsoft CEO Satya Nadella said in an interview, "I want people to know that we made [Google] dance." It worked.

Google announced Bard in a botched demo in early February, then it launched Bard as a closed test in March, with a wide release in May. The company spent the rest of the year playing catch-up to OpenAI and Microsoft with revisions to Bard, the PaLM 2 language model in May, and Gemini in early December. The dance isn't over yet, but Microsoft definitely has Google's attention.

OpenAI fires Sam Altman (and he returns)

OpenAI CEO Sam Altman speaks during the OpenAI DevDay event on November 6, 2023, in San Francisco.

Getty Images | Ars Technica

On November 17, I was at a regular checkup talking to my doctor when my phone began repeatedly buzzing, almost nonstop. I apologized to the doctor and checked to see what was going on. OpenAI's nonprofit board of directors dropped a bombshell: It was firing its CEO Sam Altman, and everyone (my wife, mom, friends, and co-workers) was telling me about it. "It's a crazy world," the doctor said as I rushed home to write about the event, which Ars' Kyle Orland had already begun to cover in my stead. Confusing everyone, OpenAI's board did not disclose the exact reason for the surprise firing, only saying that Altman was "not consistently candid in his communications with the board."

Over that weekend, more details emerged, including the resignation of President Greg Brockman in solidarity and the role of OpenAI's Chief Scientist Ilya Sutskever in the firing process. Key investor Microsoft was furious, and Altman was soon in talks to return. He, along with 700-plus OpenAI employees, threatened to join Microsoft if the original team was not reinstated. It later emerged that Altman's handling of his attempted removal of board member Helen Toner largely precipitated the firing. Altman was officially back as CEO two weeks later, and the company claimed it was more united than ever. But the chaotic episode left lingering questions about the company's future and the safety of relying on a potentially unstable company (with an unusual nonprofit/for-profit structure) to responsibly develop what many assume will be world-changing technology.

The tech keeps rolling along

Even though we just covered ten major storylines related to AI from 2023, it feels like they barely scratch the surface of such a busy year. In June, I wrote about buying the last in-print encyclopedia, which gives me nostalgic comfort in the age of AI hallucination that we talked about above. And we've covered a bevy of interesting AI-generated visual stories, including AI-generated QR codes, geometric spirals, and mind-warping beer commercials.

All the while, perceived market leader OpenAI has never sat still technologically, releasing a ChatGPT app in May and introducing image recognition capability to ChatGPT Plus in September. GPT-4 Turbo and GPTs (custom roles for AI assistants) followed in November, and the year ended with GPT-5 development apparently underway. The story of Google's Gemini is still unfolding as well.

It's been a busy and impactful year, and we'd like to thank everyone for sticking with Ars Technica as we've wrestled with how to most effectively cover this rapidly evolving field. We appreciate your comments, support, and feedback. Our prediction for 2024? Buckle up.
