Original botched launch still haunts new version of data-scraping AI feature.

Microsoft is preparing to reintroduce Recall to Windows 11. A feature limited to Copilot+ PCs—a label that just a fraction of a fraction of Windows 11 systems even qualify for—Recall has been controversial in part because it builds an extensive database of text and screenshots that records almost everything you do on your PC.

But the main problem with the initial version of Recall—the one that was delayed at the last minute after a large-scale outcry from security researchers, reporters, and users—was not just that it recorded everything you did on your PC but that it was a rushed, enabled-by-default feature with gaping security holes that made it trivial for anyone with any kind of access to your PC to see your entire Recall database.

It made no effort to automatically exclude sensitive data like bank information or credit card numbers, offering users only a few mechanisms for manually excluding specific apps or websites. It had been built quickly, outside of the normal extensive Windows Insider preview and testing process. And all of this was happening at the same time that the company was pledging to prioritize security over all other considerations, following several serious and highly public breaches.

Any coverage of the current version of Recall should mention what has changed since then.

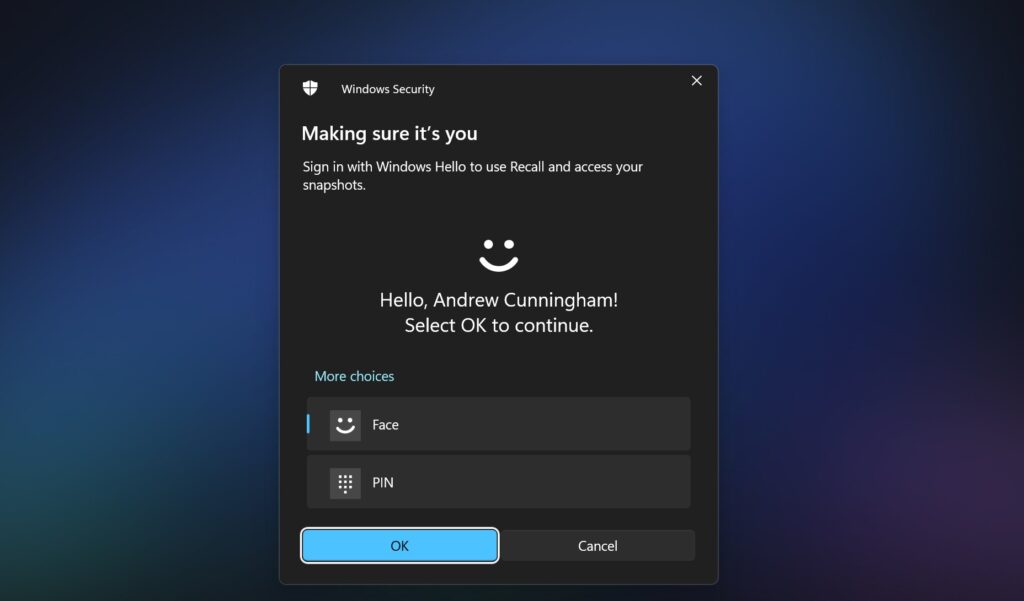

Recall is being rolled out to Microsoft’s Windows Insider Release Preview channel after months of testing in the more experimental and less-stable channels, just like most other Windows features. It’s turned off by default and can be removed from Windows root-and-branch by users and IT administrators who don’t want it there. Microsoft has overhauled the feature's underlying security architecture, encrypting data at rest so it can't be accessed by other users on the PC, adding automated filters to screen out sensitive information, and requiring frequent reauthentication with Windows Hello any time a user accesses their own Recall database.

Testing how Recall works

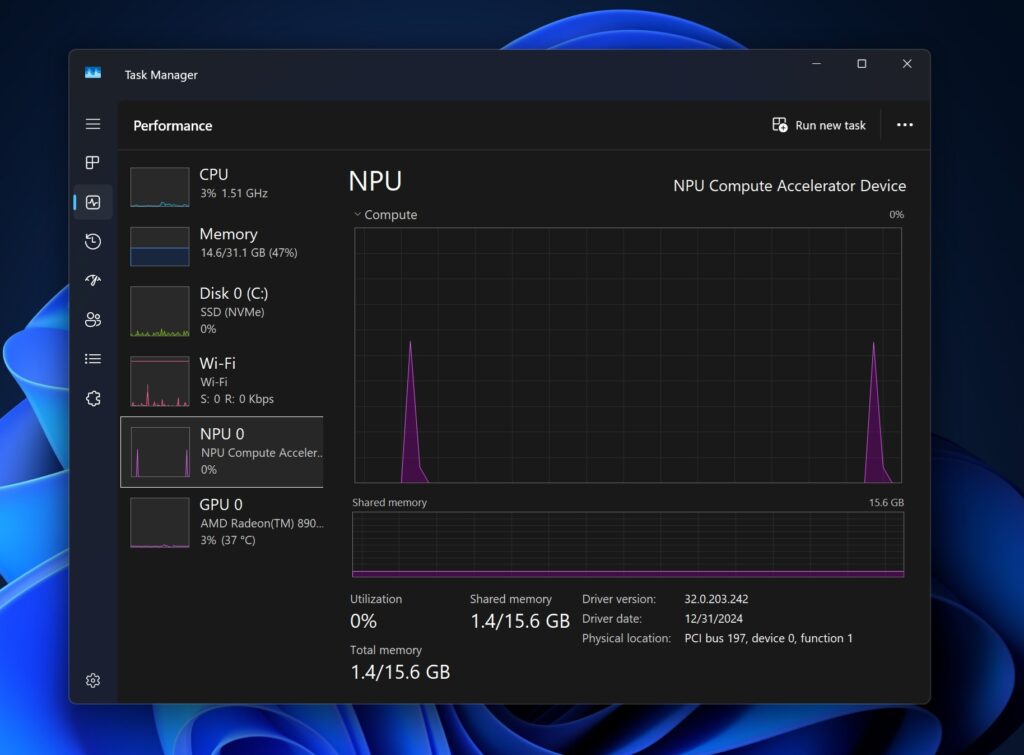

I installed the Release Preview Windows 11 build with Recall on a Snapdragon X Elite version of the Surface Laptop and a couple of Ryzen AI PCs, which all have NPUs fast enough to support the Copilot+ features.

No Windows PC without this kind of NPU will offer Recall or any other Copilot+ features; that covers every single PC sold before mid-2024 and the vast majority of PCs sold since then. Users may find ways to run those features on unsupported hardware, but by default, Recall isn't something most of Windows' current user base will have to worry about.

After installing the update, you'll see a single OOBE-style setup screen describing Recall and offering to turn it on; as promised, it is now off by default until you opt in. And even if you accept Recall on this screen, you have to opt in a second time as part of the Recall setup to actually turn the feature on. We'll be on high alert for a bait-and-switch when Microsoft is ready to remove Recall's "preview" label, whenever that happens, but at least for now, opt-in means opt-in.

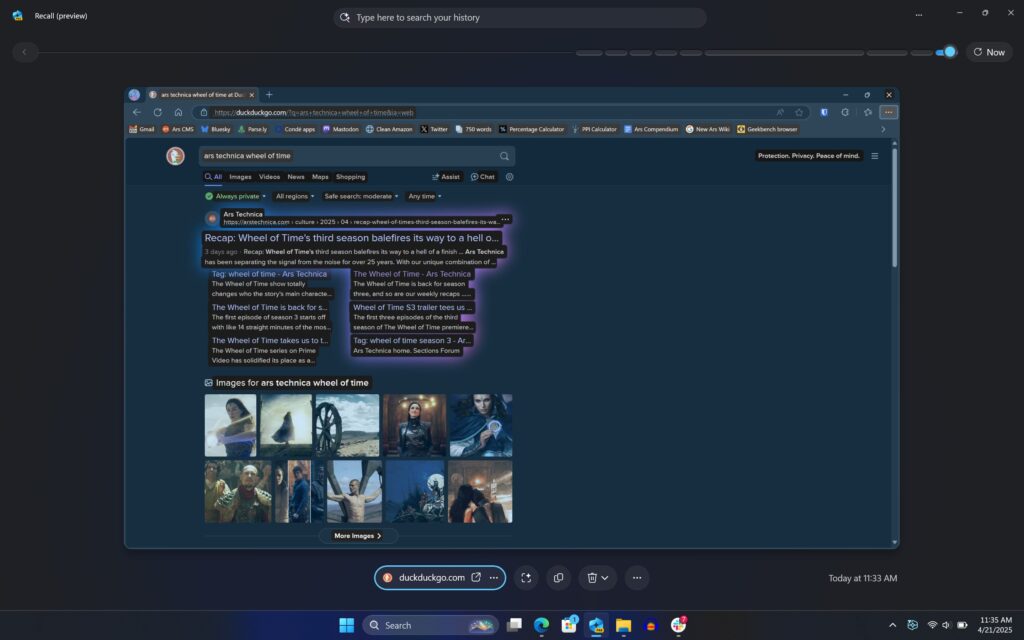

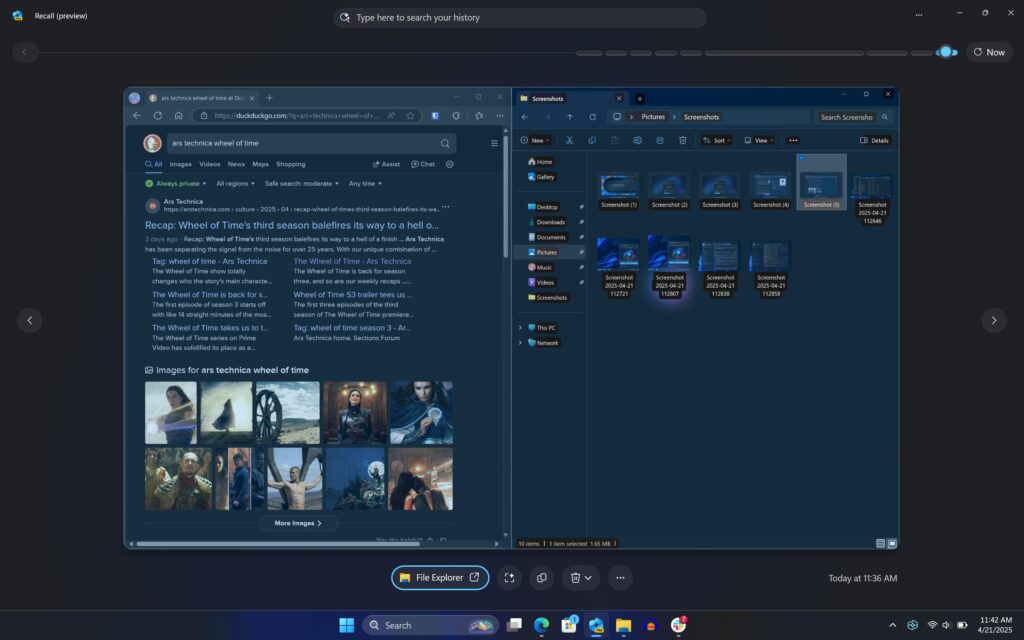

Enable Recall, and the snapshotting begins. As before, it's storing two things: actual screenshots of the active area of your screen, minus the taskbar, and a searchable database of text that it scrapes from those screenshots using OCR. Somewhat oddly, there are limits on what Recall will offer to OCR for you; even if you're using multiple apps onscreen at the same time, only the active, currently-in-focus app seems to have its text scraped and stored.

This is also more or less how Recall handles multi-monitor support; only the active display has screenshots taken, and only the active window on the active display is OCR'd. This does prevent Recall from taking gigabytes and gigabytes of screenshots of static or empty monitors, though it means the app may miss capturing content that updates passively if you don't interact with those windows periodically.

Only the active window is OCR'd in Recall (here, the Explorer window), even when multiple apps are visible in a single snapshot. This is also how multi-monitor support is handled; only the active desktop is being snapshotted at any given time.
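To make that capture behavior concrete, here is a minimal Python sketch of the rule as observed in testing: screenshot only the display holding the focused window, and OCR only that window. The `Window` type, its fields, and `build_snapshot` are hypothetical illustrations, not Microsoft's implementation or any real Windows API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Window:
    """Hypothetical stand-in for an onscreen window (not a real Windows API type)."""
    app: str
    display: int
    has_focus: bool
    text: str  # stand-in for the text OCR would extract from this window


def build_snapshot(windows: list[Window]) -> Optional[dict]:
    """Hypothetical sketch: one display screenshotted, one window OCR'd."""
    focused = next((w for w in windows if w.has_focus), None)
    if focused is None:
        return None  # nothing in focus, so nothing to capture in this sketch
    return {
        "display": focused.display,  # only the active display is screenshotted
        "ocr_app": focused.app,      # only the in-focus window's text is stored
        "ocr_text": focused.text,
    }


windows = [
    Window("Explorer", display=0, has_focus=True, text="Documents Downloads"),
    Window("Edge", display=0, has_focus=False, text="Breaking news"),
    Window("Teams", display=1, has_focus=False, text="Standup at 10"),
]
snap = build_snapshot(windows)
print(snap["display"], snap["ocr_app"])  # 0 Explorer
```

In this sketch the Edge and Teams text never reaches the stored OCR output, which mirrors why content in windows you don't interact with can be missed.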

All of this OCR'd text is fully searchable and can be copied directly from Recall to be pasted somewhere else. Recall will also offer to open whatever app or website is visible in the screenshot, and it gives you the option to delete that specific screenshot and all screenshots from specific apps (handy if you decide you want to add an entire app to your filtering settings and get rid of all existing snapshots of it).

Here are some basic facts about how Recall works on a PC since there's a lot of FUD circulating about this, and much of the information on the Internet is about the older, insecure version from last year:

- Recall is per-user. Setting up Recall for one user account does not turn on Recall for all users of a PC.

- Recall does not require a Microsoft account.

- Recall does not require an Internet connection or any cloud-side processing to work.

- Recall does require your local disk to be encrypted with Device Encryption/BitLocker.

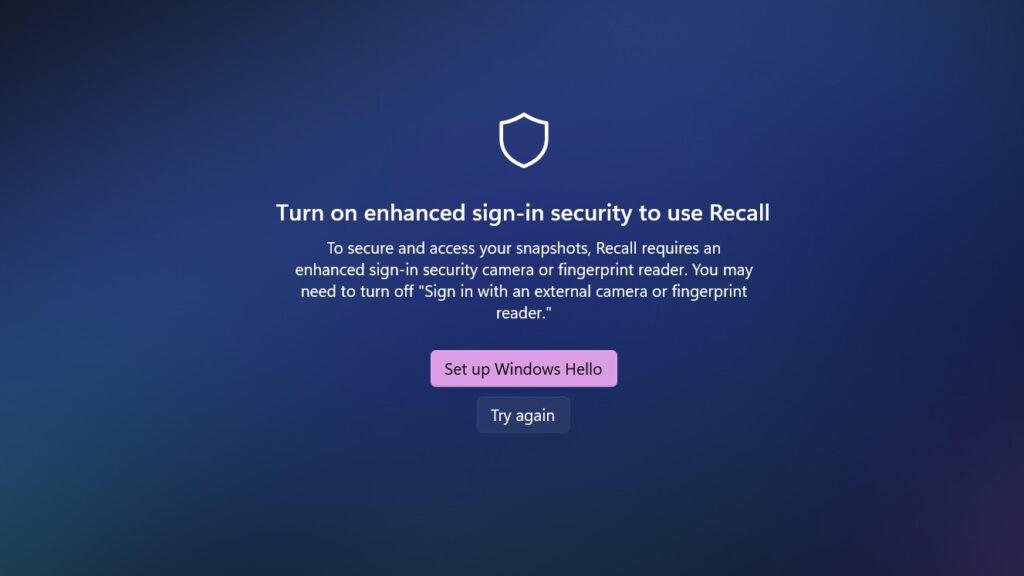

- Recall does require Windows Hello and either a fingerprint reader or face-scanning camera for setup; you cannot set up Recall without having a fingerprint or face scan registered. Once it's set up, a Windows Hello PIN can be used to unlock it as a fallback option.

- Windows Hello authentication happens every time you open the Recall app.

- Enabling Recall and changing its settings does not require an administrator account.

- Recall can be uninstalled entirely by unchecking it in the legacy Windows Features control panel (you can also search for "turn Windows features on and off").

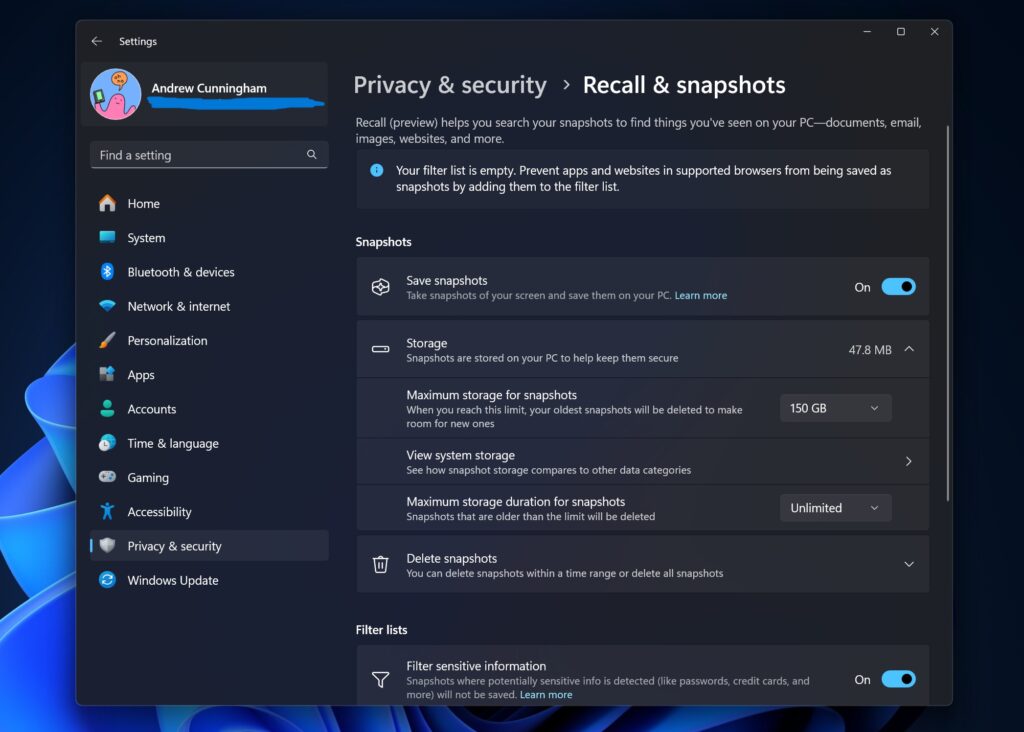

If you read our coverage of the initial version, there's a whole lot about how Recall functions that's essentially the same as it was before. In Settings, you can see how much storage the feature is using and limit the total amount of storage Recall can use. The amount of time a snapshot can be kept is normally determined by the amount of space available, not by the age of the snapshot, but you can optionally choose a second age-based expiration date for snapshots (options range from 30 to 180 days).

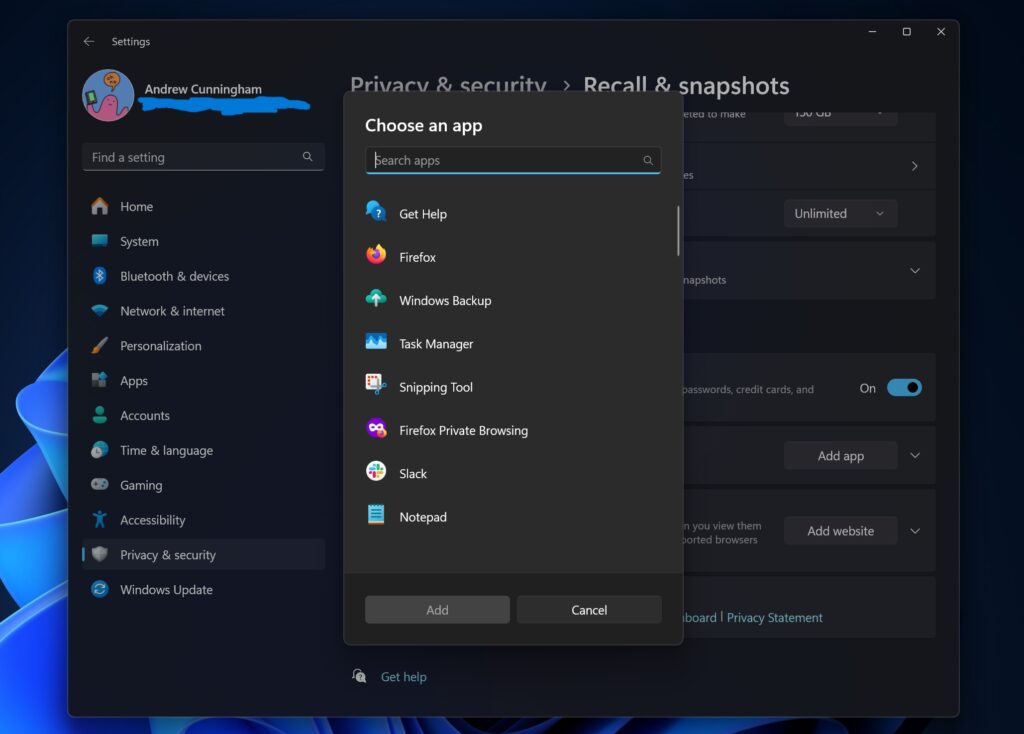

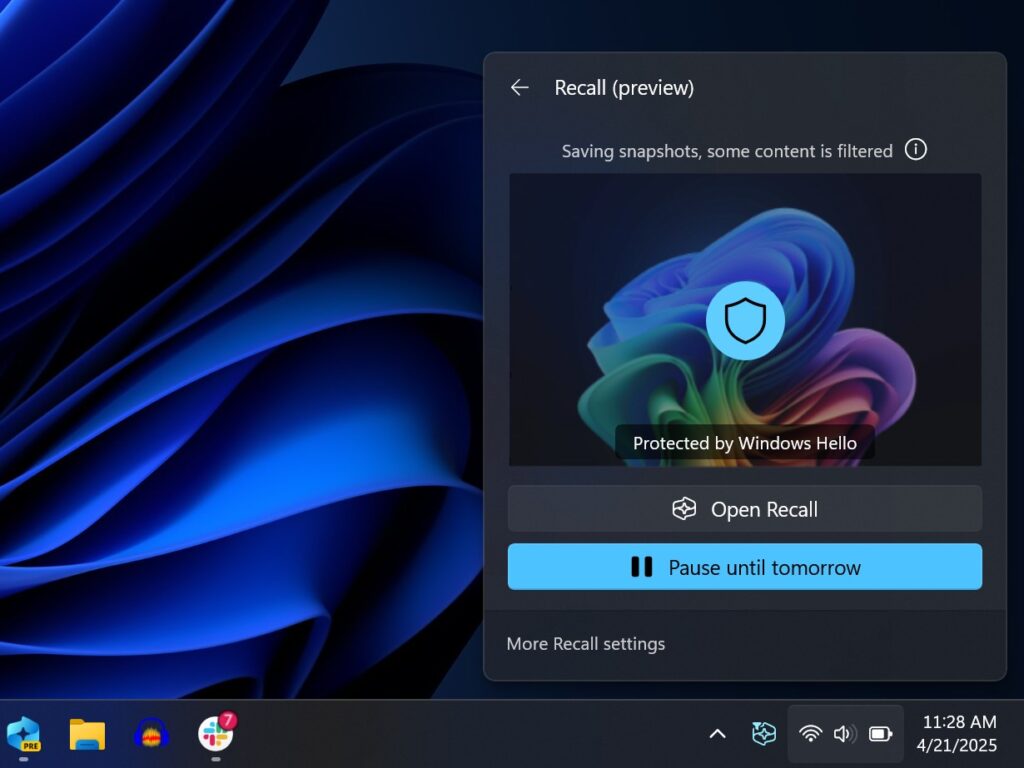

It's also possible to delete the entire database or all recent snapshots (those from the past hour, past day, past week, or past month), toggle the automated filtering of sensitive content, or add specific apps and websites you'd like to have filtered. Recall can temporarily be paused by clicking the system tray icon (which is always visible when you have Recall turned on), and it can be turned off entirely in Settings. Neither of these options will delete existing snapshots; they just stop your PC from creating new ones.

The amount of space Recall needs to do its thing will depend on a bunch of factors, including how actively you use your PC and how many things you filter out. But in my experience, it can easily generate a couple hundred megabytes of images per day. A Ryzen system with a 1TB SSD allocated 150GB of space to Recall snapshots by default, but even a smaller 25GB Recall database could easily store a few months of data.
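The "few months" figure follows from simple division. This Python sketch assumes a steady rate of 200 to 300MB per day (a reading of "a couple hundred megabytes") and decimal units (1GB = 1,000MB); real usage will vary day to day.

```python
def days_of_history(quota_gb: float, mb_per_day: float) -> float:
    """Rough estimate of how many days of snapshots fit in a Recall storage quota."""
    # Assumes decimal units (1 GB = 1,000 MB) and a steady daily capture rate
    return quota_gb * 1000 / mb_per_day


for quota_gb in (25, 150):
    for mb_per_day in (200, 300):
        days = days_of_history(quota_gb, mb_per_day)
        print(f"{quota_gb} GB at {mb_per_day} MB/day: ~{days:.0f} days")
```

Under those assumptions, 25GB holds roughly 83 to 125 days (about three to four months), and the 150GB default holds roughly 500 to 750 days.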

Fixes: Improved filtering, encryption at rest

You can manually add apps and sites that you know you don't want to end up in Recall to the exclusion lists in the Settings app. Major browsers running in private or incognito modes are also generally not snapshotted.

If an app that's being filtered for any reason is onscreen, even alongside an app that isn't being filtered, Recall won't take pictures of your desktop at all. I ran an InPrivate Microsoft Edge window next to a regular window, and Microsoft's solution is just to avoid capturing and storing screenshots entirely rather than filtering or blanking out the filtered app or site in some way.

This is probably the best way to do it! It minimizes the risk of anything being captured accidentally just because it's running in the background, for example. But it could mean you don't end up capturing much in Recall at all if you're frequently mixing filtered and unfiltered apps.
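The all-or-nothing behavior observed here can be expressed as a short Python sketch. The `VisibleWindow` type, `should_capture`, and the subdomain-matching rule for excluded sites are hypothetical; the sketch models only the manual exclusion lists and private browsing, not Microsoft's automated sensitive-content detection.

```python
from dataclasses import dataclass
from typing import Optional
from urllib.parse import urlparse


@dataclass
class VisibleWindow:
    app: str
    url: Optional[str] = None  # set for browser tabs
    private: bool = False      # InPrivate/incognito window


def should_capture(windows: list[VisibleWindow],
                   excluded_apps: set[str],
                   excluded_sites: set[str]) -> bool:
    """Hypothetical rule: any filtered window onscreen blocks the whole snapshot."""
    for w in windows:
        if w.private or w.app in excluded_apps:
            return False
        if w.url:
            host = urlparse(w.url).hostname or ""
            # Assumed matching: the excluded domain itself or any subdomain of it
            if any(host == s or host.endswith("." + s) for s in excluded_sites):
                return False
    return True


apps = {"Bitwarden"}
sites = {"mybank.example"}
edge = VisibleWindow("Edge", url="https://news.example/story")
in_private = VisibleWindow("Edge", url="https://news.example/", private=True)
print(should_capture([edge], apps, sites))              # True
print(should_capture([edge, in_private], apps, sites))  # False
```

Skipping the whole frame is simpler and safer than trying to blank out one region, at the cost of losing everything else that was onscreen.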

The Recall tray icon is always visible when the service is running, just so you're always aware of it. Sometimes it will show you snapshot previews here if you've opened the Recall app and unlocked it recently, but usually these previews are blurred because Windows Hello is protecting them.

When anything is being filtered for any reason, the tray icon changes and you get a status message here, but Recall doesn't tell you what is being filtered or why.

New to this version of Recall is an attempt at automated content filtering to address one of the major concerns about the original iteration of Recall—that it can capture and store sensitive information like credit card numbers and passwords. This filtering is based on the technology Microsoft uses for Microsoft Purview Information Protection, an enterprise feature used to tag sensitive information on business, healthcare, and government systems.

This automated content filtering is hit and miss. Recall wouldn't take snapshots of a webpage with a visible credit card field, or my online banking site, or an image of my driver's license, or a recent pay stub, or of the Bitwarden password manager while viewing credentials. But I managed to find edge cases in less than five minutes, and you'll be able to find them, too; Recall saved snapshots showing a recent check, with the account holder's name, address, and account and routing numbers visible, and others testing it have still caught it recording credit card information in some cases.

The automated filtering is still a big improvement over the original version, which captured this kind of information indiscriminately. But things will inevitably slip through, and the automated filtering won't help at all with other kinds of data; Recall will take pictures of email and messaging apps without distinguishing between what's sensitive (school information for my kid, emails about Microsoft's own product embargoes) and what isn't.

The upshot is that if you capture months and months and gigabytes and gigabytes of Recall data on your PC, it's inevitable that it will capture something you probably wouldn't want to be preserved in an easily searchable database.

One issue is that there's no easy way to check and confirm what Recall is and isn't filtering without actually scrolling through the database and checking snapshots manually. The system tray status icon does change to display a small triangle and will show you a "some content is being filtered" status message when something is being filtered, but the system won't tell you what it is; I have some kind of filtered app or browser tab open somewhere right now, and I have no idea which one it is because Windows won't tell me. That any attempt at automated filtering is hit-and-miss should be expected, but more transparency would help instill trust and help users fine-tune their filtering settings.

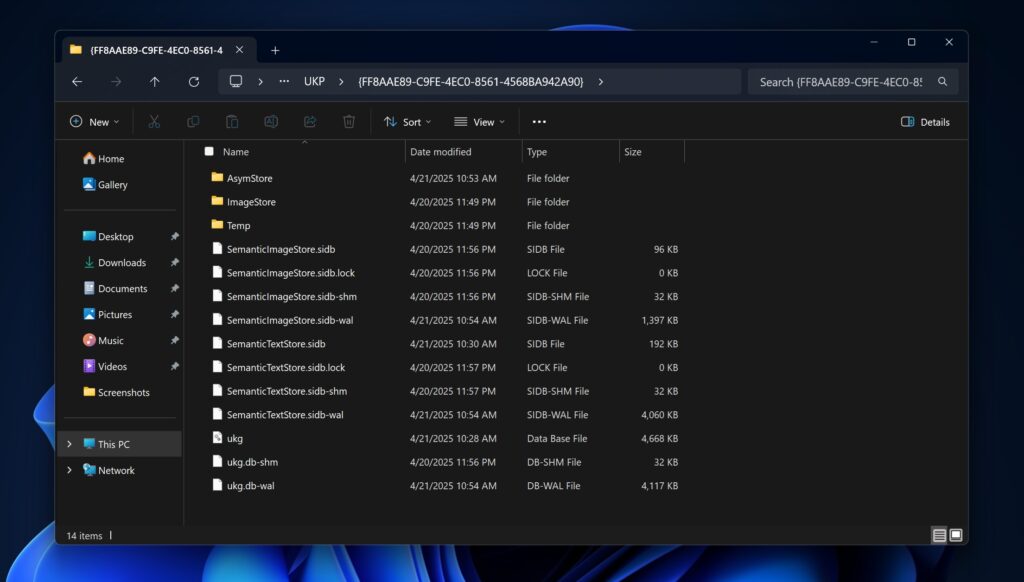

Microsoft also seems to have fixed the single largest problem with Recall: previously, all screenshots and the entire text database were stored in plaintext, with no encryption of their own. The files were usually encrypted in a technical sense, insofar as the entire SSD in a modern PC is encrypted when you sign into a Microsoft account or enable BitLocker, but any user with any kind of access to your PC (either physical or remote) could easily grab those files and view them anywhere with no additional authentication necessary.

This is fixed now, though Recall's entire file structure is still available for anyone to look at, stored away in the user’s AppData folder in a directory called CoreAIPlatform.00\UKP. Other administrators on the same PC can still navigate to these folders from a different user account and move or copy the files, but encryption renders them (hypothetically) unreadable.

Microsoft has gone into some detail about exactly how it's protecting and storing the encryption keys used to encrypt these files—the company says "all encryption keys [are] protected by a hypervisor or TPM." Rate-limiting and "anti-hammering" protections are also in place to protect Recall data, though I kind of have to take Microsoft at its word on that one.
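Microsoft hasn't published how its rate-limiting works, so the following is only a generic Python illustration of the anti-hammering idea: a counter that imposes escalating lockouts after repeated failed unlock attempts. `AttemptLimiter`, the three free attempts, and the 30-second base delay are all hypothetical choices, not Recall's or Windows Hello's actual mechanism.

```python
class AttemptLimiter:
    """Generic anti-hammering sketch (hypothetical; not Microsoft's implementation)."""

    def __init__(self, free_attempts: int = 3, base_delay_s: float = 30.0):
        self.free_attempts = free_attempts
        self.base_delay_s = base_delay_s
        self.failures = 0
        self.locked_until = 0.0

    def can_attempt(self, now: float) -> bool:
        return now >= self.locked_until

    def record(self, success: bool, now: float) -> None:
        if success:
            # A successful unlock clears the failure history
            self.failures = 0
            self.locked_until = 0.0
            return
        self.failures += 1
        if self.failures >= self.free_attempts:
            # Lockout doubles with each failure: 30 s, 60 s, 120 s, ...
            extra = self.failures - self.free_attempts
            self.locked_until = now + self.base_delay_s * 2 ** extra


limiter = AttemptLimiter()
for t in (0, 1, 2):
    limiter.record(success=False, now=t)
print(limiter.can_attempt(10), limiter.can_attempt(32))  # False True
```

The point of escalating delays is that guessing a short PIN quickly becomes impractical, which is also why the strength of such protections depends on the attacker not being able to copy the data elsewhere and guess offline.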

That said, I don't love that it's still possible to get at those files at all. It leaves open the possibility that someone could theoretically grab a few megabytes' worth of data. But it's now much harder to get at that data, and better filtering means what is in there should be slightly less all-encompassing.

Lingering technical issues

As we mentioned already, Microsoft's automated content filtering is hit-and-miss. Certainly, there's a lot of stuff that the original version of Recall would capture that the new one won't, but I didn't have to work hard to find corner cases, and you probably won't, either. Turning Recall on still means assuming risk and being comfortable with the data and authentication protections Microsoft has implemented.

We'd also like there to be a way for apps to tell Recall to exclude them by default, which would be useful for password managers, encrypted messaging apps, and any other software where privacy is meant to be the point. Yes, users can choose to exclude these apps from Recall themselves. But as with Recall itself, opting in to having that data collected would be preferable to needing to opt out.

Another issue is that, while Recall does require a fingerprint reader or face-scanning camera when you set it up the very first time, you can unlock it with a Windows Hello PIN after it's already going.

Microsoft has said that this is meant to be a fallback option in case you need to access your Recall database and there's some kind of hardware issue with your fingerprint sensor. But in practice, it feels like too easy a workaround for a domestic abuser or someone else with access to your PC and a reason to know your PIN (and note that the PIN also gets them into your PC in the first place, so encryption isn't really a fix for this). It feels like too broad a solution for a relatively rare problem.

Security researcher Kevin Beaumont, whose testing helped call attention to the problems with the original version of Recall last year, identified this as one of Recall's biggest outstanding technical problems.

"In my opinion, requiring devices to have enhanced biometrics with Windows Hello but then not requiring said biometrics to actually access Recall snapshots is a big problem," Beaumont wrote. "It will create a false sense of security in customers and false downstream advertising about the security of Recall."

Beaumont also noted that, while the encryption on the Recall snapshots and database made it a "much, much better design," "all hell would break loose" if attackers ever worked out a way to bypass this encryption.

"Microsoft know this and have invested in trying to stop it by encrypting the database files, but given I live in the trenches where ransomware groups are running around with zero days in Windows on an almost monthly basis nowadays, where patches arrive months later... Lord, this could go wrong," he wrote.

But most of what’s wrong with Recall is harder to fix

Microsoft has actually addressed many of the specific, substantive Recall complaints raised by security researchers and our own reporting. It's gone through the standard Windows testing process and has been available in public preview in its current form since late November. And yet the knee-jerk reaction to Recall news is still generally to treat it as though it were the same botched, bug-riddled software that nearly shipped last summer.

Some of this is the asymmetrical nature of how news spreads on the Internet—without revealing traffic data, I'll just say that articles about Recall having problems have been read many, many more times by many more people than pieces about the steps Microsoft has taken to fix Recall. The latter reports simply aren't being encountered by many of the minds Microsoft needs to change.

But the other problem goes deeper than the technology itself and gets back to something I brought up in my first Recall preview nearly a year ago—regardless of how it is architected and regardless of how many privacy policies and reassurances the company publishes, people simply don't trust Microsoft enough to be excited about "the feature that records and stores every single thing you do with your PC."

Recall continues to demand an extraordinary level of trust that Microsoft hasn't earned. However secure and private it is—and, again, the version people will actually get is much better than the version that caused the original controversy—it just feels creepy to open up the app and see confidential work materials and pictures of your kid. You're already trusting Microsoft with those things any time you use your PC, but there's something viscerally unsettling about actually seeing evidence that your computer is tracking you, even if you're not doing anything you're worried about hiding, even if you've excluded certain apps or sites, and even if you "know" that part of the reason why Recall requires a Copilot+ PC is because it's processing everything locally rather than on a server somewhere.

This was a problem that Microsoft made exponentially worse by screwing up the Recall rollout so badly in the first place. Recall made the kind of ugly first impression that it's hard to dig out from under, no matter how thoroughly you fix the underlying problems. It's Windows Vista. It's Apple Maps. It's the Android tablet.

And in doing that kind of damage to Recall (and possibly also to the broader Copilot+ branding project), Microsoft has practically guaranteed that many users will refuse to turn it on or will uninstall it entirely, no matter how it actually works or how well the initial problems have been addressed.

Unfortunately, those people probably have it right. I can see no signs that Recall data is as easily accessed or compromised as before or that Microsoft is sending any Recall data from my PC to anywhere else. But today's Microsoft has earned itself distrust-by-default from many users, thanks not just to the sloppy Recall rollout but also to the endless ads and aggressive cross-promotion of its own products that dominate modern Windows versions. That's the kind of problem you can't patch your way out of.
