The United Kingdom's National Cyber Security Centre (NCSC) warns that artificial intelligence (AI) tools will have an adverse near-term impact on cybersecurity, helping escalate the threat of ransomware.

The agency says cybercriminals already use AI for various purposes, and the phenomenon is expected to worsen over the next two years, helping increase the volume and severity of cyberattacks.

The NCSC believes that AI will enable inexperienced threat actors, hackers-for-hire, and low-skilled hacktivists to conduct more effective, tailored attacks that would otherwise require significant time, technical knowledge, and operational effort.

Most available large language model (LLM) platforms, such as ChatGPT and Bing Chat, have safeguards that prevent the platform from creating malicious content.

However, the NCSC warns that cybercriminals are building and marketing specialized generative AI services designed to support criminal activity. Examples include WormGPT, a paid-for LLM service that allows threat actors to generate malicious content, including malware and phishing lures.

This indicates that the technology has already escaped the confines of controlled, secure frameworks, becoming accessible in the broader criminal ecosystem.

"Threat actors, including ransomware actors, are already using AI to increase the efficiency and effectiveness of aspects of cyber operations, such as reconnaissance, phishing and coding," warns the NCSC in a separate threat assessment.

"This trend will almost certainly continue to 2025 and beyond."

The report notes that AI's role in the cyber-risk landscape is expected to be evolutionary rather than transformative, enhancing existing threats instead of creating entirely new ones.

The key points of NCSC's assessment are the following:

- AI will likely intensify cyber attacks in the next two years, particularly through the evolution of current tactics.

- Both skilled and less skilled cyber threat actors, including state and non-state entities, are currently utilizing AI.

- AI enhances reconnaissance and social engineering, making them more effective and difficult to detect.

- Sophisticated AI use in cyber operations will mainly be limited to actors with access to quality data, expertise, and resources until 2025.

- AI will make cyber attacks against the UK more impactful by enabling faster, more effective data analysis and training of AI models.

- AI lowers entry barriers for novice cybercriminals, contributing to the global ransomware threat.

- By 2025, the commoditization of AI capabilities will likely expand access to advanced tools for both cyber criminals and state actors.

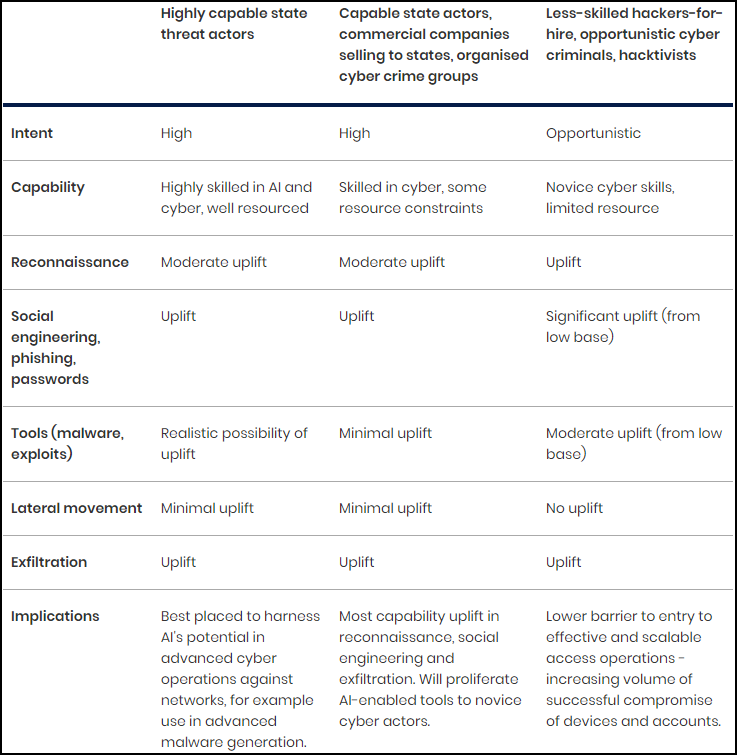

The table below summarizes the effects AI is expected to have on specific threat areas for three skill levels.

For sophisticated APTs, NCSC believes AI will help them generate evasive custom malware more easily and faster.

"AI has the potential to generate malware that could evade detection by current security filters, but only if it is trained on quality exploit data," explains NCSC.

"There is a realistic possibility that highly capable states have repositories of malware that are large enough to effectively train an AI model for this purpose."

Intermediate-level hackers will primarily gain advantages in reconnaissance, social engineering, and data extraction, whereas less skilled threat actors will see enhancements across the board, except in lateral movement, which remains challenging.

"AI is likely to assist with malware and exploit development, vulnerability research, and lateral movement by making existing techniques more efficient," reads the analysis.

"However, in the near term, these areas will continue to rely on human expertise, meaning that any limited uplift will highly likely be restricted to existing threat actors that are already capable."

Overall, NCSC warns that generative AI and large language models will make it highly challenging for everyone, regardless of experience and skill level, to identify phishing, spoofing, and social engineering attempts.

