Academic researchers have devised a new working exploit that commandeers Amazon Echo smart speakers and forces them to unlock doors, make phone calls and unauthorized purchases, and control furnaces, microwave ovens, and other smart appliances.

The attack works by using the device’s speaker to issue voice commands. As long as the speech contains the device wake word (usually “Alexa” or “Echo”) followed by a permissible command, the Echo will carry it out, researchers from Royal Holloway University in London and Italy’s University of Catania found. Even when devices require verbal confirmation before executing sensitive commands, it’s trivial to bypass the measure by adding the word “yes” about six seconds after issuing the command. Attackers can also exploit what the researchers call the "FVV," or full voice vulnerability, which allows Echos to make self-issued commands without temporarily reducing the device volume.

Alexa, go hack yourself

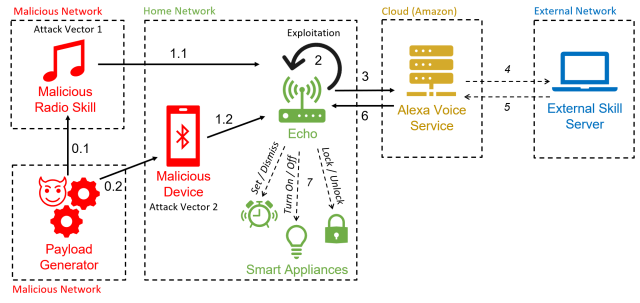

Because the hack uses Alexa functionality to force devices to make self-issued commands, the researchers have dubbed it "AvA," short for Alexa vs. Alexa. It requires only a few seconds of proximity to a vulnerable device while it's turned on, during which an attacker utters a voice command instructing it to pair with the attacker's Bluetooth-enabled device. As long as the attacker's device remains within radio range of the Echo, the attacker will be able to issue commands.

The attack "is the first to exploit the vulnerability of self-issuing arbitrary commands on Echo devices, allowing an attacker to control them for a prolonged amount of time," the researchers wrote in a paper published two weeks ago. “With this work, we remove the necessity of having an external speaker near the target device, increasing the overall likelihood of the attack.”

A variation of the attack uses a malicious radio station to generate the self-issued commands. That attack is no longer possible in the way shown in the paper following security patches that Echo-maker Amazon released in response to the research. The researchers have confirmed that the attacks work against 3rd- and 4th-generation Echo Dot devices.

AvA begins when a vulnerable Echo device connects by Bluetooth to the attacker’s device (and for unpatched Echos, when they play the malicious radio station). From then on, the attacker can use a text-to-speech app or other means to stream voice commands. Here’s a video of AvA in action. All the variations of the attack remain viable, with the exception of what’s shown between 1:40 and 2:14:

The researchers found they could use AvA to force devices to carry out a host of commands, many with serious privacy or security consequences. Possible malicious actions include:

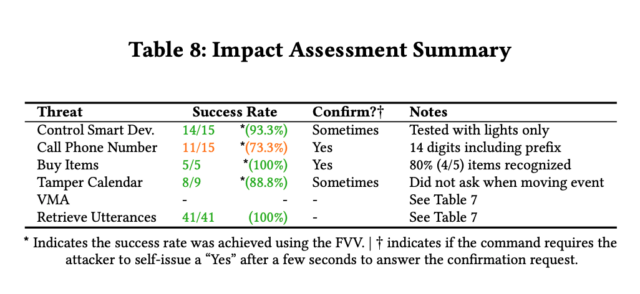

- Controlling other smart appliances, such as turning off lights, turning on a smart microwave oven, setting the heating to an unsafe temperature, or unlocking smart door locks. As noted earlier, when Echos require confirmation, the adversary only needs to append a "yes" about six seconds after the request.

- Calling any phone number, including one controlled by the attacker, which makes it possible to eavesdrop on nearby sounds. While Echos use a light to indicate that they are making a call, devices are not always visible to users, and less experienced users may not know what the light means.

- Making unauthorized purchases using the victim's Amazon account. Although Amazon will send an email notifying the victim of the purchase, the email may be missed, or the user may lose trust in Amazon. Attackers can also delete items already in the account's shopping cart.

- Tampering with a user’s previously linked calendar to add, move, delete, or modify events.

- Impersonating skills or starting any skill of the attacker's choice. This, in turn, could allow attackers to obtain passwords and personal data.

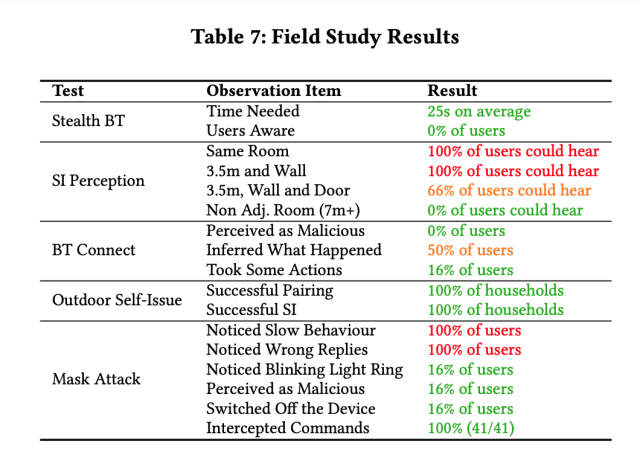

- Retrieving all utterances made by the victim. Using what the researchers call a "mask attack," an adversary can intercept commands and store them in a database. This could allow the adversary to extract private data, learn which skills the victim uses, and infer user habits.

The researchers wrote:

With these tests, we demonstrated that AvA can be used to give arbitrary commands of any type and length, with optimal results—in particular, an attacker can control smart lights with a 93% success rate, successfully buy unwanted items on Amazon 100% of the times, and tamper [with] a linked calendar with 88% success rate. Complex commands that have to be recognized correctly in their entirety to succeed, such as calling a phone number, have an almost optimal success rate, in this case 73%. Additionally, results shown in Table 7 demonstrate the attacker can successfully set up a Voice Masquerading Attack via our Mask Attack skill without being detected, and all issued utterances can be retrieved and stored in the attacker’s database, namely 41 in our case.

As noted earlier, Amazon has fixed several of the weaknesses. These include one that used Alexa skills to self-wake devices, which made it easy to deliver self-issued commands through radio stations. In a statement, company officials wrote:

At Amazon, privacy and security are foundational to how we design and deliver every device, feature, and experience. We appreciate the work of independent security researchers who help bring potential issues to our attention and are committed to working with them to secure our devices. We fixed the remote self-wake issue with Alexa Skills caused by extended periods of silence resulting from break tags as demonstrated by the researchers. We also have systems in place to continually monitor live skills for potentially malicious behavior, including silent re-prompts. Any offending skills we identify are blocked during certification or quickly deactivated, and we are constantly improving these mechanisms to further protect our customers.

Always listening

The research is the latest to underscore the risks posed by smart speakers. In 2019, researchers demonstrated how eight malicious apps they developed surreptitiously eavesdropped on users and phished their passwords. Four were skills that passed Amazon's vetting process, and four were actions that passed Google's vetting. The malicious skills and actions were hosted by Amazon and Google, respectively. All but one posed as simple apps for checking horoscopes; the remaining one masqueraded as a random-number generator.

The same year, a different team of researchers showed how Siri, Alexa, and Google Assistant were vulnerable to attacks that used low-powered lasers to inject inaudible, and sometimes invisible, commands into the devices. The commands could surreptitiously cause them to unlock doors, visit websites, and locate, unlock, and start vehicles. The lasers could be as far away as 360 feet from a vulnerable device. The light-based commands could also be sent from one building to another and penetrate glass when a vulnerable device is located near a closed window.

The researchers behind AvA are Sergio Esposito and Daniele Sgandurra of Royal Holloway University and Giampaolo Bella of the University of Catania. As a countermeasure to make attacks less likely, they recommend that Echo users mute their microphones any time they’re not actively using their device.

“This makes it impossible to self-issue any command,” the researchers wrote on an informational website. “Additionally, if the microphone is unmuted only when you are near Echo, you will be able to hear the self-issued commands, hence being able to timely react to them (powering off Echo, canceling an order that the attacker has placed with your Amazon account, e.g.).”

People can always exit a skill by saying, "Alexa, quit" or "Alexa, cancel." Users can also enable an audible indicator that is played after the Echo device detects the wake word.

Amazon has rated the threat posed by AvA as having “medium” severity. The requirement to have brief proximity to the device for Bluetooth pairing means AvA exploits don’t work over the Internet, and even when an adversary successfully pairs the Echo with a Bluetooth device, the latter device must remain within radio range. The attack may nonetheless be viable for domestic partner abusers, malicious insiders, or other people who have fleeting access to a vulnerable Echo.
