People have more efficient conversations, use more positive language and perceive each other more positively when using an artificial intelligence-enabled chat tool, a group of Cornell researchers has found.

Postdoctoral researcher Jess Hohenstein is lead author of "Artificial Intelligence in Communication Impacts Language and Social Relationships," published in Scientific Reports.

Co-authors include Malte Jung, associate professor of information science in the Cornell Ann S. Bowers College of Computing and Information Science (Cornell Bowers CIS), and Rene Kizilcec, assistant professor of information science (Cornell Bowers CIS).

Generative AI is poised to impact all aspects of society, communication and work. Every day brings new evidence of the technical capabilities of large language models (LLMs) like ChatGPT and GPT-4, but the social consequences of integrating these technologies into our daily lives are still poorly understood.

AI tools have the potential to improve efficiency, but they may also have negative social side effects. Hohenstein and colleagues examined how the use of AI in conversations affects the way people express themselves and view each other.

"Technology companies tend to emphasize the utility of AI tools to accomplish tasks faster and better, but they ignore the social dimension," Jung said. "We do not live and work in isolation, and the systems we use impact our interactions with others."

Alongside the gains in efficiency and positivity, the group found that when participants think their partner is using more AI-suggested responses, they perceive that partner as less cooperative and feel less affiliation toward them.

"I was surprised to find that people tend to evaluate you more negatively simply because they suspect that you're using AI to help you compose text, regardless of whether you actually are," Hohenstein said. "This illustrates the persistent overall suspicion that people seem to have around AI."

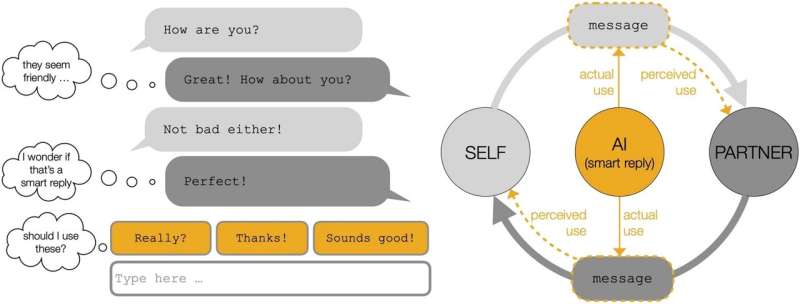

For their first experiment, co-author Dominic DiFranzo, a former postdoctoral researcher in the Cornell Robots and Groups Lab and now an assistant professor at Lehigh University, developed a smart-reply platform the group called "Moshi" (Japanese for "hello"), patterned after the now-defunct Google "Allo" (French for "hello"), the first smart-reply platform, unveiled in 2016. Smart replies are generated by LLMs, which predict plausible next responses in chat-based interactions.

A total of 219 pairs of participants were asked to talk about a policy issue and assigned to one of three conditions: both participants can use smart replies; only one participant can use smart replies; or neither participant can use smart replies.

The researchers found that using smart replies increased communication efficiency, positive emotional language and positive evaluations by communication partners. On average, smart replies accounted for 14.3% of sent messages (1 in 7).

But participants whose partners suspected them of responding with smart replies were evaluated more negatively than those who were thought to have typed their own responses, consistent with common assumptions about the negative implications of AI.

In a second experiment, 299 randomly assigned pairs of participants were asked to discuss a policy issue in one of four conditions: no smart replies; the default Google smart replies; smart replies with a positive emotional tone; and smart replies with a negative emotional tone. The presence of positive and Google smart replies caused conversations to have a more positive emotional tone than conversations with negative or no smart replies, highlighting the impact that AI can have on language production in everyday conversations.

"While AI might be able to help you write," Hohenstein said, "it's altering your language in ways you might not expect, especially by making you sound more positive. This suggests that by using text-generating AI, you're sacrificing some of your own personal voice."

Said Jung: "What we observe in this study is the impact that AI has on social dynamics and some of the unintended consequences that could result from integrating AI in social contexts. This suggests that whoever is in control of the algorithm may have influence on people's interactions, language and perceptions of each other."
