Today's quantum computing hardware is severely limited by errors that are difficult to avoid. Problems can arise at every step, from setting the initial state of a qubit to reading its output, and even idle qubits occasionally lose their state. Some of today's quantum processors can't use all of their qubits in a single calculation without errors becoming inevitable.

The solution is to combine multiple hardware qubits to form what's termed a logical qubit. This distributes a single bit of quantum information among multiple hardware qubits, reducing the impact of individual errors. Additional (ancillary) qubits can act as sensors that detect errors, allowing them to be corrected. Recently, there have been a number of demonstrations that logical qubits work in principle.
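The idea of spreading one bit across several carriers and using extra checks to locate errors can be sketched with a classical analogy. The snippet below is a minimal illustration that assumes a three-bit repetition code protecting only against bit flips; it is not the quantum code used in the work described here, and all function names are hypothetical. The two parity checks play the role of the ancillary "sensor" qubits: they reveal where an error sits without revealing the stored value.

```python
# Toy classical analogy of syndrome extraction (hypothetical helpers).
# A 3-bit repetition code guards one logical bit against single bit flips.
# This is NOT the quantum code used by Microsoft and Quantinuum.

def encode(bit: int) -> list[int]:
    """Spread one logical bit across three data bits."""
    return [bit, bit, bit]

def extract_syndrome(data: list[int]) -> tuple[int, int]:
    """Two parity checks, standing in for two ancillary qubits.

    Each check only reports whether a pair of data bits disagrees;
    neither reveals the encoded value itself.
    """
    return (data[0] ^ data[1], data[1] ^ data[2])

# Which data bit each syndrome points to (None means no error detected).
SYNDROME_TABLE = {
    (0, 0): None,
    (1, 0): 0,
    (1, 1): 1,
    (0, 1): 2,
}

def correct(data: list[int]) -> list[int]:
    """Flip the bit the syndrome implicates, if any."""
    fixed = list(data)
    culprit = SYNDROME_TABLE[extract_syndrome(fixed)]
    if culprit is not None:
        fixed[culprit] ^= 1
    return fixed

def decode(data: list[int]) -> int:
    """Read the logical bit by majority vote."""
    return 1 if sum(data) >= 2 else 0

if __name__ == "__main__":
    word = encode(1)
    word[2] ^= 1                      # inject a single physical error
    print(extract_syndrome(word))     # syndrome points at bit 2
    print(decode(correct(word)))      # logical bit is recovered
```

A second flip in the same word would fool this decoder (for example, flipping bits 0 and 1 of `encode(1)` yields a syndrome pointing at bit 2 and a decoded 0), which is why the physical error rate has to be low for the scheme to pay off.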

On Wednesday, Microsoft and Quantinuum announced that logical qubits work in more than principle. "We've been able to demonstrate what's called active syndrome extraction, or sometimes it's also called repeated error correction," Microsoft's Krysta Svore told Ars. "And we've been able to do this such that it is better than the underlying physical error rate. So it actually works."

A hardware/software stack

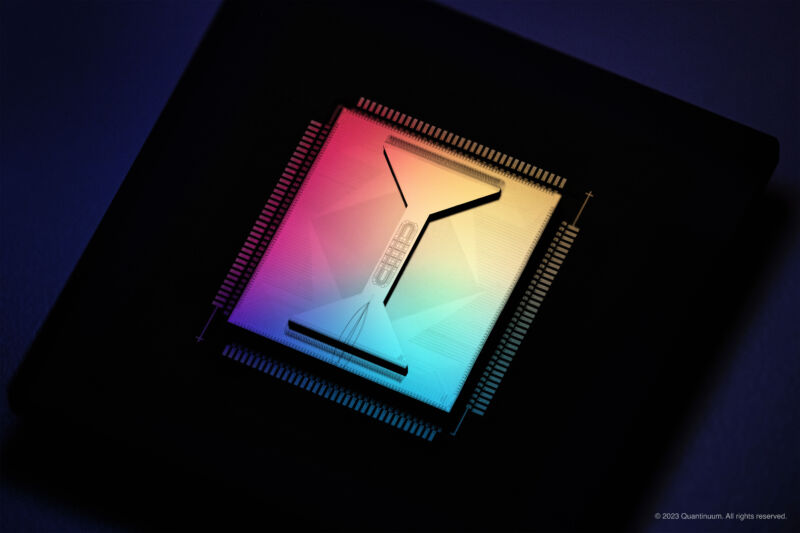

Microsoft has its own quantum computing efforts, and it also acts as a service provider for other companies' hardware. Its Azure Quantum service allows users to write instructions for quantum computers in a hardware-agnostic manner and then run them on machines from four different companies, several of which use radically different types of hardware qubits. This work, however, was done on one specific hardware platform: a trapped-ion computer from a company called Quantinuum.

We covered the technology behind Quantinuum's computers when the company was an internal project at industrial giant Honeywell. Briefly, trapped-ion qubits benefit from consistent behavior (there's no device-to-device variation in atoms), ease of control, and relative stability. Because the ions can be moved around easily, it's possible to entangle any qubit with any other in the hardware and to perform measurements on them while calculations are in progress. "These are some of the key capabilities: the two-qubit gate fidelities, the fact that you can move and have all the connectivity through movement, and then mid-circuit measurement," Svore told Ars.

Quantinuum's hardware does lag in one dimension: the total number of qubits. While some of its competitors have pushed over 1,000 qubits, Quantinuum's latest hardware is limited to 32 qubits.

That said, a low error rate is valuable for this work. Logical qubits work by combining multiple hardware qubits. If the individual qubits' error rate is too high, combining them just adds more places for errors to occur, and errors crop up more quickly than they can be corrected. So the error rate has to be below a critical point for error correction to work, and existing qubit technologies seem to be at that point, albeit barely. Initial work in this area had either barely detected the impact of error correction or had simply registered the errors without correcting them.
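The existence of a critical error rate shows up even in a toy model. The sketch below assumes a classical repetition code decoded by majority vote, with each copy flipping independently with probability `p`; `logical_error_rate` is a hypothetical helper written for illustration. Real quantum codes must also handle phase errors and checks that are themselves noisy, so their critical point is much lower than this toy's.

```python
from math import comb

def logical_error_rate(p: float, n: int) -> float:
    """Chance that a majority vote over n copies (n odd) returns the wrong bit.

    Assumes each copy flips independently with probability p, so the vote
    fails whenever more than half of the copies flip.
    """
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )

if __name__ == "__main__":
    # Compare a low and a high physical error rate as copies are added.
    for p in (0.01, 0.6):
        rates = [logical_error_rate(p, n) for n in (1, 3, 5, 7)]
        print(p, [f"{r:.2e}" for r in rates])
```

With `p = 0.01`, adding copies drives the logical error rate down; with `p = 0.6`, every extra copy makes things worse. In this toy the crossover sits at `p = 0.5`.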

As the draft of a new manuscript describing this work puts it, "To the best of our knowledge, none of these experiments have demonstrated logical error rates better than the physical error rates."

Microsoft is also well-positioned to do this work. Its role as a service provider requires it to translate generic quantum code into the specific commands that Quantinuum's hardware executes, which includes acting as a compiler provider. In at least part of this work, it used that knowledge to optimize the code specifically to cut down on the time spent moving ions around.

Error correction actually corrects errors

The work involved three experiments. In the first, the researchers formed a logical qubit with seven information-holding hardware qubits and three ancillary qubits for error detection and correction. The 32 qubits in the hardware allowed two of these to be created; they were then entangled, which required two gate operations. The researchers checked for errors during the initialization of the qubits and after the entanglement, and repeated these operations thousands of times to derive error rates.

On individual hardware qubits, the error rate was 0.50 percent. With error correction, this rate dropped to 0.05 percent. But the system could do even better if it identified readings that indicated difficult-to-interpret error states and discarded those calculations. Discarding them dropped the error rate to 0.001 percent. These instances were rare enough that the team didn't have to throw out a significant number of operations, but they still made a huge difference in the error rate.
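Discarding hard-to-interpret results (often called post-selection) can be illustrated with the same kind of toy. The sketch below assumes a four-bit classical repetition code: majority vote decides clear cases, and an exact two-two tie is treated as the ambiguous case. It compares guessing on ties against throwing those shots away. The 0.5 percent input only echoes the article's physical error rate; the toy's outputs are not meant to reproduce the experiment's numbers, and the helper names are hypothetical.

```python
from math import comb

def prob_flips(p: float, n: int, k: int) -> float:
    """Probability that exactly k of n bits flip, each independently with probability p."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

def compare_postselection(p: float, n: int = 4) -> dict[str, float]:
    """Toy n-bit repetition code (n even) decoded by majority vote.

    A tie (exactly n/2 flips) stands in for a 'difficult-to-interpret' reading.
    """
    tie = prob_flips(p, n, n // 2)
    wrong = sum(prob_flips(p, n, k) for k in range(n // 2 + 1, n + 1))
    return {
        # Keep every shot and guess on ties: half of those guesses are wrong.
        "error_keep_all": wrong + tie / 2,
        # Fraction of shots thrown away when ties are discarded.
        "discard_fraction": tie,
        # Error rate among the shots that survive the discard.
        "error_postselected": wrong / (1 - tie),
    }

if __name__ == "__main__":
    for name, value in compare_postselection(0.005).items():
        print(f"{name}: {value:.2e}")
```

In this toy, ties occur with probability 6 p^2 (1 - p)^2, roughly one shot in 7,000 at `p = 0.005`, yet discarding them lowers the error rate among kept shots by more than a factor of 100. That mirrors the article's point that rare discards can make a large difference.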

Next, the team switched to what they call a "Carbon code," which requires 30 physical qubits (24 data and six correction/detection), so the hardware could host only one. But the code was also optimized for the hardware. "Knowing the two-qubit gate fidelities, knowing how many interaction zones, how much parallelism you can have, we then optimize our error-correction codes for that," Svore said.

The Carbon code also makes it possible to identify errors that are difficult to correct properly, allowing those results to be discarded. With error correction and discarding of difficult-to-fix errors, the error rate dropped from 0.8 percent to 0.001 percent, an 800-fold improvement.
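The improvement factors in these experiments follow directly from the reported rates: divide the physical error rate by the logical one. A quick check using only the percentages reported in the article (the `improvement` helper and the table layout are just for illustration):

```python
def improvement(physical_pct: float, logical_pct: float) -> float:
    """How many times lower the logical error rate is than the physical one."""
    return physical_pct / logical_pct

# Error rates reported in the article, in percent: (label, physical, logical).
REPORTED = [
    ("seven-data-qubit code, error correction", 0.50, 0.05),
    ("seven-data-qubit code, correction + discarding", 0.50, 0.001),
    ("Carbon code, correction + discarding", 0.8, 0.001),
]

if __name__ == "__main__":
    for label, physical, logical in REPORTED:
        print(f"{label}: {improvement(physical, logical):.0f}x lower")
```

The Carbon code's 800-fold figure reflects its higher 0.8 percent physical baseline; the first experiment reached the same 0.001 percent logical rate from a 0.50 percent baseline, a 500-fold drop.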

Finally, the researchers performed repeated rounds of gate operations followed by error detection and correction on a logical qubit using the Carbon code. After one round, error correction again produced a major improvement, cutting the error rate by about an order of magnitude. By the second round, however, error correction had only cut the error rate in half, and any effect was statistically insignificant by round three.

So while the results tell us that error correction works, they also indicate that our current hardware isn't yet sufficient to allow for the extended operations that useful calculations will require. Still, Svore said, "I think this marks a critical milestone on the path to more elaborate computations that are fault tolerant and reliable" and emphasized that it was done on production commercial hardware rather than a one-of-a-kind academic machine.
