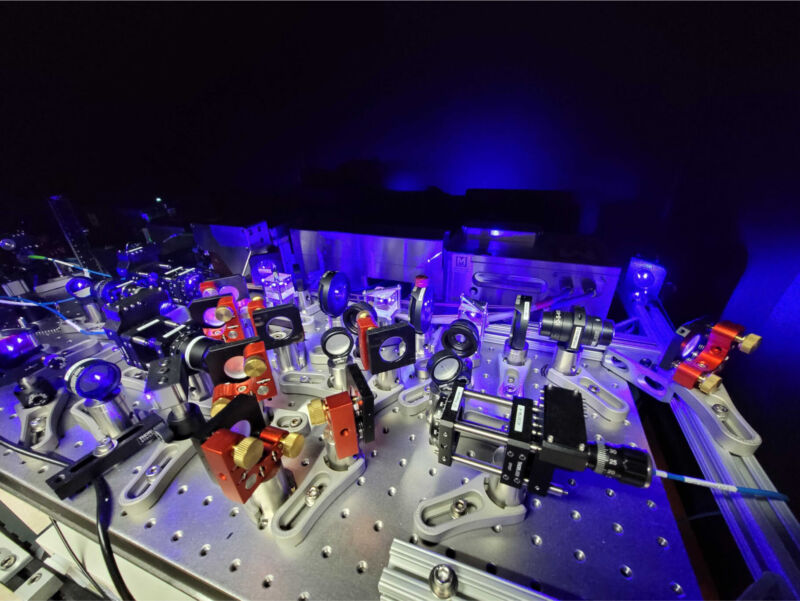

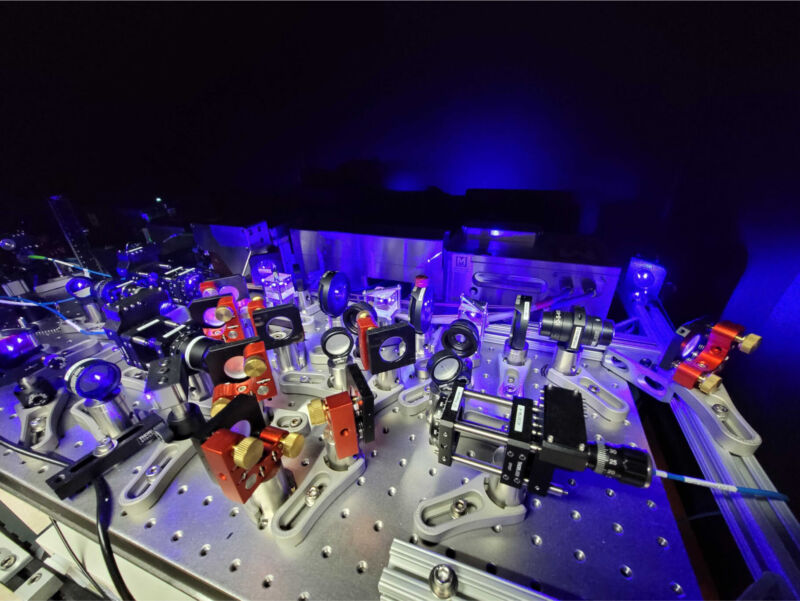

Some of the optical hardware needed to get QuEra's machine to work.

QuEra

There's widespread agreement that most useful quantum computing will have to wait for the development of error-corrected qubits. Error correction involves distributing a bit of quantum information—termed a logical qubit—among a small collection of hardware qubits. The disagreements mostly focus on how best to implement it and how long it will take.

A key step toward that future is described in a paper released in Nature today. A large team of researchers, primarily based at Harvard University, has now demonstrated the ability to perform multiple operations on as many as 48 logical qubits. The work shows that the system, based on hardware developed by the company QuEra, can correctly identify the occurrence of errors, and that doing so can significantly improve the results of calculations.

Yuval Boger, QuEra's chief marketing officer, told Ars: "We feel it is a very significant milestone on the path to where we all want to be, which is large-scale, fault-tolerant quantum computers."

Catching and fixing errors

Complex quantum algorithms can require hours of maintaining and manipulating quantum information, and existing hardware qubits aren't likely to ever reach the point where they're capable of handling that without causing errors. The generally accepted solution to this is to work with error-correcting logical qubits instead. These involve distributing each qubit's worth of quantum information among a collection of hardware qubits so that an error in one of those hardware qubits doesn't completely destroy the information.

Additional qubits can add error correction to these logical qubits. These are linked to the hardware qubits that hold the logical qubits, allowing their state to be monitored in a way that will identify when errors have occurred. Further manipulations guided by these additional qubits can restore the state that was lost when the error happened.

In theory, this error correction can allow the hardware to hold quantum states for far longer than the individual hardware qubits are capable of.
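As a rough illustration of the idea, here is a minimal Python sketch of a classical three-bit repetition code. It is an analogy only: real quantum codes also have to handle phase errors and cannot simply copy a state, and this is not the scheme used in the Nature paper. The function names are made up for the example.

```python
import random

def encode(bit, n=3):
    """Spread one logical bit across n hardware bits (classical repetition code)."""
    return [bit] * n

def syndrome(bits):
    """Parity of each neighboring pair; a 1 marks a disagreement between neighbors."""
    return [bits[i] ^ bits[i + 1] for i in range(len(bits) - 1)]

def correct(bits):
    """Restore the majority value, which undoes any minority of flipped bits."""
    majority = int(sum(bits) > len(bits) / 2)
    return [majority] * len(bits)

def flip_one(bits, rng):
    """Flip a single randomly chosen hardware bit to model one error."""
    damaged = list(bits)
    damaged[rng.randrange(len(damaged))] ^= 1
    return damaged

rng = random.Random(0)
stored = encode(1)
damaged = flip_one(stored, rng)
print("syndrome:", syndrome(damaged))   # non-zero entries reveal that an error occurred
print("recovered:", correct(damaged))   # majority vote restores the stored value
```

The parity checks play the role of the additional qubits: they show that something went wrong, and roughly where, and the correction step then restores the stored value.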

The trade-off is significantly increased complexity and qubit counts. The latter should be obvious—if each logical qubit requires a dozen qubits, then you need a lot more hardware qubits to run any algorithm. Full error correction would also require repeated measurements to identify when errors have occurred, identify the type of error, and perform the necessary corrections. And all of that would have to happen while the logical qubits are also being used for running those algorithms.

There are also the practicalities of getting any of this to work. It's really easy (by a very relaxed definition of "easy") to understand how to perform operations on pairs of hardware qubits. It's far more difficult to understand how to do them when any individual hardware qubit holds, at most, only a fraction of a logical qubit. Adding to the complexity is that there are a variety of potential error-correction schemes, and we're still figuring out their trade-offs in terms of robustness, convenience, and qubit use.

That's not to say that there hasn't been progress. Error-corrected qubits have been demonstrated, and they do maintain quantum information better than the hardware qubits that host them. In a few cases, individual quantum operations (termed gates) have been demonstrated using pairs of logical qubits. And two companies (Atom Computing and IBM) have been ramping up qubit counts to provide enough hardware to host lots of logical qubits.

Enter QuEra

Like Atom Computing, QuEra's hardware uses neutral atoms, which have several advantages. Quantum information gets stored in the nuclear spin of individual atoms, which is relatively stable in terms of maintaining quantum information. And, since every atom of a given isotope is equivalent, there's no device-to-device variation as there is in qubits based on superconducting hardware. Individual atoms can be addressed with lasers instead of needing wiring, and the atoms can be moved around, potentially allowing any qubit to be linked to any other.

QuEra's current generation of hardware supports up to 280 atom-based qubits. For this work, those atoms were moved around among several functional regions. One is simply storage, where qubits live when they're not being manipulated or measured. This holds both any logical qubits in use and a pool of unused qubits that can be mobilized over the course of executing an algorithm. There's also an "entanglement zone" where manipulations take place and a readout zone where the state of individual qubits can be measured without disturbing qubits elsewhere in the hardware.

Boger drew a comparison between this architecture and traditional computers, telling Ars that "the advantage of this architecture is you could, for instance, double your memory without changing your CPU." In terms of the actual hardware, QuEra could potentially alter the hardware that holds the atoms in memory without changing the system it uses for readout.

Several aspects of neutral atom qubits make performing operations on logical qubits somewhat easier. For example, performing an operation on a logical qubit can be as simple as moving all the atoms that comprise it into the entanglement zone and shining a single laser on them, essentially performing the same operation on all of the logical qubit's component atoms at once. A similar thing can be done with two logical qubits to perform a gate operation on them.

In addition, the separate measurement zone allows the qubits used for error correction to be moved and measured while algorithms are in process without disrupting any of the other components of the logical qubit. Alternatively, in some of the experiments here, these qubits are held in memory until after an algorithm is complete. At this point, they can be measured and the results discarded if there's an indication that an error took place.

Working logically

Even the process of initializing logical qubits showed their potential benefit. By selecting instances where later measurements showed no indication of errors, the fidelity of the initialization reached over 99.9 percent, well above the rate of success when individual hardware qubits are initialized (99.3 percent).

In addition, the research team tested various error-correction schemes that used different numbers of qubits. As the number of hardware qubits in the logical qubit went up, the overall error rate dropped.
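To get a feel for why larger codes help, here is a small Python calculation for a classical repetition code decoded by majority vote. This is a stand-in, not one of the schemes tested in the paper, and the 1 percent per-bit error probability is an assumption chosen only for illustration.

```python
from math import comb

def logical_error_rate(n, p):
    """Chance that more than half of n bits flip, which defeats majority voting."""
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )

p = 0.01  # assumed per-bit error probability, for illustration only
for n in (1, 3, 5, 7):
    print(f"{n} bits per logical bit: error rate {logical_error_rate(n, p):.2e}")
```

Each step up in size pushes the failure rate down sharply, as long as the per-bit error rate starts out low enough.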

This isn't full error correction. "What is happening in the paper is that the errors are corrected only after the calculation is done," Boger said. "So, what we have not demonstrated yet is mid-circuit correction, where during the calculation, we measure... an indication of whether there's an error, correct it, and move forward."

The researchers then performed a variety of algorithms using the logical qubits. In one case, they could use classical computers to estimate the probabilities of different outcomes of a series of manipulations; that could then be compared with actually performing the manipulations. Without any sort of error detection, there was a fair bit of noise in the outcome of the experiment. But as the researchers got more stringent about rejecting measurements with indications of errors, the results got progressively cleaner. One measurement of accuracy rose from 0.16 to 0.62.
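The effect of throwing out flagged runs can be sketched with a toy simulation in Python. The error and detection probabilities below are invented for the example and are not taken from the paper; the point is only that discarding runs with a detected error raises the accuracy of what remains, at the cost of keeping fewer runs.

```python
import random

def run_once(rng, error_prob=0.3, detect_prob=0.8):
    """Return (result_is_correct, error_flagged) for one simulated run."""
    had_error = rng.random() < error_prob
    # Only runs that actually had an error can be flagged in this toy model.
    flagged = had_error and rng.random() < detect_prob
    return (not had_error, flagged)

def accuracy(runs):
    """Fraction of runs whose result is correct."""
    return sum(ok for ok, _ in runs) / len(runs)

rng = random.Random(0)
runs = [run_once(rng) for _ in range(10_000)]
kept = [r for r in runs if not r[1]]  # post-selection: drop flagged runs

print(f"all runs: {accuracy(runs):.3f} correct")
print(f"kept {len(kept)} of {len(runs)}: {accuracy(kept):.3f} correct")
```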

In a separate set of experiments, the researchers tested algorithms on collections of logical qubits that ranged in size from three up to 48. In all these cases, the logical qubits outperformed both physical qubits and logical qubits where no measures were taken to limit the impact of errors, even in algorithms that involved as many as 270 gate operations. In the operations on 48 logical qubits, the difference in performance was a factor of 10.

The researchers also estimate that adding just three more operations would be enough to make the system intractable to simulation using classical computers.

What's next?

Boger told Ars that QuEra plans to provide a road map for its future developments in January. But it's possible to infer a fair amount about what still needs to be done. For starters, as Boger said, this isn't full error correction done while calculations are in progress, and QuEra is working on that. In addition, the algorithms used in these tests aren't useful in the sense that no commercial customer would pay to run them. The logical qubit count will have to be brought up before that's going to be possible.

The other reason to bring the qubit count up is that, as this work demonstrates, having more qubits to use for a logical qubit drops the error rate. If each logical qubit required 10 hardware qubits, the current QuEra hardware could only host 28 of them at once. Obviously, running these demonstrations with 48 logical qubits means that fewer than 10 hardware qubits were used for each, so more errors ended up uncaught than might be possible on a larger machine.
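The qubit budget here is simple integer division, using the 280-atom figure from earlier in the article. The quick Python check below treats every atom as available for logical qubits, which is an assumption; in practice some may be set aside for other uses.

```python
HARDWARE_QUBITS = 280  # atom count stated for QuEra's current hardware

def max_logical_qubits(hardware, per_logical):
    """How many logical qubits fit if each one needs per_logical hardware qubits."""
    return hardware // per_logical

def max_hardware_per_logical(hardware, logical):
    """Largest whole number of hardware qubits available to each logical qubit."""
    return hardware // logical

print(max_logical_qubits(HARDWARE_QUBITS, 10))        # 28
print(max_hardware_per_logical(HARDWARE_QUBITS, 48))  # 5
```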

That said, QuEra states in its paper that optimized control and boosted laser power should allow this architecture to reach 10,000 physical qubits, so there should be quite a bit of headroom there. And, since all control operations are handled using lasers, it should be possible to use photonic links to bridge separate pieces of hardware. Boger also mentioned that boosting the readout system should boost performance by cutting down the time available for errors to take place.

But the value of all of that potential progress is predicated on the belief that we'd ultimately be able to perform a complex series of manipulations on logical qubits and correct any errors in real time. The first half of that has now moved out of the realm of belief and into the list of technologies that have been demonstrated.

Nature, 2023. DOI: 10.1038/s41586-023-06927-3
