One academic and two people from DeepMind take home the Nobel.

On Wednesday, the Nobel Committee announced that it had awarded the Nobel Prize in chemistry to researchers who pioneered major breakthroughs in computational chemistry. These include two researchers at Google's DeepMind in acknowledgment of their role in developing AI software that could take a raw protein sequence and use it to predict the three-dimensional structure the protein would adopt in cells. Separately, the University of Washington's David Baker was honored for developing software that could design entirely new proteins with specific structures.

The award gives this year's prizes a bit of a theme, as yesterday's physics prize also honored AI developments. In that case, the connection to physics seemed a bit tenuous, but here, there should be little question that the developments solved major problems in biochemistry.

Understanding protein structure

DeepMind, represented by Demis Hassabis and John Jumper, had developed AIs that managed to master games as diverse as chess and StarCraft. But it was always working on more significant problems in parallel, and in 2020, it surprised many people by announcing that it had tackled one of the biggest computational challenges in existence: the prediction of protein structures.

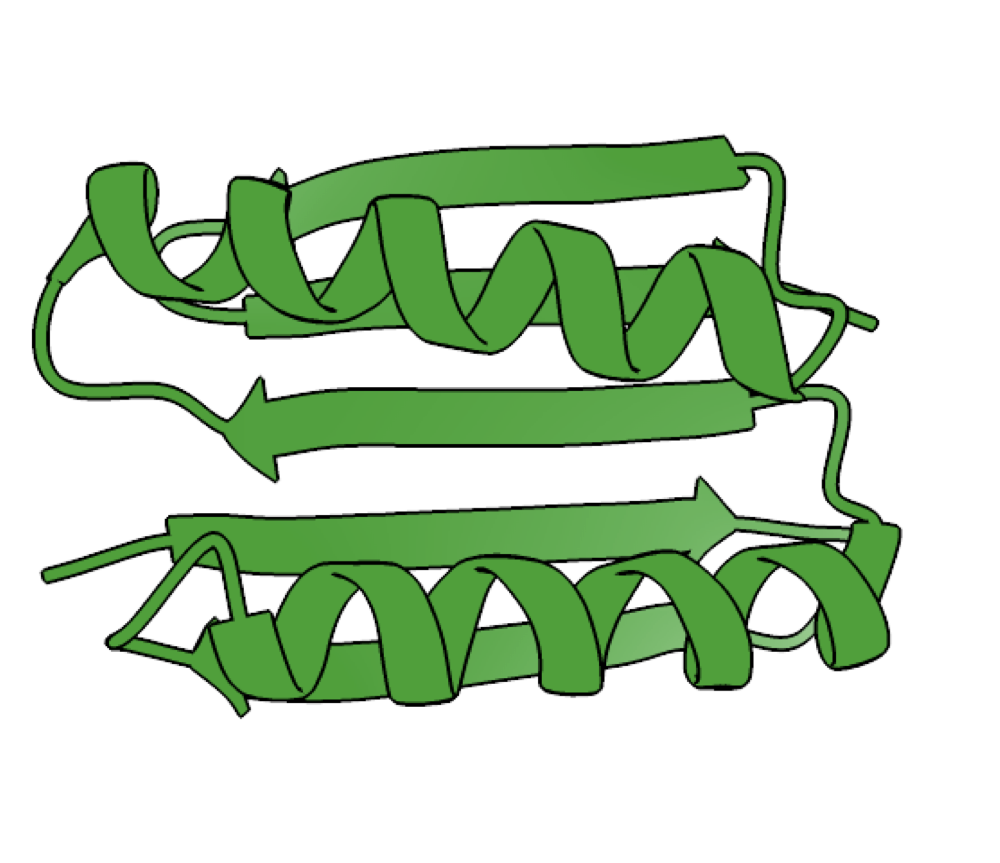

Chemically, proteins are a linear string of amino acids linked together, with living creatures typically having the choice of 20 different amino acids for each position along the string. Most of those 20 have distinctive chemical properties: some are acidic, others basic; some carry a negative charge, others a positive charge, and still others are neutral. These properties allow different areas of the string to interact with each other, causing it to fold up into a complex three-dimensional structure. That structure is essential for the protein's function.
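To make the idea of "chemical properties" a little more concrete, here is a minimal Python sketch that sorts the 20 standard amino acids (by one-letter code) into rough charge classes and tallies an approximate net charge for a sequence. The function names and the example sequence are hypothetical and exist only for illustration. The classification is a simplification: it assumes physiological pH, treats histidine as neutral, and ignores the chain ends and local environment effects that real charge calculations account for.

```python
# Rough side-chain charge classes at physiological pH (~7.4).
# Assumption: histidine (H) is treated as neutral here, although it can be
# partly positively charged near neutral pH.
STANDARD_AMINO_ACIDS = set("ACDEFGHIKLMNPQRSTVWY")  # the 20 one-letter codes
BASIC_POSITIVE = {"K", "R"}   # lysine, arginine
ACIDIC_NEGATIVE = {"D", "E"}  # aspartate, glutamate


def charge_class(residue: str) -> str:
    """Classify one amino acid (one-letter code) as positive, negative, or neutral."""
    r = residue.upper()
    if r not in STANDARD_AMINO_ACIDS:
        raise ValueError(f"not a standard amino acid code: {residue!r}")
    if r in BASIC_POSITIVE:
        return "positive"
    if r in ACIDIC_NEGATIVE:
        return "negative"
    return "neutral"


def approximate_net_charge(sequence: str) -> int:
    """Positive minus negative residues; ignores chain ends and local pKa shifts."""
    score = {"positive": 1, "negative": -1, "neutral": 0}
    return sum(score[charge_class(r)] for r in sequence)


if __name__ == "__main__":
    seq = "MKRDEAG"  # hypothetical example sequence
    print([charge_class(r) for r in seq])
    print(approximate_net_charge(seq))
```

Oppositely charged residues attract each other, so a pair like lysine (K) and glutamate (E) sitting far apart in the string can end up next to each other in the folded structure. This is one of the many interactions that structure prediction has to account for.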

Typically, figuring out the structure involves laborious biochemistry to purify the protein, followed by a number of imaging techniques to determine where each of its atoms resides. But in theory, all of that should be predictable, since the structure is just the product of chemistry and physics. Because any amino acid could potentially interact with any other on the chain, however, the complexity of making predictions rises very rapidly with the length of the protein. Extend the chain past a dozen amino acids, and the problem could quickly humble even the most powerful supercomputers.
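A quick back-of-the-envelope calculation shows how fast this blows up. The sketch below counts the residue pairs that could, in principle, interact, which is n(n-1)/2 for a chain of n amino acids. It also gives a brute-force conformation estimate in the spirit of the classic Levinthal thought experiment. The figure of three backbone states per residue is an illustrative assumption borrowed from that thought experiment and does not come from this article. The function names are hypothetical.

```python
from math import comb


def residue_pairs(n: int) -> int:
    """Distinct residue pairs in an n-residue chain that could, in principle, interact."""
    return comb(n, 2)


def conformation_count(n: int, states_per_residue: int = 3) -> int:
    """Levinthal-style estimate: each residue independently adopts one of k states.

    Assumption: k = 3 is an illustrative value, not a measured one.
    """
    return states_per_residue ** n


if __name__ == "__main__":
    for n in (12, 50, 100):
        # Pair counts grow quadratically; conformation counts grow exponentially.
        print(n, residue_pairs(n), f"{conformation_count(n):.2e}")
```

The pair count only grows quadratically. The number of possible overall shapes, however, grows exponentially with chain length, and that exponential growth is what makes exhaustive search hopeless for realistic proteins.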

A lot of work over the years went into trying to figure out computational shortcuts. DeepMind, by contrast, did what it did best and put an AI on the case. For protein folding, the AI was trained on two large existing data sets. One included every protein structure that had been solved through lab work, allowing it to extract general principles for how different amino acids typically interact. The second contained the sequences of every protein we've determined, allowing it to identify proteins related through evolution and determine what sorts of flexibility can be tolerated in a given structure.

The net result is software that produced reasonable structural predictions, easily beating every other software package we'd developed in a regular computational challenge. DeepMind has since used it to generate predictions for most of the existing protein-coding genes in the databases (it still struggles with excessively long ones) and has continued to upgrade the software. The predictions aren't perfect, and some appear to stumble badly, but when the alternative is simply a string of amino acids and a shrug, this represents a major advance.

Rolling our own

The University of Washington's David Baker had also tried to tackle the protein-folding problem for a number of years, taking some distinct approaches to it. The Rosetta software developed by his group was adapted to operate as a distributed computing project (Rosetta@home) and later served as the basis of a protein-folding game called Foldit. When DeepMind announced its AlphaFold software, he was part of a group that quickly adopted some of its principles into a version they called RoseTTAFold.

But his group has also tackled a second question: Can we use the ability to predict protein structures to essentially do the converse of figuring out the structure of natural proteins? In other words, can we design proteins that don't exist in the natural world to fold up into specific structures?

Over the last few years, those questions have clearly been answered with a "yes." It sometimes feels like I receive monthly mailings about the latest breakthroughs coming out of the Baker lab, and a number of them seem significant. For example, the lab has developed systems that can take a target protein and suggest the amino acid sequence of an antibody that will stick to it. The lab has also developed software that can take a protein with a known structure and design other proteins that will interact with and inhibit it.

These targeted inhibitors have the potential to inhibit proteins that are mutated in genetic diseases or provide a rapid response to emerging pathogens. They might not always be the best or fastest way of achieving those things, but the more options we have, the better. And they're just a narrow snapshot of all the projects that appear to be going on in the lab.

Given that science is a massively collaborative endeavor and that each Nobel Prize is limited to honoring at most three individuals, there will always be arguments over whether some worthy contributors were left out. But in this case, there's unlikely to be much argument over whether the achievements were worthy of the attention.
