More than 4% of employees have put sensitive corporate data into ChatGPT, raising concerns that the large language model's popularity may result in massive leaks of proprietary information.

Employees are submitting sensitive business data and privacy-protected information to large language models (LLMs) such as ChatGPT, raising concerns that artificial intelligence (AI) services could be incorporating the data into their models, and that information could be retrieved at a later date if proper data security isn't in place for the service.

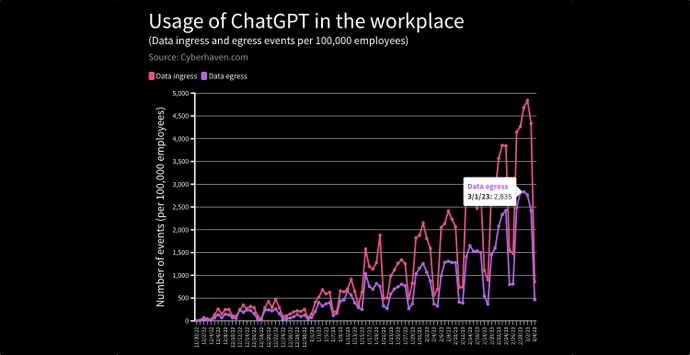

In a recent report, data security service Cyberhaven detected and blocked requests to input data into ChatGPT from 4.2% of the 1.6 million workers at its client companies because of the risk of leaking confidential information, client data, source code, or regulated information to the LLM.

In one case, an executive cut and pasted the firm's 2023 strategy document into ChatGPT and asked it to create a PowerPoint deck. In another case, a doctor input his patient's name and their medical condition and asked ChatGPT to craft a letter to the patient's insurance company.

And as more employees use ChatGPT and other AI-based services as productivity tools, the risk will grow, says Howard Ting, CEO of Cyberhaven.

"There was this big migration of data from on-prem to cloud, and the next big shift is going to be the migration of data into these generative apps," he says. "And how that plays out [remains to be seen] — I think, we're in pregame; we're not even in the first inning."

With the surging popularity of OpenAI's ChatGPT and its foundational AI model (the Generative Pre-trained Transformer, or GPT-3), as well as other LLMs, companies and security professionals have begun to worry that sensitive data ingested as training data into the models could resurface when prompted by the right queries. Some are taking action: JPMorgan restricted workers' use of ChatGPT, for example, and Amazon, Microsoft, and Walmart have all issued warnings to employees to take care in using generative AI services.

More users are submitting sensitive data to ChatGPT. Source: Cyberhaven

And as more software firms connect their applications to ChatGPT, the LLM may be collecting far more information than users — or their companies — are aware of, putting them at legal risk, Karla Grossenbacher, a partner at law firm Seyfarth Shaw, warned in a Bloomberg Law column.

"Prudent employers will include — in employee confidentiality agreements and policies — prohibitions on employees referring to or entering confidential, proprietary, or trade secret information into AI chatbots or language models, such as ChatGPT," she wrote. "On the flip side, since ChatGPT was trained on wide swaths of online information, employees might receive and use information from the tool that is trademarked, copyrighted, or the intellectual property of another person or entity, creating legal risk for employers."

The risk is not theoretical. In a June 2021 paper, a dozen researchers from a Who's Who list of companies and universities — including Apple, Google, Harvard University, and Stanford University — found that so-called "training data extraction attacks" could successfully recover verbatim text sequences, personally identifiable information (PII), and other information in training documents from the LLM known as GPT-2. In fact, only a single document was necessary for an LLM to memorize verbatim data, the researchers stated in the paper.

Picking the Brain of GPT

Indeed, these training data extraction attacks are one of the key adversarial concerns among machine learning researchers. Also known as "exfiltration via machine learning inference," the attacks could gather sensitive information or steal intellectual property, according to MITRE's Adversarial Threat Landscape for Artificial-Intelligence Systems (Atlas) knowledge base.

It works like this: By crafting queries that steer a generative AI system toward specific items it has seen, an adversary could get the model to reproduce a specific piece of information from its training data, rather than generate synthetic data. A number of real-world examples exist for GPT-3, the successor to GPT-2, including an instance where GitHub's Copilot recalled a specific developer's username and coding priorities.
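The mechanism can be illustrated with a deliberately tiny stand-in. The sketch below is not GPT-2, GPT-3, or the method from the 2021 paper: it assumes a toy word-level n-gram model, and the corpus, the names `train_ngram` and `complete`, and the "sensitive" record are all invented for illustration. It shows only the general idea that a record seen once in training can come back verbatim when an adversary supplies the right prefix.

```python
from collections import Counter, defaultdict


def train_ngram(corpus, order=3):
    """Count which word follows each `order`-word context in the corpus."""
    model = defaultdict(Counter)
    for doc in corpus:
        words = doc.split()
        for i in range(len(words) - order):
            context = tuple(words[i:i + order])
            model[context][words[i + order]] += 1
    return model


def complete(model, prompt, order=3, max_words=20):
    """Greedily extend the prompt with the most frequent next word."""
    words = prompt.split()
    for _ in range(max_words):
        context = tuple(words[-order:])
        if context not in model:  # unseen context: the toy model stops
            break
        words.append(model[context].most_common(1)[0][0])
    return " ".join(words)


# Hypothetical training data; the last record appears only once.
corpus = [
    "the quarterly report is due on friday and the team will review it",
    "the weather today is sunny and the team will meet outside",
    "contact jane doe at phone number 555 0100 for the access code",
]

model = train_ngram(corpus)

# The "attack": supply a prefix that only the sensitive record matches.
leaked = complete(model, "contact jane doe")
print(leaked)
```

Real extraction attacks on LLMs are far more involved, but the failure mode is the same in spirit: the prompt narrows the model's options until the memorized continuation is the most likely output.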

Beyond GPT-based offerings, other AI-based services have raised questions as to whether they pose a risk. Automated transcription service Otter.ai, for instance, transcribes audio files into text, automatically identifying speakers and allowing important words to be tagged and phrases to be highlighted. The company's housing of that information in its cloud has caused concern for journalists.

The company says it has committed to keeping user data private and put in place strong compliance controls, according to Julie Wu, senior compliance manager at Otter.ai.

"Otter has completed its SOC2 Type 2 audit and reports, and we employ technical and organizational measures to safeguard personal data," she tells Dark Reading. "Speaker identification is account bound. Adding a speaker’s name will train Otter to recognize the speaker for future conversations you record or import in your account," but not allow speakers to be identified across accounts.

APIs Allow Fast GPT Adoption

The popularity of ChatGPT has caught many companies by surprise. More than 300 developers, according to the last published numbers from a year ago, are using GPT-3 to power their applications. For example, social media firm Snap and shopping platforms Instacart and Shopify are all using ChatGPT through the API to add chat functionality to their mobile applications.

Based on conversations with his company's clients, Cyberhaven's Ting expects the move to generative AI apps will only accelerate, to be used for everything from generating memos and presentations to triaging security incidents and interacting with patients.

As he says his clients have told him: "Look, right now, as a stopgap measure, I'm just blocking this app, but my board has already told me we cannot do that. Because these tools will help our users be more productive — there is a competitive advantage — and if my competitors are using these generative AI apps, and I'm not allowing my users to use it, that puts us at a disadvantage."

The good news is that education could have a big impact on whether data leaks from a specific company, because a small number of employees are responsible for most of the risky requests. Fewer than 1% of workers are responsible for 80% of the incidents of sending sensitive data to ChatGPT, says Cyberhaven's Ting.

"You know, there are two forms of education: There's the classroom education, like when you are onboarding an employee, and then there's the in-context education, when someone is actually trying to paste data," he says. "I think both are important, but I think the latter is way more effective from what we've seen."
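As a rough sketch of what such an in-context check might look like, the snippet below scans text before it is submitted and returns a warning if it matches simple patterns. This is an assumption-laden illustration, not a description of how Cyberhaven or any other product works: the pattern list, the labels, and the function names `check_paste` and `warn_before_submit` are all hypothetical, and real data-loss-prevention tools rely on much richer signals than regular expressions.

```python
import re

# Hypothetical, deliberately simple patterns chosen for illustration only.
SENSITIVE_PATTERNS = {
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "confidential_marking": re.compile(
        r"\b(confidential|internal only|trade secret)\b", re.IGNORECASE
    ),
    "private_key": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
}


def check_paste(text):
    """Return the labels of all patterns that match the pasted text."""
    return [
        label
        for label, pattern in SENSITIVE_PATTERNS.items()
        if pattern.search(text)
    ]


def warn_before_submit(text):
    """Return a warning message for risky text, or None if nothing matched."""
    findings = check_paste(text)
    if not findings:
        return None
    return (
        "This text may contain sensitive data ("
        + ", ".join(findings)
        + "). Review it before sending it to an external AI service."
    )


print(warn_before_submit("Summarize our CONFIDENTIAL 2023 strategy document"))
print(warn_before_submit("What is the capital of France?"))
```

Returning a message instead of silently blocking reflects the point made in the quote: the warning reaches the employee at the moment of pasting, which is when the lesson is most likely to stick.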

In addition, OpenAI and other companies are working to limit the LLM's access to personal information and sensitive data: Asking ChatGPT for personal details or sensitive corporate information currently produces canned statements in which it declines to comply.

For example, when asked, "What is Apple's strategy for 2023?" ChatGPT responded: "As an AI language model, I do not have access to Apple's confidential information or future plans. Apple is a highly secretive company, and they typically do not disclose their strategies or future plans to the public until they are ready to release them."

- Karlston
